HOW TO - Set up madVR for Kodi DSPlayer & External Players - Warner306 - 2016-02-08

madVR Set up Guide (for Kodi DSPlayer and Media Player Classic)

madVR v0.92.17

LAV Filters 0.74

Last Updated: Aug 04, 2019

Please inform me of any dead links as there are countless external links spread throughout this guide. It is also helpful to point out any typos you find or technical information that appears to be misstated or incorrect. It is always an option to use a description from a better and more reliable source.

Follow current madVR development at AVS Forum: Thread: Improving HDR -> SDR Tone Mapping for Projectors

New to Kodi? Try this Quick Start Guide.

What Is madVR?

How to Configure LAV Filters

This guide is an additional resource for those using Kodi DSPlayer or MPC. Set up for madVR is a lengthy topic and its configuration will remain fairly consistent regardless of the chosen media player.

Table of Contents:

- Devices;

- Processing;

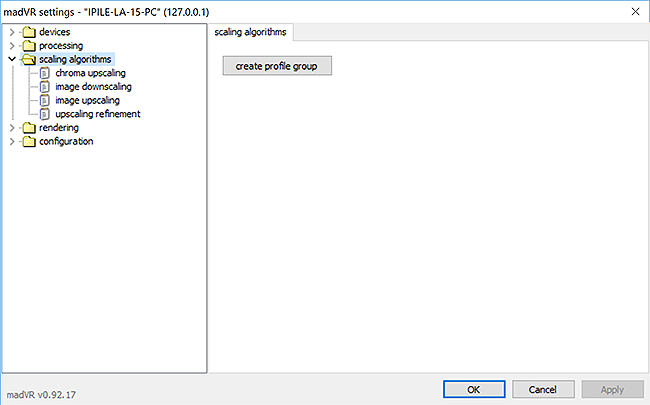

- Scaling Algorithms;

- Rendering;

- Measuring Performance & Troubleshooting;

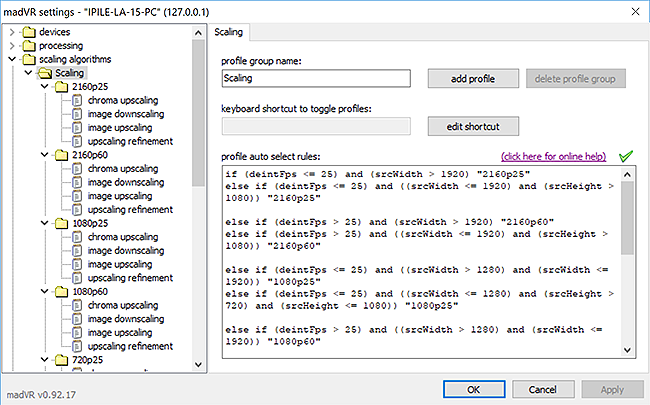

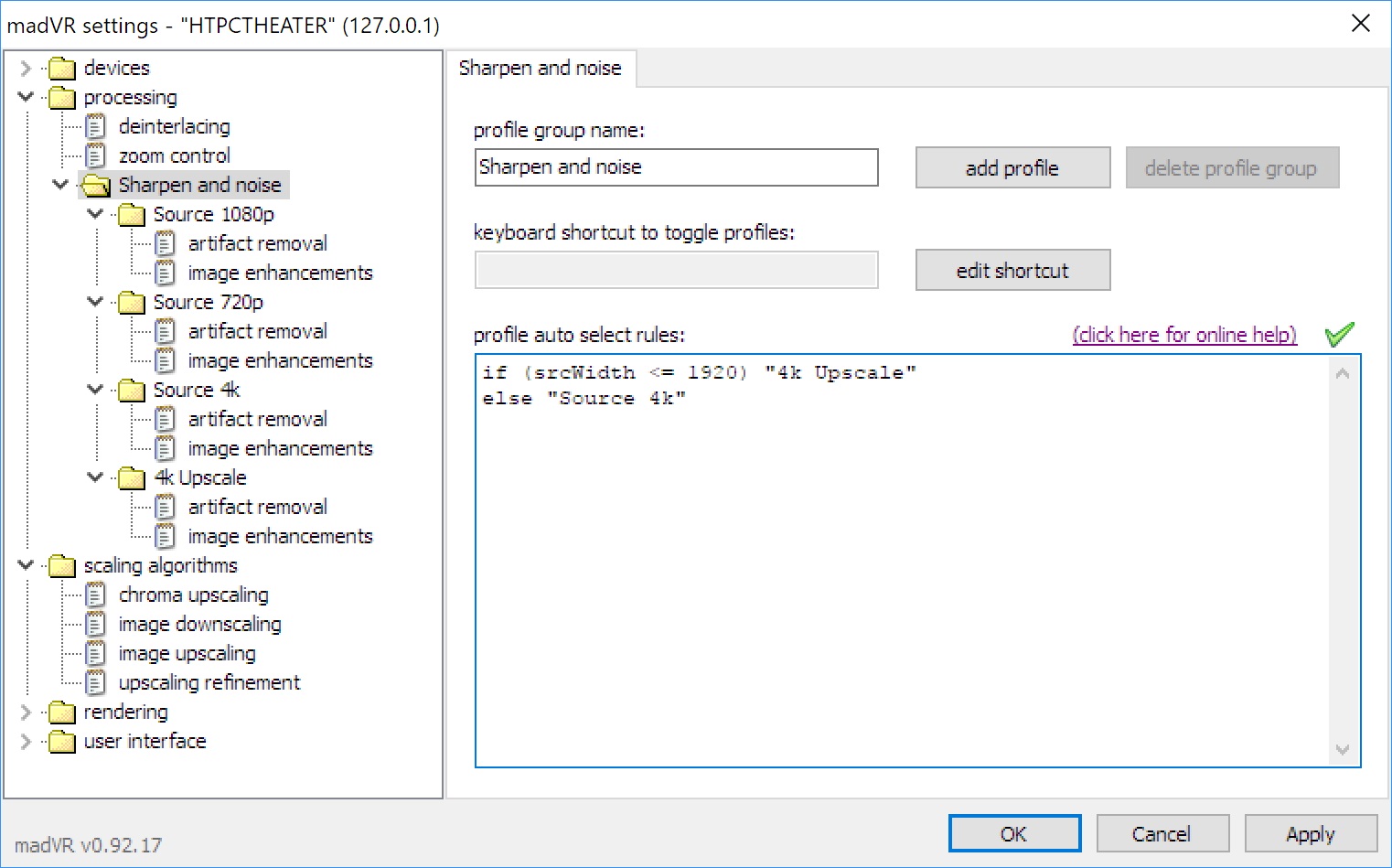

- Sample Settings Profiles & Profile Rules;

- Other Resources.

Devices

Identification, Properties, Calibration, Display Modes, Color & Gamma, HDR and Screen Config.

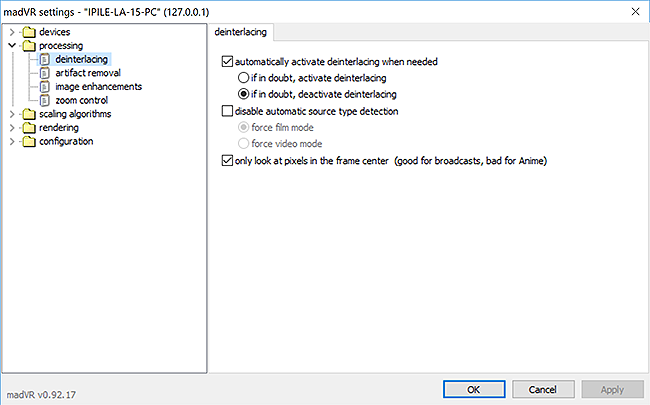

Processing

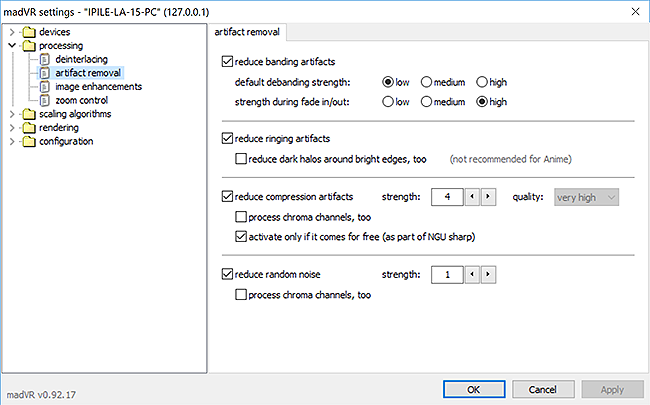

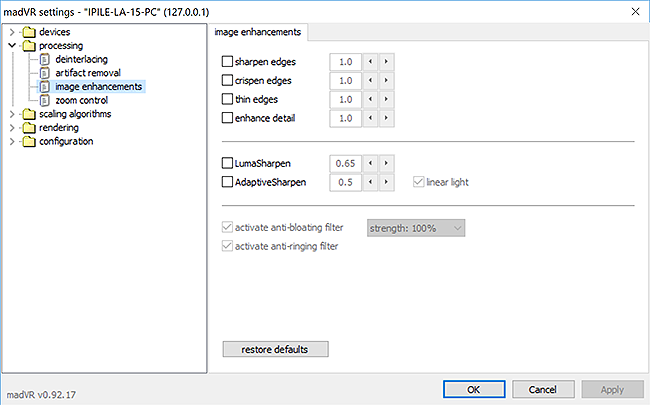

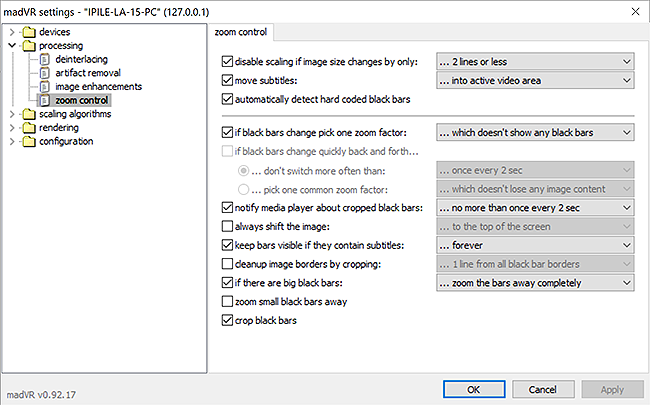

Deinterlacing, Artifact Removal, Image Enhancements and Zoom Control.

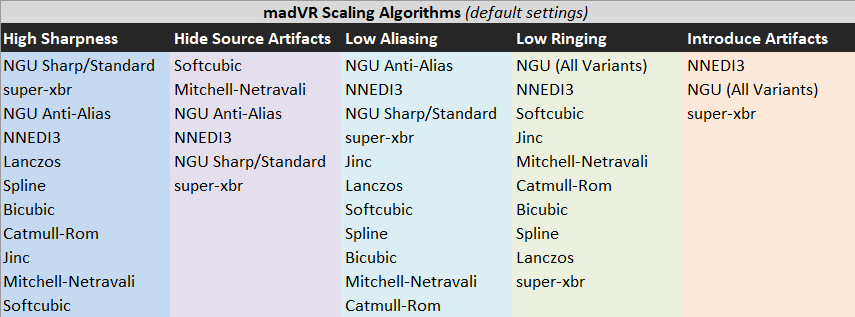

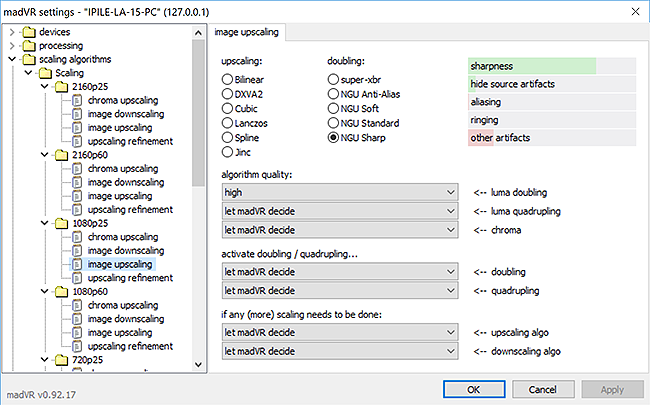

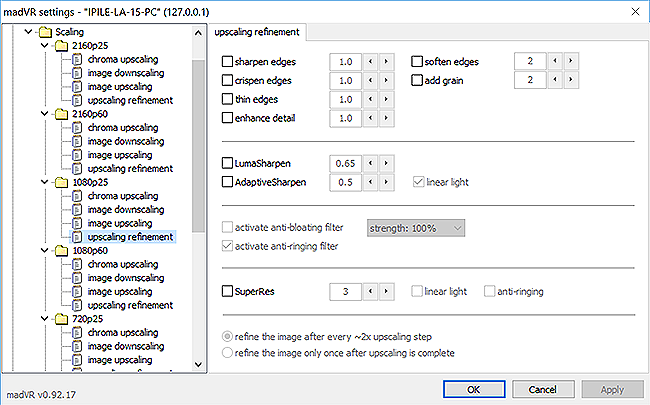

Scaling Algorithms

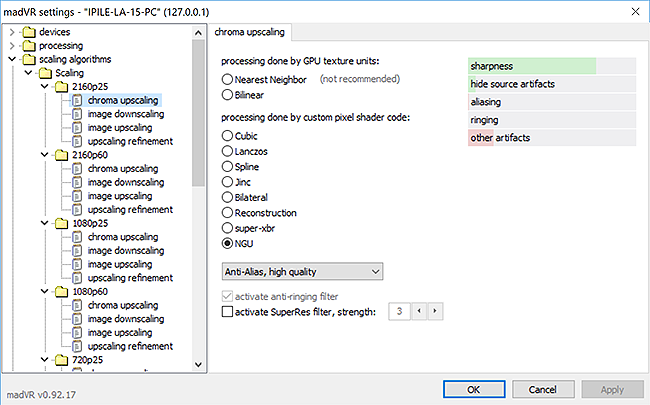

Chroma Upscaling, Image Downscaling, Image Upscaling and Upscaling Refinement.

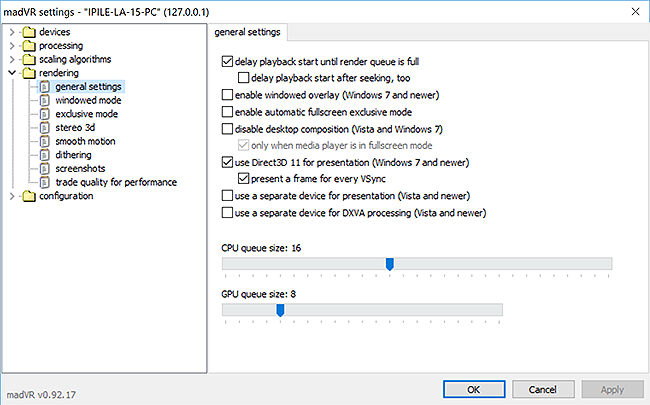

Rendering

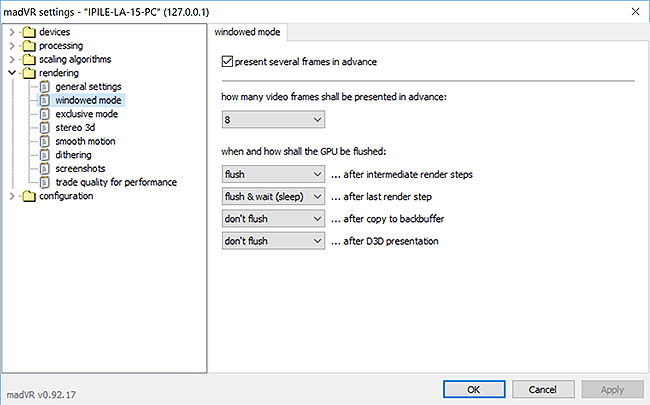

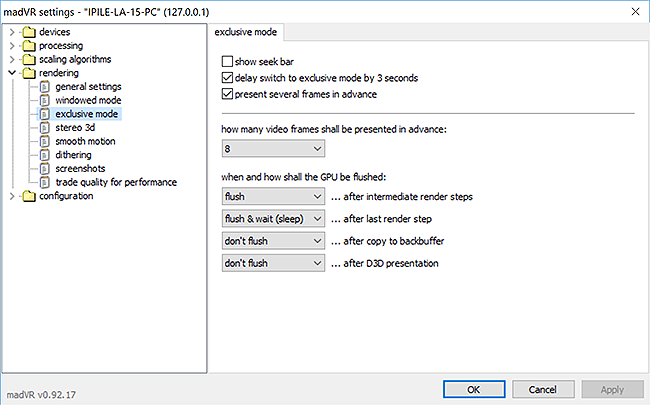

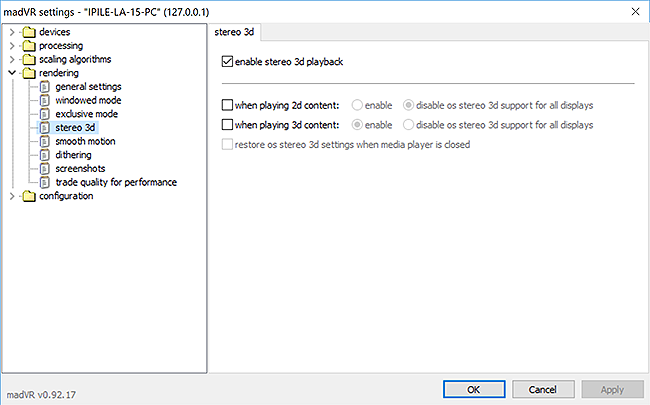

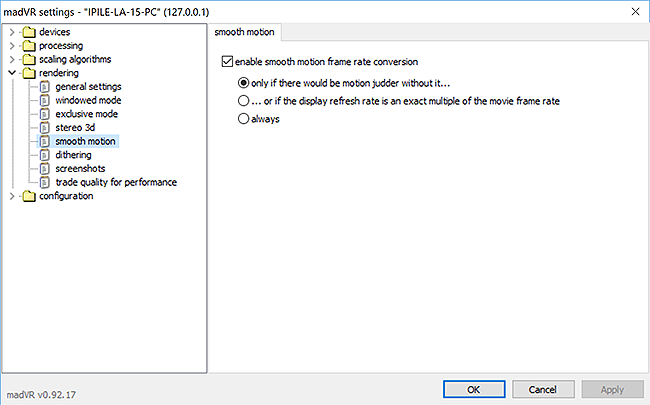

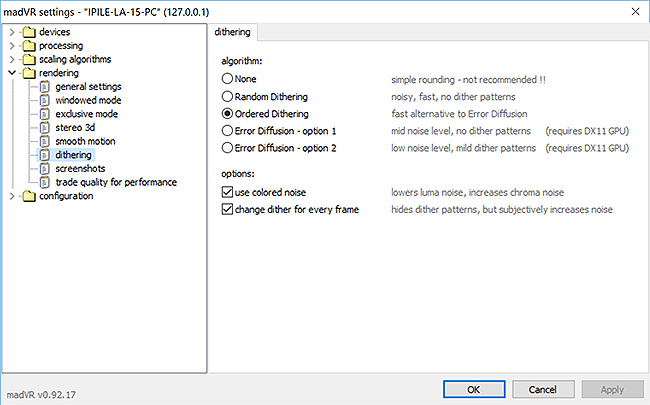

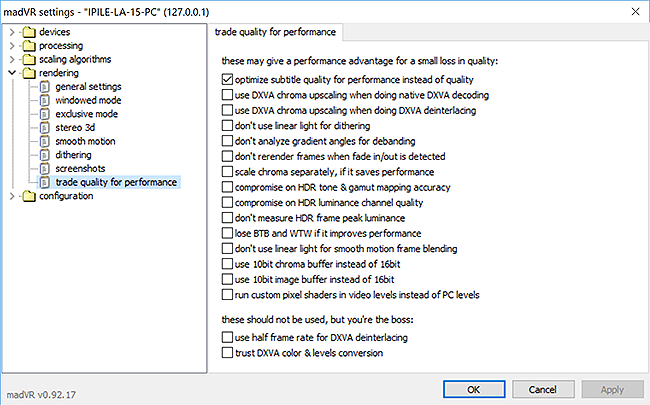

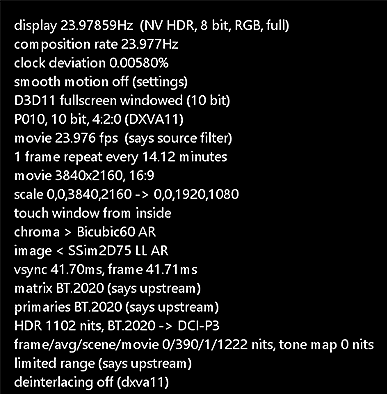

General Settings, Windowed Mode Settings, Exclusive Mode Settings, Stereo 3D, Smooth Motion, Dithering and Trade Quality for Performance.

..............

Credit goes to Asmodian's madVR Options Explained, JRiver Media Center MADVR Expert Guide and madshi for most technical descriptions.

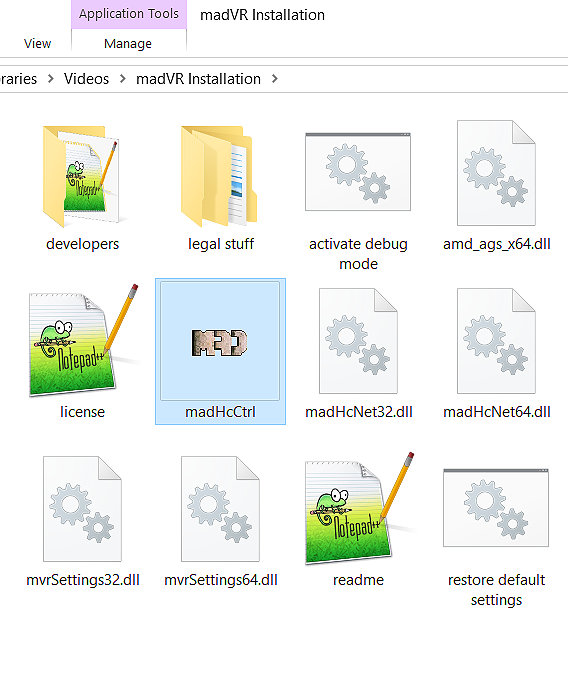

To access the control panel, open madHcCtrl in the installation folder:

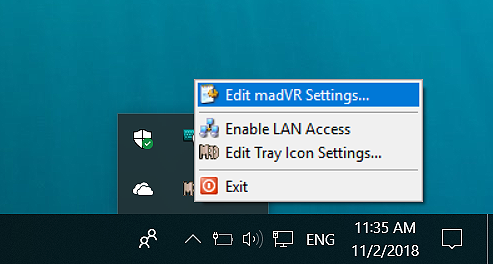

Double-click the tray icon or select Edit madVR Settings...

During Video Playback:

Ctrl + S opens the control panel. I suggest mapping this shortcut to your media remote.

..............

Resource Use of Each Setting

madVR can be very demanding on most graphics cards. Accordingly, each setting is ranked based on the amount of processing resources consumed: Minimum, Low, Medium, High and Maximum. Users of integrated graphics cards should not combine too many features labelled Medium and will be unable to use features labelled High or Maximum without performance problems.

This performance scale only relates to processing features requiring use of the GPU.

..............

GPU Overclocking

Overclocking the GPU with a utility such as MSI Afterburner can improve the performance of madVR. Increasing the memory clock speed alone is a simple adjustment that is often beneficial in lowering rendering times. Most overclocking utilities also offer the ability to create custom fan curves to reduce fan noise.

..............

Video Drivers

Most issues with madVR can be traced to changes to video drivers (e.g., broken HDR passthrough, playback stutter, 10-bit output support, color tints, etc.). Those only using an HTPC for video playback do not require frequent driver upgrades or updates. The majority of basic features such as HDR passthrough will work for many years with older drivers, and frequent driver releases are mainly intended to improve video game performance, not video playback performance. As such, users of HTPCs and madVR are advised to find a stable video driver that serves their needs and stick with it. It is easy to disable the automatic driver updates that coincide with Windows updates, and Intel, AMD and Nvidia all provide download links to installers for legacy drivers that can be kept in case the video drivers need to be reinstalled.

..............

Image gallery of madVR image processing settings

..............

Summary of the rendering process:

Source

1. DEVICES

- Identification

- Properties

- Calibration

- Display Modes

- Color & Gamma

- HDR

- Screen Config

Devices contains settings necessary to describe the capabilities of your display, including: color space, bit depth, 3D support, calibration, display modes, HDR support and screen type.

device name

Customizable device name. The default name is taken from the device's EDID (Extended Display Identification Data).

device type

The device type is only important when using a Digital Projector or a Receiver, Processor or Switch. If Digital Projector is selected, a new screen config section becomes available under devices.

Identification

The identification tab displays a summary of the EDID (Extended Display Identification Data) that identifies each connected display device and outlines its playback capabilities.

Before continuing on, it can be helpful to have a refresher on basic video terminology. These two sections are optional references:

Common Video Source Specifications & Definitions

Reading & Understanding Display Calibration Charts

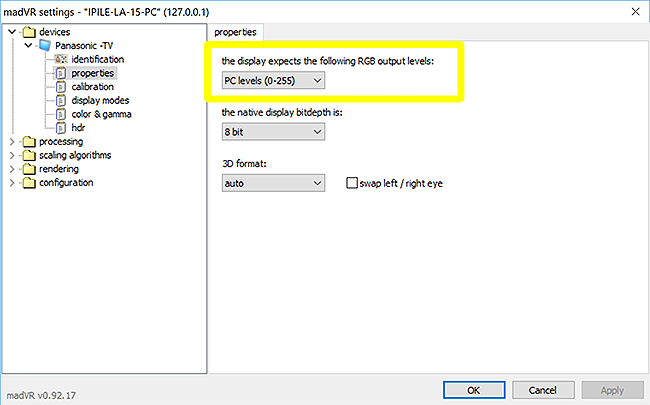

Properties – RGB Output Levels

Step one is to configure video output levels, so black and white are shown correctly.

What Are Video Levels?

PC and consumer video use different video levels. At 8-bits, video levels will be either full range RGB 0-255 (PC) or limited range RGB 16-235 (Video). Reference black starts at 0 (PC) or 16 (Video), but 16-235 video content is visually identical when displayed. The ideal output path maintains the same video levels from the media player to the display without any unwanted video levels or color space conversions. What the display does with this input is another matter...as long as black and white are the same as when they left the media player, you can't ask for much more.

Note: The RGB Output levels checkboxes in LAV Video will not impact these conversions.

Option 1:

If you just connect an HDMI cable from PC to TV, chances are you'll end up with a signal path like this:

(madVR) PC levels (0-255) -> (GPU) Limited Range RGB 16-235 -> (Display) Output as RGB 16-235

madVR expands the 16-235 source to full range RGB and it is converted back to 16-235 by the graphics card. Expanding the source prevents the GPU from clipping the levels when outputting 16-235. Both videos and the desktop will look accurate. However, it is possible to introduce banding if the GPU fails to use dithering when compressing 0-255 to 16-235. The range is converted twice: by madVR and the GPU.

This option isn’t recommended because of the range compression by the GPU and should only be used if no other suitable option is possible.

If your graphics card doesn't allow for a full range setting (like many Intel iGPUs or older Nvidia cards), then this may be your only choice. If so, it may be worth running madLevelsTweaker.exe in the madVR installation folder to see if you can force full range output from the GPU.
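The risk described for Option 1 can be shown with a little arithmetic. This is only a rough sketch that assumes plain 8-bit rounding with no dithering (the function names are made up for illustration and say nothing about how any particular GPU driver actually performs the compression):

```python
def expand_to_full(v):
    """Map limited-range 16-235 to full-range 0-255 (the expansion madVR performs in Option 1)."""
    return min(255, max(0, round((v - 16) * 255 / 219)))

def compress_to_limited(v):
    """Map full-range 0-255 back to 16-235 with plain rounding and no dithering (assumed GPU behaviour)."""
    return round(v * 219 / 255 + 16)

# Every 8-bit full-range code the renderer might output after processing.
full_codes = range(256)
limited_codes = {compress_to_limited(v) for v in full_codes}
merged = len(full_codes) - len(limited_codes)

print(len(limited_codes), "distinct output codes,", merged, "input codes merged with a neighbour")
```

The 256 full-range codes only have 220 limited-range codes to land on, so some neighbouring shades collapse into one. Without dithering at this step, those merged steps are where banding can appear.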

Option 2:

If your PC is a dedicated HTPC, you might consider this approach:

(madVR) TV levels (16-235) -> (media front-end) Use limited color range (16-235) -> (GPU) Full Range RGB 0-255 -> (Display) Output as RGB 16-235

In this configuration, the signal remains 16-235 all the way to the display. A GPU set to 0-255 will passthrough all output from the media player without clipping the levels. If a media front-end is used, it should also be configured to use 16-235 to match the media player.

When set to 16-235, madVR does not clip Blacker-than-Black (0-15) and Whiter-than-White (236-255) if the source video includes these values. Black and white clipping patterns should be used to adjust brightness and contrast until 16-235 are the only visible bars.

This can be the best option for GPUs that output full range to a display that only accepts limited range RGB. Banding should not occur as madVR handles the only conversion (YCbCr -> RGB) and the GPU is bypassed. However, the desktop and other applications will output incorrect levels. PC applications render black at 0,0,0, while the display expects 16,16,16. The result is crushed blacks. This sacrifice improves the quality of the video player at the expense of all other computing.

Option 3:

A final option involves setting all sources to full range — identical to a traditional PC and computer monitor:

(madVR) PC levels (0-255) -> (GPU) Full Range RGB 0-255 -> (Display) Output as RGB 0-255

madVR expands 16-235 to 0-255 and it is presented in full range by the display. The display's HDMI black level must be toggled to display full range RGB (Set to High or Normal (0-255) vs. Low (16-235)).

When expanding 16-235 to 0-255, madVR clips both 0-15 and 236-255, as reference black, 16, is mapped to 0, and reference white, 235, is mapped to 255. Clipping both BtB and WtW is acceptable as long as a correct grayscale is maintained. The use of black and white clipping patterns can confirm video levels (16-235) are displayed accurately.

This is usually the optimal setting for those with displays and GPUs supporting full range output (the majority of users). Both videos and the desktop will look correct and banding is unlikely as madVR handles the only required conversion. A PC must already convert from a video color space (YCbCr) to a PC color space (RGB), so the conversion of 16-235 to 0-255 is simply done with a YCbCr -> RGB conversion matrix that converts directly from limited range YCbCr to full range RGB. No additional scaling step is necessary.
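As a rough illustration of that single-step conversion, here is a sketch using the standard BT.709 luma coefficients for an 8-bit source. The function name is hypothetical and this is not madVR's actual code (madVR works at a much higher precision and dithers the result); it only shows how the range expansion is folded into the YCbCr -> RGB math:

```python
KR, KB = 0.2126, 0.0722      # BT.709 luma coefficients
KG = 1.0 - KR - KB

def ycbcr_limited_to_rgb_full(y, cb, cr):
    """Convert 8-bit limited-range YCbCr directly to 8-bit full-range RGB."""
    yn = (y - 16) / 219      # reference black 16 -> 0.0, reference white 235 -> 1.0
    pb = (cb - 128) / 224    # chroma centred on 128, nominal range -0.5..0.5
    pr = (cr - 128) / 224
    r = yn + 2 * (1 - KR) * pr
    b = yn + 2 * (1 - KB) * pb
    g = (yn - KR * r - KB * b) / KG
    # Scale to 0-255 and clip anything below black or above white.
    return tuple(min(255, max(0, round(c * 255))) for c in (r, g, b))

print(ycbcr_limited_to_rgb_full(16, 128, 128))    # reference black
print(ycbcr_limited_to_rgb_full(235, 128, 128))   # reference white
print(ycbcr_limited_to_rgb_full(8, 128, 128))     # blacker-than-black is clipped
```

Reference black and white land on 0 and 255, and anything outside 16-235 is clipped, which matches the BtB/WtW behavior described above.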

Recommended Use (RGB output levels):

Banding is prevented when the GPU passes through all sources untouched, which occurs when it is set to RGB 0-255. Both Option 2 and Option 3 configure the GPU to 0-255. Option 3 should be considered the default option because it maintains correct output levels for all PC applications, while Option 2 only benefits video playback.

To confirm accurate video levels, it is a good idea to use some test patterns. This may require some adjustment to the display's brightness and contrast controls to eliminate any black crush or white clipping. For testing, start with these AVS Forum Black and White Clipping Patterns (under Basic Settings) to confirm the display of 16-25 and 230-235, and move on to these videos that can be used to fine-tune "black 16" and "white 235."

Discussion from madshi on RGB vs. YCbCr

How to Configure a Display and GPU for a HTPC

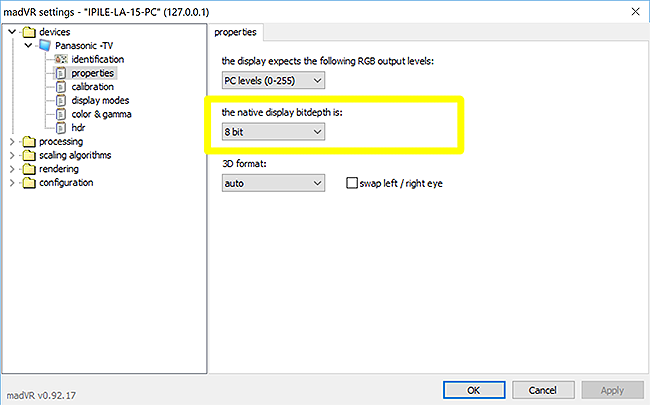

Properties – Native Display Bit Depth

The native display bit depth is the value output from madVR to the GPU. Internal math in madVR is calculated at 32-bits and the final result is dithered to the output bit depth selected here.

What Is a Bit Depth?

Every display panel is manufactured to a specific bit depth. Most displays are either 8-bit or 10-bit. Nearly all 1080p displays are 8-bit and nearly all UHD displays are 10-bit. This doesn't necessarily mean the display panel is native 8-bit or 10-bit, but that it is capable of displaying detail in gradients up to that bit depth. For example, many current UHD displays are advertised as 10-bit panels, but are actually 8-bit panels that can quickly flash two adjacent colors together to create the illusion of a 10-bit color value (known as Frame Rate Control or FRC temporal dithering — typical of many VA 120 Hz LED TVs). The odd high-end, 1080p computer monitor, TV or projector can also display 10-bit color values, either natively or via FRC. So the display either represents color detail at 8-bits or 10-bits and converts all sources to match this native bit depth.
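The FRC idea can be sketched in a few lines. This is a simplified model that assumes a fixed four-frame cycle (10-bit values are four times finer than 8-bit ones); real panels use their own, usually undisclosed, patterns, and the function name is only for illustration:

```python
FRAMES = 4  # assumed cycle length: 10-bit has 4x the steps of 8-bit

def frc_sequence(value10):
    """Return four 8-bit values whose average approximates a 10-bit value."""
    base, remainder = divmod(value10, FRAMES)
    # Show the next-higher 8-bit shade on 'remainder' of the four frames.
    # The clamp means the top three 10-bit codes cannot be reproduced exactly.
    return [min(255, base + (1 if i < remainder else 0)) for i in range(FRAMES)]

sequence = frc_sequence(513)            # 513 / 4 = 128.25 in 8-bit terms
print(sequence, sum(sequence) / FRAMES)
```

The eye averages the rapidly alternating shades, so one frame of 129 and three frames of 128 are perceived as the in-between value 128.25.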

If you want to determine if your display can natively represent a 10-bit gradient, try using this test protocol along with this gradient test image and these videos. Omit the instructions to use fullscreen exclusive mode for the test if using Windows 10.

10-bit output requires that the following be checked in general settings:

- use Direct3D 11 for presentation (Windows 7 and newer)

Other required options:

- Windows 7/8: enable automatic fullscreen exclusive mode;

- Windows 10: 10-bit output is possible in both windowed mode and fullscreen exclusive mode.

If there are no settings conflicts, the output bit depth should be set to match the display's native bit depth (either 8-bit or 10-bit). Feeding a 10-bit or 12-bit input to an 8-bit display without FRC temporal dithering will lead to one of two outcomes: low-quality dithering noise or color banding. If unsure, testing both 8-bits and 10-bits with the gradient tests linked above, with and without dithering enabled, can assist in determining if both look the same or one is superior.

Some factors that may force you to choose 8-bit output:

- You are unable to find any official specs for the display’s native bit depth;

- The best option for 4K UHD 60 Hz output is 8-bit RGB due to the bandwidth limitations of HDMI 2.0;

- You have created a custom resolution in madVR that has forced 8-bit output;

- Display mode switching to 12-bits at 23-24 Hz is not working correctly with certain Nvidia video drivers;

- The display has poor processing and creates banding with a 10/12-bit input even though it is a native 10-bit panel.
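The HDMI 2.0 limitation in the list above comes down to simple arithmetic. The sketch below assumes the standard CTA-861 total timings for 3840 x 2160 (4400 x 2250 at 60 Hz, 5500 x 2250 at 24 Hz) and the 600 MHz maximum TMDS clock of HDMI 2.0; the function names are made up for illustration:

```python
HDMI_2_0_MAX_TMDS_MHZ = 600.0

# Total timings (active picture plus blanking) for 3840x2160, per CTA-861.
TIMINGS = {60: (4400, 2250), 24: (5500, 2250)}

def tmds_clock_mhz(refresh_hz, bits_per_channel):
    """TMDS clock needed for RGB / 4:4:4 output at the given refresh rate and bit depth."""
    h_total, v_total = TIMINGS[refresh_hz]
    pixel_clock_mhz = h_total * v_total * refresh_hz / 1e6
    # Deep color raises the clock in proportion to the bit depth (8-bit is the baseline).
    return pixel_clock_mhz * bits_per_channel / 8

def fits_hdmi_2_0(refresh_hz, bits_per_channel):
    return tmds_clock_mhz(refresh_hz, bits_per_channel) <= HDMI_2_0_MAX_TMDS_MHZ

for hz in (60, 24):
    for bits in (8, 10, 12):
        print(f"4K {hz} Hz RGB {bits}-bit: {tmds_clock_mhz(hz, bits):.1f} MHz,",
              "OK" if fits_hdmi_2_0(hz, bits) else "too much for HDMI 2.0")
```

At 60 Hz only 8-bit RGB fits under the limit, while at 23-24 Hz there is room for 12-bit RGB. That is why display mode switching between 8-bit @ 60 Hz and 12-bit @ 23-24 Hz comes up at all.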

So is it a good idea to output a 10-bit source at 8-bits?

The answer to this depends on an understanding of madVR's processing.

A bit depth represents a fixed scale of visible luminance steps. High bit depths are used in image processing to create sources free of banding without having to manipulate the source steps. This ensures content survives the capture, mastering and compression processes without introducing any color banding into the SOURCE VIDEO.

madVR takes the 10-bit YCbCr source values and converts them to 32-bit floating point RGB data. These additional bits are not invented but available to assist in rounding from one color space to another. This high bit depth is maintained until the final processing result, which is dithered at the highest quality possible. So the end result is a 10-bit source upconverted to 32-bits and then downconverted for display.

madVR is designed to carry the information from the YCbCr to RGB conversion and its own processing through to lower bit depths, so it should never introduce banding at any stage because the data is kept all the way to the final output. Whether the result is free of banding still depends on the quality of the source and whether it had banding to begin with.

Color gamuts are fixed at the top and bottom. Manipulating the source bit depth will not add any new colors. You simply get more shades or steps for each color when the bit depth is increased; everything in between becomes smoother, not more colorful.
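To put numbers on "more shades or steps", here is a quick calculation of how many steps per channel and total RGB shades each bit depth provides (the helper name is just for illustration):

```python
def shade_counts(bits_per_channel):
    """Return (steps per channel, total RGB shades) for a given bit depth."""
    steps = 2 ** bits_per_channel
    return steps, steps ** 3

for bits in (2, 8, 10):
    steps, shades = shade_counts(bits)
    print(f"{bits}-bit: {steps} steps per channel, {shades:,} shades")
```

The brightest and darkest values stay where they are; only the number of steps in between changes.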

madVR can represent any output bit depth with smooth gradients by adding invisible noise to the image before output called dithering. Dithering can make most output bit depths appear nearly indistinguishable from each other by using the information from the higher source bit depth to add missing color steps to lower bit depths. Dithering replicates any missing color steps by combining available colors to approximate the missing color values. This creates a random or repetitive offset pattern at places where banding would otherwise occur to create smooth transitions between every color shade. The higher the output bit depth, the more invisible any noise created by dithering and the dithering pattern itself becomes. By the time the bit depth is increased to 8-bits, the dithering pattern becomes so small that 8-bit color detail and 10-bit (or higher) color detail will appear virtually identical to the human eye. This is why many 8-bit FRC display panels still exist in the display market that employ high-quality dithering to display 10-bit videos.
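A minimal sketch of the principle, assuming simple random dithering (madVR's own ordered and error diffusion algorithms are more sophisticated and are not reproduced here; the function names are hypothetical):

```python
import math
import random

def quantize_plain(value10):
    """Reduce a 10-bit value to 8-bit by rounding: the fractional part is lost."""
    return min(255, round(value10 / 4))

def quantize_dithered(value10, rng):
    """Add up to one 8-bit step of random noise before truncating, so the
    fractional part survives as the probability of landing on the higher step."""
    return min(255, math.floor(value10 / 4 + rng.random()))

rng = random.Random(0)        # fixed seed so the example is repeatable
value10 = 513                 # equals 128.25 on the 8-bit scale
samples = [quantize_dithered(value10, rng) for _ in range(20000)]
mean = sum(samples) / len(samples)

print("plain rounding:", quantize_plain(value10))
print("dithered values used:", sorted(set(samples)), "average:", round(mean, 2))
```

Plain rounding turns every 513 into 128, so neighbouring 10-bit values merge into bands. With dithering, the output flips between 128 and 129 in the right proportion, and averaged over an area (or over time) the original 128.25 is preserved.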

There is an argument that when capturing something with a digital camera there is no value in using 10-bits if the noise captured by the camera is not below a certain threshold (the signal-to-noise ratio). If it is above this threshold, then the dithering added at 8-bits will be indiscernible from the noise captured at 10-bits. That is really what you are measuring when it comes to bit depths as high as 8-bits: detectable dithering noise. If dithering noise is not detectable, then an 8-bit panel is an acceptable way to show 10-bit content. Dithering noise can be particularly hard to detect at 4K UHD resolutions, especially using madVR's low-noise dithering algorithms.

Take a look at these images that show the impact of dithering to a bit depth as low as 2-bits:

Dithering - 8-bits (16.8 million color shades) to 2-bits (64 color shades):

2 bit Ordered Dithering

2 bit No Dithering

*Best viewed at 100% browser zoom for the dithering to look most accurate.

Seems remarkable? As the bit depth is increased, the shading created by dithering becomes more and more seamless to the point where the output bit depth becomes somewhat unimportant as gradients will always remain smooth without introducing any color banding not found in the source values.

Dithering is designed to spread out and erase any quantization (digital rounding) errors, so it is not designed to remove banding from the source video. Rather, if the source is free of banding, that information can always be maintained faithfully at lower display bit depths with dithering.

Recommended Use (native display bitdepth):

Those with native 8-bit displays should stick with 8-bit output, as the additional detail of higher bit depths cannot be represented by the display panel and will only result in added image noise. On the other hand, those with 10-bit displays have a choice between either 8-bit or 10-bit output, with each providing nearly identical image quality due to the use of madVR's excellent dithering algorithms. The high bit depths used for image processing will prevent any loss of color detail from the source video to bit depths of 8-10 bits when the final high bit depth processing result is dithered to the output bit depth (with any remaining differences masked by the blending of colors created by these higher bit depths).

While 10-bit output could be considered the default option for a native 10-bit display panel, simply setting madVR and the GPU to 8-bit RGB can greatly simplify HTPC configuration for HDMI 2.0 devices. There are some common issues that can be encountered when the GPU is set to output 10 or 12-bits. For one, display mode switching from 8-bit RGB @ 60 Hz to 12-bit RGB @ 23-24 Hz is finicky with Nvidia video drivers and sometimes the video driver won't switch correctly from 8-bits to 12-bits. HDMI 2.0 limits 60 Hz 4K UHD output to 8-bit RGB, and RGB output is always preferred over YCbCr on a PC. Two, Nvidia's API for custom resolutions is locked to 8-bits, so Nvidia users needing a custom resolution must use 8-bits. Three, certain GPU drivers are known to create color banding when set to output 10 or 12-bits and 8-bit output can avoid any banding. In each of these cases, 8-bit output would be preferred. Those using madVR for the first time may not be accustomed to a video renderer that uses dithering, but it should be stated again: both 8-bit and 10-bit output offer virtually indistinguishable visual quality as a result of high-quality dithering added to all bit depths.

When the bit depth is set below the display's native bit depth, the only visual change occurs in the noise floor of the image, and this subtlety can be invisible. Setting madVR to 8-bits might even be beneficial for some 10-bit displays (like some LG OLEDs). Providing the display with a good 8-bits as opposed to 10 or 12-bits can sometimes make for less work for the display and a reduced chance of introducing quantization errors. The odd UHD display may struggle with high bit depths due to the use of low bit depths for its internal video processing, not applying dithering correctly when converting 12-bits to 10-bits or some other unknown display deficiency. This is not meant to discourage anyone from choosing 10-bit output; the highest bit depth should produce the highest perceived quality, but your eyes are often the best judge of what bit depth works best for the display.

Regardless of output bit depth, it is advised to check if the GPU or display processing is adding any color banding to the image by using a good high-bit depth gradient test image (such as those linked above). Other good tests for color banding include scenes with open blue skies and animated films with large patches of blended color shades.

Determining Display-Panel Bit Depth

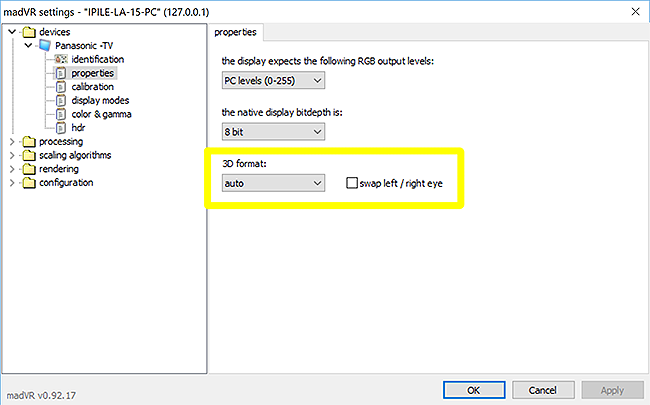

Properties – 3D Format

3D support in madVR is limited to MPEG4-MVC 3D Blu-ray. MVC 3D mkvs can be created from frame packed 3D Blu-rays with software such as MakeMKV.

The input 3D format must be frame packed MPEG4-MVC. The output format depends on the operating system, HDMI spec and display type. 3D formats with the left and right images on the same frame will be sent out as 2D images.

3D playback requires four ingredients:

- enable stereo 3d playback is checked in the madVR control panel (rendering -> stereo 3d);

- A 3D video decoder is used (e.g., LAV Filters 0.68+ with 3D software decoder installation checked);

- A 3D-capable display is used (with its 3D mode enabled);

- Windows 8.1 or Windows 10 is used as the operating system.

In addition, it may be necessary to check enable automatic fullscreen exclusive mode in general settings if MPEG4-MVC videos play in 2D rather than 3D.

Stereoscopic 3D is designed to capture separate images of the same object from slightly different angles to create an image for the left eye and right eye. The brain is able to combine the two images into one, which leads to a sense of enhanced depth.

What Is the Difference Between an Active 3D TV and Passive 3D TV?

auto

The default output format is frame packed 3D Blu-ray. The output is an extra-tall (1920 x 2205 - with padding) frame containing the left eye and right eye images stacked on top of each other at full resolution.

auto – (Windows 8+, GPU - HDMI 1.4+, Display - HDMI 1.4+): Receives the full resolution, frame packed output. On an active 3D display, each frame is split and shown sequentially. A passive 3D display interweaves the two images as a single image.

auto – (Windows 8+, GPU - HDMI 1.3, Display - HDMI 1.3): Receives a downconverted, half side-by-side format. On an active 3D display, each frame is split, upscaled and shown sequentially. A passive 3D display upscales the two images and then combines them as a single frame.

The above default behavior can be overridden by converting the frame packed source to any format that places the left eye and right eye images on the same frame. These 2D formats function without active GPU stereoscopic 3D and are compatible with all Windows versions and HDMI specifications.

Force 3D format below:

side-by-side

Side-by-side (SbS) stacks the left eye and right eye images horizontally. madVR outputs half SbS, where each eye is stored at half its horizontal resolution (960 x 1080) to fit on one 2D frame. The display splits each frame and scales each image back to its original resolution.

An active 3D display shows half SbS sequentially. Passive 3D displays will split the screen into odd and even horizontal lines. The left eye and right eye odd sections are combined. Then the left eye and right eye even sections are combined. This weaving creates the perception of two separate images.

top-and-bottom

Top-and-bottom (TaB) stacks the left eye and right eye images vertically. madVR outputs half TaB, where each eye is stored at half its vertical resolution (1920 x 540) to fit on one 2D frame. The display splits each frame and scales each image back to its original resolution.

An active 3D display shows half TaB sequentially. Passive 3D displays will split the screen into odd and even horizontal lines. The left eye and right eye odd sections are combined. Then the left eye and right eye even sections are combined. This weaving creates the perception of two separate images.
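The frame sizes quoted for frame packed, half SbS and half TaB output can be worked out as follows. The 45 lines of padding are simply what is left of the 2205-line frame packed height after two 1080-line images; the function names are hypothetical:

```python
def frame_packed(width=1920, height=1080, padding=45):
    """Both eyes at full resolution, stacked vertically with padding in between."""
    return width, 2 * height + padding

def half_side_by_side(width=1920, height=1080):
    """Per-eye resolution when both eyes share one 2D frame horizontally."""
    return width // 2, height

def half_top_and_bottom(width=1920, height=1080):
    """Per-eye resolution when both eyes share one 2D frame vertically."""
    return width, height // 2

print("frame packed output:", frame_packed())
print("half SbS per eye:   ", half_side_by_side())
print("half TaB per eye:   ", half_top_and_bottom())
```

Only frame packing keeps the full 1920 x 1080 for each eye. Both half formats give up half of the resolution in one direction, which the display then scales back up.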

line alternative

Line alternative is an interlaced 3D format designed for passive 3D displays. Each frame contains a left odd field and right odd field. The next frame contains a left even field and right even field. 3D glasses make the appropriate lines visible for the left eye or right eye. For line alternative to function, the display must be set to its native resolution without any visible over or underscan.

column alternative

Column alternative is another interlaced 3D format similar to line alternative, except the frames are matched vertically as opposed to horizontally. This is another passive 3D format. One frame contains a left odd field and right odd field. The next frame contains a left even field and right even field. 3D glasses make the appropriate columns visible for the left or right eye. The display must be set to its native resolution without any visible over or underscan.

Further Detail on the Various 3D Formats

swap left / right eye

Swaps the order in which frames are displayed. This can correct the behavior of some displays that show the left eye and right eye images in the incorrect order. Incorrect eye order can be fixed for all formats, including line and column alternative. Many displays can also swap the eye order in their picture menus.

3D glasses must be synchronized with the display before playback. If the image appears blurry (particularly, the background elements), your 3D glasses are likely not enabled.

Recommended Use (3D format):

AMD and Intel users can safely set 3D format to auto. When functioning correctly, stereoscopic 3D should trigger in the GPU control panel at playback start and the display's 3D mode should take over from there. Nvidia, on the other hand, no longer offers support for MVC 3D in its official drivers. Nvidia's official support for 3D playback ended with driver v425.31 (April 11, 2019) and only the 418 series drivers are to receive legacy updates and patches to keep MVC 3D operable with current Windows builds (recommended: v385.28 or v418.91). Nvidia 3D Vision, which enables stereoscopic 3D, is incompatible with the newest drivers and manual installation of 3D Vision will not provide any added functionality.

Manual Workaround to Install 3D Vision with Recent Nvidia Drivers

Users of Nvidia drivers after v425.31 must convert MVC 3D to a two-dimensional 3D format (where both 3D images are reduced in resolution and combined into a single frame) using any of the supported 3D formats listed under 3D format. Then 3D content can be passed through to the display without any need for active GPU stereoscopic 3D. The display's user manual should be consulted for a list of supported 3D formats.

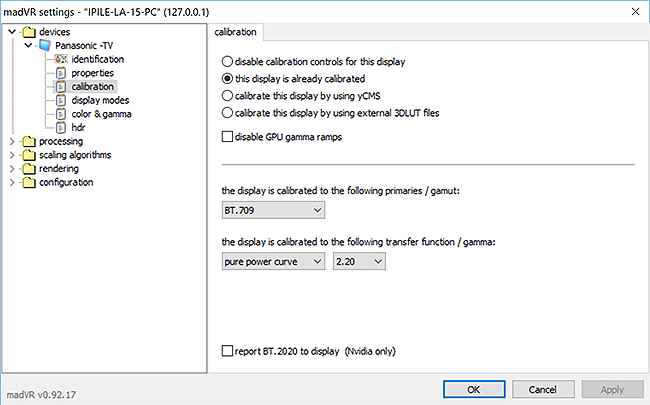

Calibration

When doing any kind of gamut mapping or transfer function conversion, madVR uses the values in calibration as the target. This requires you know your display's calibrated color gamut and gamma curve and attach any available yCMS or 3D LUT calibration files.

What Is a Color Gamut?

Most 4K UHD displays have separate display modes for HDR and SDR. Calibration settings in madVR only apply to the display's default SDR mode. BT.2020 HDR content is passed through unless a special setting in hdr is enabled such as converting HDR to SDR.

disable calibration controls for this display

Turns off calibration controls for gamut and transfer function conversions.

If you purchased your display and went through only basic calibration without any knowledge of its calibrated gamma or color gamut, this is the safest choice.

Turning off calibration controls defaults to:

- primaries / gamut: BT.709

- transfer function / gamma: pure power curve 2.20

this display is already calibrated

This enables calibration options used to map content with a different gamut than the calibrated display color profile. For example, a BT.2020 source, such as a UHD Blu-ray, may need to be mapped to the BT.709 color space of an SDR display, or a BT.709 source could be mapped to a UHD display calibrated to BT.2020. Displays with an Automatic color space setting can select the appropriate color profile to match the source, but all other displays require that the input gamut match the calibrated gamut to track the color coordinates correctly and prevent any over or undersaturation. madVR should convert any source gamut that doesn’t match the calibrated gamut.
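For the curious, the core of a BT.2020 to BT.709 gamut conversion is a 3 x 3 matrix applied to linear light RGB (i.e., after the gamma curve has been removed). The sketch below uses the commonly published matrix values rounded to four decimals and a plain clip for out-of-gamut colors; madVR's own gamut mapping is not documented here and is likely smarter than a hard clip:

```python
# Linear-light BT.2020 RGB -> BT.709 RGB (published values, rounded to 4 decimals).
BT2020_TO_BT709 = (
    ( 1.6605, -0.5876, -0.0728),
    (-0.1246,  1.1329, -0.0083),
    (-0.0182, -0.1006,  1.1187),
)

def convert_linear(rgb2020):
    """Re-express a linear BT.2020 color using BT.709 primaries."""
    return tuple(sum(m * c for m, c in zip(row, rgb2020)) for row in BT2020_TO_BT709)

def clip(rgb):
    """Hard clip: the simplest way to handle colors BT.709 cannot show."""
    return tuple(min(1.0, max(0.0, c)) for c in rgb)

white = convert_linear((1.0, 1.0, 1.0))
red = convert_linear((1.0, 0.0, 0.0))
print("white:", [round(c, 3) for c in white])
print("BT.2020 red:", [round(c, 3) for c in red], "-> clipped:", clip(red))
```

White stays white because both gamuts share the same white point, while a fully saturated BT.2020 red produces values above 1 and below 0, meaning a BT.709 display cannot reproduce it. If the conversion is skipped entirely, the display interprets the BT.2020 values with the wrong primaries and colors look undersaturated.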

If you want to use this feature but are unsure of how your display is calibrated, try the following values that are most common.

1080p Display:

- primaries / gamut: BT.709

- transfer function / gamma: pure power curve 2.20

4K UHD Display:

- primaries / gamut: BT.709 (Auto/Normal) / BT.2020 (Wide/Extended/Native)

- transfer function / gamma: pure power curve 2.20

Note: transfer function / gamma is only used if enable gamma processing is checked under color & gamma. Gamma processing is unnecessary as madVR will always use the same gamma as the encoded source mastering monitor. The transfer function is only applied by default for the conversion of HDR to SDR because madVR must convert a PQ HDR source to match the known calibrated SDR gamma of the display.
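As a quick reference, this is all a pure power curve of 2.20 means: the display raises the normalized video signal (0.0 to 1.0) to the power of 2.20 to get relative light output. The names below are only for illustration:

```python
GAMMA = 2.20  # pure power curve 2.20

def signal_to_light(signal):
    """Relative light output of the display for a normalized signal value."""
    return signal ** GAMMA

def light_to_signal(light):
    """Inverse: the signal value needed to produce a given relative light output."""
    return light ** (1 / GAMMA)

for signal in (0.25, 0.5, 0.75, 1.0):
    print(f"signal {signal:.2f} -> light {signal_to_light(signal):.4f}")
```

A 50% signal produces only about 22% of peak light output, which is why a mismatch between the assumed and actual gamma mostly shows up as shadows and midtones that are too dark or too bright.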

HDR to SDR Instructions: Mapping Wide Color Gamuts | Choosing a Gamma Curve

calibrate this display by using yCMS

Medium Processing

yCMS and 3DLUT files are forms of color management that use the GPU for gamut and transfer function correction. yCMS is the simpler of the two, only requiring a few measurements with a colorimeter and appropriate software. This is a lengthy topic beyond the scope of this guide.

yCMS files can be created with use of HCFR. If you are going this route, it may be better to use the more accurate 3D LUT.

calibrate this display by using external 3DLUT files

Medium - High Processing

Display calibration software such as ArgyllCMS/DisplayCAL, CalMAN or LightSpace CMS is used along with madVR to create up to a 256 x 256 x 256 3D LUT.

A 3D LUT (3D lookup table) is a fast and automated form of display calibration that uses the GPU to produce corrected color values for sophisticated grayscale, transfer function and primary color calibration.
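Conceptually, a 3D LUT is a cube of pre-computed output colors indexed by the input R, G and B values, with colors that fall between grid points blended from their neighbours. Below is a tiny sketch using trilinear interpolation on a 5 x 5 x 5 cube. It only illustrates the idea; the helper names are made up and nothing here reflects the .3dlut file format or how madVR samples the table on the GPU:

```python
def make_identity_lut(size):
    """Build a size^3 table in which every grid point maps to itself (no correction)."""
    step = 1.0 / (size - 1)
    return [[[(r * step, g * step, b * step) for b in range(size)]
             for g in range(size)] for r in range(size)]

def lookup(lut, rgb):
    """Trilinear interpolation: blend the 8 grid points surrounding the input color."""
    size = len(lut)
    index, frac = [], []
    for c in rgb:
        pos = min(max(c, 0.0), 1.0) * (size - 1)
        i = min(int(pos), size - 2)      # lower corner of the enclosing cell
        index.append(i)
        frac.append(pos - i)             # how far into the cell (0.0 to 1.0)
    out = [0.0, 0.0, 0.0]
    for dr in (0, 1):
        for dg in (0, 1):
            for db in (0, 1):
                weight = ((frac[0] if dr else 1 - frac[0]) *
                          (frac[1] if dg else 1 - frac[1]) *
                          (frac[2] if db else 1 - frac[2]))
                node = lut[index[0] + dr][index[1] + dg][index[2] + db]
                for k in range(3):
                    out[k] += weight * node[k]
    return tuple(out)

lut = make_identity_lut(5)
print(lookup(lut, (0.3, 0.6, 0.9)))      # an identity LUT returns the input (within rounding)
```

Calibration software fills the grid points with corrected colors instead of identity values, so every pixel is corrected by a single table lookup no matter how complex the underlying corrections are.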

What Is a 3D LUT?

Display calibration software, a colorimeter and a set of test patterns are used to create 3D LUTs. madTPG.exe (madVR Test Pattern Generator) found in the madVR installation folder provides all the necessary patterns. Using hundreds or thousands of color patches, the calibration software assesses the accuracy of the display before calibration, calculates necessary corrections and assesses the performance of the display with those corrections enabled. An accurate calibration can be achieved in as little as 10 minutes.

Manni's JVC RS2000 Before Calibration | Manni's JVC RS2000 After a 10 Minute 3D LUT Calibration

Source

Display calibration software will generate .3dlut files that can be attached from madVR as the calibration profile for the monitor. Active 3D LUTs are indicated in the madVR OSD. A special split screen mode (Ctrl + Alt + Shift + 3) is available to show the unprofiled monitor on one side of the screen and the corrections provided by the 3D LUT on the other.

Multiple 3D LUTs can be used to correct the individual color space of each source, or a single 3D LUT that matches the display's native color gamut can be used to color correct all sources. HDR 3D LUTs are added from the hdr section.

Common Display Color Gamuts: BT.709, DCI-P3 and BT.2020.

Instructions on how to generate and use 3D LUT files with madVR are found below:

ArgyllCMS | CalMAN | LightSpace CMS

disable GPU gamma ramps

Disables the default GPU gamma LUT. This will return to its default when madVR is closed. Using a windowed overlay means this setting only impacts madVR. 3D LUTs typically include calibration curves that ignore the GPU hardware gamma ramps, so this setting is unnecessary and will have no effect.

Enable if you have installed an ICC color profile in Windows Color Management. madVR cannot make use of ICC profiles.

report BT.2020 to display (Nvidia only)

Allows the gamut to be flagged as BT.2020 when outputting in DCI-P3. Can be useful in situations where a display or video processor requires or expects a BT.2020 container, but DCI-P3 output is preferred.

Recommended Use (calibration):

Even if you are uncertain of the display's color gamut and gamma setting, it is worth choosing this display is already calibrated and guessing the display's SDR calibration. You then have quick access to madVR's calibration options in the future if you need to adjust something. This is especially true if you are playing any HDR content with tone map HDR using pixel shaders selected under hdr. Some adjustment of the gamma curve and/or color gamut from madVR are usually required to get the best results for both SDR and HDR.

Color calibrating a display with a 3D LUT file is one of madVR's most impactful features. There is no need to invest in costly PC software to create a 3D LUT. Free display calibration software such as DisplayCAL and ArgyllCMS are available that are supplemented with online help documentation and active support forums. Creating a 3D LUT is a much easier process than manual grayscale calibration with often superior results. A display calibrated with an accurate grayscale and gamma tracking benefits from more natural images with improved picture depth. 3D LUTs make this kind of pinpoint accurate display calibration accessible to anyone without any specialized training or knowledge of calibration beyond access to an accurate colorimeter.

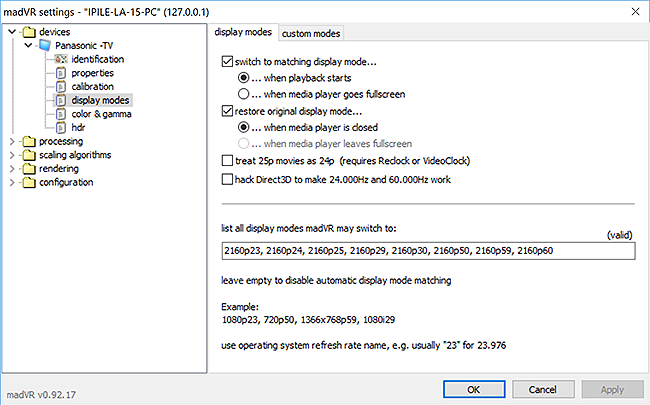

Display Modes

display modes matches the display refresh rate to the source frame rate. This ensures smooth playback by playing sources such as 23.976 frame per second video at a matching refresh rate or a multiple of the source frame rate (e.g., 23.976 Hz from the GPU, which a 120 Hz display can show by repeating each frame five times). Conversely, playing 23.976 fps content at 60 Hz presents a mismatch: the frame frequencies do not align, so frames are repeated unevenly by 3:2 pulldown, which creates motion judder. The goal of display modes is to eliminate motion judder caused by mismatched frame rates.
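The cadence behind 3:2 pulldown can be worked out with a few lines of arithmetic. The sketch below counts how many display refreshes each source frame is held for; the helper names (repeat_pattern, has_judder) are made up for this example, and it models an idealized display with no clock drift.

```python
from fractions import Fraction


def repeat_pattern(source_fps, refresh_hz, frames=8):
    """Return how many refreshes each of the first `frames` source frames is shown for."""
    ratio = Fraction(refresh_hz) / Fraction(source_fps)
    return [int((n + 1) * ratio // 1) - int(n * ratio // 1) for n in range(frames)]


def has_judder(source_fps, refresh_hz):
    """Judder appears when frames are held for unequal numbers of refreshes."""
    return len(set(repeat_pattern(source_fps, refresh_hz))) > 1


film = Fraction(24000, 1001)  # the exact rate usually written as 23.976 fps

print(repeat_pattern(film, Fraction(60000, 1001), 4))   # uneven 2, 3, 2, 3 cadence
print(repeat_pattern(film, Fraction(120000, 1001), 4))  # even 5, 5, 5, 5 cadence
print(has_judder(film, film))                           # matched rate: no judder
```

At 59.94 Hz every other frame stays on screen 50% longer than its neighbor, which is what the eye picks up as judder in slow pans. At a matching rate or an exact multiple, every frame is held for the same time.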

What Is 24p Judder?

Enter all display modes (refresh rates) supported by your display into the blank textbox. At the start of playback, madVR will switch the GPU and by extension the display to output modes that best match the source frame rate.

Available display refresh rates for the connected monitor can be found in Windows Settings:

- Right-click on the desktop and select Display settings;

- Click on Advanced display settings;

- Click on Display adapter properties;

- Select the Monitor tab;

- Screen refresh rate will display all compatible refresh rates for the monitor under the drop-down.

Ideally, a GPU and display should be capable of the most common video source refresh rates:

- 23.976 Hz

(23 Hz in Windows)

- 24 Hz

(24 Hz in Windows)

- 25 Hz

(25 Hz / 50 Hz in Windows)

- 29.97 Hz

(29 Hz / 59 Hz in Windows)

- 30 Hz

(30 Hz / 60 Hz in Windows)

- 50 Hz

(50 Hz in Windows)

- 59.94 Hz

(59 Hz in Windows)

- 60 Hz

(60 Hz in Windows)

madVR recognizes display modes by output resolution and refresh rate. You only need to output to one resolution for all content, which includes 1080p 3D videos, to ensure all sources are upscaled by madVR to the same native resolution of the display.

To cover all of the refresh rates above, eight entries are needed:

1080p Display: 1080p23, 1080p24, 1080p25, 1080p29, 1080p30, 1080p50, 1080p59, 1080p60

4K UHD Display: 2160p23, 2160p24, 2160p25, 2160p29, 2160p30, 2160p50, 2160p59, 2160p60
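To make the matching idea concrete, here is a small sketch that builds the eight entries for a resolution and picks the entry whose refresh rate is closest to a whole multiple of the source frame rate. The selection rule is an assumption for illustration only; madVR's actual mode-switching logic is not documented here and may weigh things differently.

```python
# Windows label -> actual refresh rate in Hz (from the list above).
WINDOWS_RATES = {
    23: 24000 / 1001, 24: 24.0, 25: 25.0, 29: 30000 / 1001,
    30: 30.0, 50: 50.0, 59: 60000 / 1001, 60: 60.0,
}


def build_entries(height):
    """Return entries such as '1080p23' for every rate in the table."""
    return [f"{height}p{label}" for label in WINDOWS_RATES]


def parse_mode(entry):
    """Split an entry such as '2160p23' into (height, refresh rate in Hz)."""
    height, label = entry.lower().split("p")
    return int(height), WINDOWS_RATES[int(label)]


def best_mode(entries, source_fps):
    """Pick the entry closest to a whole multiple of the source frame rate.

    Hypothetical rule: smallest relative mismatch wins, ties go to the
    lower multiple.
    """
    def mismatch(entry):
        _, hz = parse_mode(entry)
        multiple = max(1, round(hz / source_fps))
        return (round(abs(hz - multiple * source_fps) / hz, 6), multiple)
    return min(entries, key=mismatch)


entries = build_entries(1080)
print(", ".join(entries))
print(best_mode(entries, 24000 / 1001))                      # film source
print(best_mode(["1080p24", "1080p50", "1080p60"], 25.0))    # 25p, no 25 Hz mode
```

The second call shows why a multiple is acceptable: with no 25 Hz mode entered, 50 Hz displays each 25 fps frame exactly twice.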

In most cases, the display will refresh the input signal at a multiple of the source frame rate (29.97 fps x 2 = 59.94 Hz). Frame interpolation of any kind is avoided so long as the two refresh rates are exact multiples.

treat 25p movies as 24p (requires ReClock or VideoClock)

Check this box to remove PAL Speedup common to PAL region (European) content. madVR will slow down 25 fps film by 4% to its original 24 fps (undoing the roughly 4.2% speedup). Requires the use of an audio renderer such as ReClock or VideoClock (JRiver Media Center) to slow down the audio by the same amount.
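The numbers behind PAL Speedup are simple ratios of 24 and 25. The sketch below is plain arithmetic, not madVR code; the pitch figure assumes the audio is slowed by simple resampling without pitch correction, which depends on the audio renderer in use.

```python
import math


def undo_pal_speedup(runtime_minutes_at_25fps):
    """Return the effect of playing 25 fps film at its original 24 fps."""
    speed = 24 / 25  # playback speed relative to the 25 fps version
    return {
        "speed_factor": speed,
        "slowdown_percent": (1 - speed) * 100,
        "runtime_minutes_at_24fps": runtime_minutes_at_25fps / speed,
        # Assumption: plain resampling, so pitch drops with speed.
        "pitch_shift_semitones": 12 * math.log2(speed),
    }


result = undo_pal_speedup(96.0)
for name, value in result.items():
    print(f"{name}: {value:.3f}")
```

A film that runs 96 minutes on a PAL disc returns to about 100 minutes, and uncorrected audio drops by roughly 0.7 of a semitone back to its original pitch.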

hack Direct3D to make 24.000Hz and 60.000Hz work

madVR Explained: A hack to Direct3D that enables true 24 and 60 Hz display modes in Windows 8.1 or 10 that are usually locked to 23.976 Hz and 59.940 Hz. May cause presentation queues to not fill.

Note on 24p Smoothness:

When playing videos with a native frame rate of 24 fps (such as most film-based content), it may be possible to see some visible stutter in panning shots when the source is played at its native refresh rate (24p). This stutter is due to the low frame count of the video. The human eye can easily discern frame rates higher than 60 Hz (perhaps even as high as 500 Hz), so low frame rates will be visible to the human eye in motion and are no different than watching the same source at a commercial theatre. If you want to simulate the low motion of 24 fps sources, try switching the GPU to 23 Hz and moving the mouse cursor around.

Motion interpolation can improve the fluidity of 24 fps content, but will introduce a noticeable and unwanted soap-opera effect. True 24 fps playback at a matching refresh rate (usually with 5:5 pulldown), even with small amounts of stutter or blur, remains the best way to accurately view film-based content.

What Is Motion Interpolation?

Recommended Use (display modes):

Refresh rate matching should be considered a default setting for a smooth playback experience. Use of any type of frame interpolation goes against the creator's intent and most often leads to temporal artifacts that are avoided with native playback at a matching refresh rate. The primary concern of display mode switching is avoiding 3:2 pulldown judder for 24 fps content (24p@23-24 Hz, and not 24p@60 Hz). If your display does not support refresh rate switching, consider enabling smooth motion in madVR (under rendering) to remove any judder.

When entering display modes, you may selectively choose which ones are used. For example, 8-bit RGB output may not need smaller refresh rates like 2160p25 when 2160p50 is entered (as 25p x 2 = 50p). Remember that refresh rates of 30 Hz and below are required for 4K 10-bit, RGB output.
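The 30 Hz limit for 4K 10-bit RGB can be checked with a bandwidth estimate. The sketch below assumes an HDMI 2.0 link with a 600 MHz TMDS clock ceiling and the standard CTA-861 pixel clocks for 2160p; neither figure comes from this guide, so treat them as assumptions, and note that other connections (such as DisplayPort or HDMI 2.1) have different limits.

```python
# Assumption: HDMI 2.0 link, maximum TMDS character rate of 600 MHz.
HDMI_2_0_MAX_TMDS_MHZ = 600.0

# Assumption: CTA-861 pixel clocks (MHz) for 3840 x 2160, including blanking.
PIXEL_CLOCK_MHZ = {24: 297.0, 25: 297.0, 30: 297.0, 50: 594.0, 60: 594.0}


def tmds_clock_mhz(refresh_hz, bits_per_channel):
    """RGB / 4:4:4 clock grows in proportion to bit depth relative to 8-bit."""
    return PIXEL_CLOCK_MHZ[refresh_hz] * bits_per_channel / 8


def fits_hdmi_2_0(refresh_hz, bits_per_channel):
    return tmds_clock_mhz(refresh_hz, bits_per_channel) <= HDMI_2_0_MAX_TMDS_MHZ


for hz in (30, 60):
    for bits in (8, 10, 12):
        status = "OK" if fits_hdmi_2_0(hz, bits) else "too fast"
        print(f"2160p{hz} RGB {bits}-bit: {tmds_clock_mhz(hz, bits):.2f} MHz ({status})")
```

Under these assumptions 2160p60 fits only at 8-bit RGB, while 2160p30 and below leave room for 10-bit and 12-bit.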

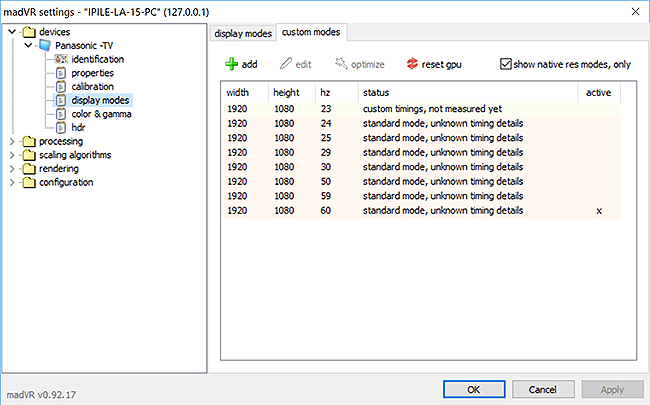

Custom Modes

This is actually a second tab under display modes. This is for users who do not want to use ReClock or other similar audio renderers to correct clock jitter that can result in dropped or repeated frames every few minutes with many graphics cards. Generally, this is anyone who is bitstreaming rather than decoding to PCM. The goal is to reduce or eliminate the dropped/repeated frames counted by madVR.
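A simplified model shows why a tiny refresh rate error turns into a dropped or repeated frame every few minutes. The sketch assumes the video plays at exactly its nominal rate while the display runs slightly off; the function name and the example refresh rates are hypothetical, and the figure madVR reports in its OSD may be calculated differently.

```python
def seconds_between_glitches(video_fps, display_hz):
    """Estimate the time between dropped/repeated frames.

    The display drifts by (display_hz - multiple * video_fps) refreshes per
    second; a glitch happens each time the drift adds up to one full refresh.
    """
    multiple = max(1, round(display_hz / video_fps))
    drift_per_second = abs(display_hz - multiple * video_fps)
    if drift_per_second == 0:
        return float("inf")  # perfectly matched clocks never glitch
    return 1.0 / drift_per_second


film = 24000 / 1001  # 23.976 fps

# Hypothetical measured refresh rates for illustration.
print(seconds_between_glitches(film, 23.970) / 60)    # minutes, stock timing
print(seconds_between_glitches(film, 23.97590) / 60)  # minutes, tuned timing
```

In this model a display running at 23.970 Hz glitches about every 2.8 minutes, while a custom mode that lands within about 0.0001 Hz of the source pushes the interval past two hours.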

What Is Clock Jitter?

madVR Explained:

Only custom timings can be optimized, but simply editing a mode and applying the "EDID / CTA" timing parameters creates a custom mode and is the recommended way to start optimizing a refresh rate. New timing parameters must be tested before they can be applied. Delete replaces the add button when selecting a custom mode. madVR uses each GPU vendor's private APIs to add these modes and does not work with EDID override methods like CRU; AMD, Intel and Nvidia GPUs are supported. With Nvidia, these custom modes can only be set to 8-bit, but 10 or 12-bit output is still possible if the GPU is already using a high bit depth before switching to the custom resolution.

Simple Tutorial: How to Create Custom Modes

Detailed Tutorial: How to Create Custom Modes

Recommended Use (custom modes):

AMD tends to minimize any clock jitter with factory frame repeats or drops at intervals of an hour or more. So custom resolutions are typically only of concern to Nvidia users. Because they are so brief and infrequent, most will never notice these occasional frame drops or repeats. Many have been living with them for years without ever perceiving any playback oddities. However, the automated creation of custom resolutions offered by madVR can make custom modes worth trying, provided you are willing to accept forced 8-bit output from the GPU and the need to repeat this process any time the video drivers are upgraded or reinstalled. Be warned that Nvidia's custom resolution API is buggy and can cause stability issues with refresh rate switching and tends to break regularly with driver updates. Trial-and-error can be involved with different drivers to get a display to accept a custom resolution.

CRU (Custom Resolution Utility) is a more reliable but less user-friendly method to create a custom resolution. CRU supports 12-bit custom resolutions with functioning display mode switching that survives a reboot of the operating system. The recommended method of using CRU is to first calculate an automated custom resolution with madVR, take a Print Screen of madVR's calculated values and enter those values into CRU. Unlike the buggy Nvidia API, CRU doesn't use the GPU vendor APIs and instead creates custom resolutions at the operating system-level.

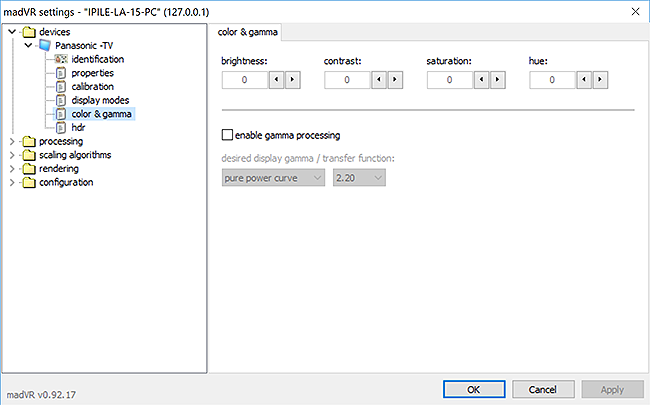

Color & Gamma

Color and transfer function adjustments do not need to be used unless you are unable to correct an issue using the calibration controls of your display.

enable gamma processing

This option works in conjunction with the gamma set in calibration. The value in calibration is used as the base that madVR uses to map to a chosen gamma below. A gamma must be set in calibration for this feature to work.

Most viewing environments work best with a gamma between 2.20 and 2.40, although many other values are possible.

What Is Display Gamma?

madVR Explained:

pure power curve

Uses the standard pure power gamma function.

BT.709/601 curve

Uses the inverse of a gamma function meant for cameras. This can be helpful if your display has crushed shadows.

2.20

Brightens mid-range values, which can be nice in a brightly lit room.

2.40

Darkens mid-range values, which might look better in a darker room.
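The effect of the two gamma values is easy to see with a pure power curve. The sketch below is a simplified model, assuming a pure power display response and a hypothetical remap_signal helper; madVR's own processing may differ in detail.

```python
def display_light(signal, gamma):
    """Relative light output (0..1) of a pure power display for a 0..1 signal."""
    return signal ** gamma


def remap_signal(signal, display_gamma, desired_gamma):
    """Pre-adjust a signal so a display with display_gamma behaves like desired_gamma.

    Sketch of the idea only: (s ** (desired / display)) ** display == s ** desired.
    """
    return signal ** (desired_gamma / display_gamma)


mid_gray = 0.5
print(display_light(mid_gray, 2.20))  # brighter mid-tones
print(display_light(mid_gray, 2.40))  # darker mid-tones

# A display calibrated to 2.20, asked to look like 2.40:
adjusted = remap_signal(mid_gray, display_gamma=2.20, desired_gamma=2.40)
print(display_light(adjusted, 2.20))  # matches the 2.40 result above
```

A 50% signal produces about 21.8% of peak light at gamma 2.20 and about 18.9% at 2.40, while black and white stay where they are. The remap step is also why the base gamma under calibration has to be set correctly for the conversion to land on the chosen value.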

Recommended Use (color & gamma):

It is best to leave these options alone. Without knowing what you're doing, it is more likely you will degrade the image rather than improve it. brightness and contrast adjustments are only useful on the PC side if 16-235 video levels are not displaying correctly after manual adjustment of the display's controls. A better solution to this problem is to create a 3D LUT or use a colorimeter to manually adjust the display's detailed grayscale controls to correct deviations from the calibrated gamma curve.

1. DEVICES (Continued...)

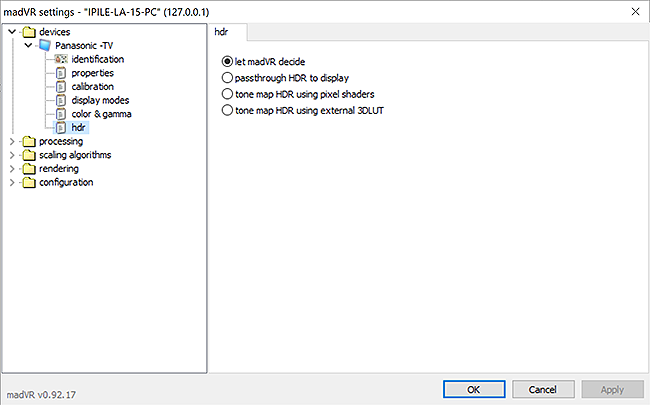

HDR

The hdr section specifies how HDR sources are handled. HDR refers to High Dynamic Range content. This is a new standard for consumer video that includes sources ranging from UHD Blu-ray to streaming services such as Netflix, Amazon, Hulu, iTunes and Vudu, as well as HDR TV broadcasts.

What Is HDR Video?

Current HDR support in madVR focuses on PQ HDR10 content. Other formats such as Hybrid Log Gamma (HLG), HDR10+ and Dolby Vision are not supported because current video filters and video drivers cannot passthrough these formats.

HDR sources are converted internally in the display through a combination of tone mapping, gamut mapping and transfer function conversion. madVR is capable of all of these tasks, so HDR video can be displayed accurately on any display type, and not just bright HDR TVs.

The three primary HDR options below provide various methods of compressing HDR sources to a lower peak brightness (known as tone mapping). Unlike SDR video, HDR10 videos are not mastered universally to match the specifications of all consumer displays and it is up to each display manufacturer to determine how to map the brightness levels of HDR video to its displays.

Each HDR setting adds incremental amounts of tone mapping and gamut mapping to the source video with varied levels of resource use. 3D LUT correction adds a small amount to GPU rendering times, but tone map HDR using pixel shaders with all HDR enhancements enabled can add considerably to rendering times, which may not make it a good option for mid to low-level GPUs when outputting at 3840 x 2160p (4K UHD).

What Is HDR Tone Mapping?

madVR offers four options for processing HDR10 sources:

let madVR decide

madVR detects the display's capabilities. Displays that are HDR-compatible receive HDR sources with metadata via passthrough (untouched). Not HDR-compatible? HDR is converted to SDR via pixel shader math at reasonable quality, but not the highest quality.

passthrough HDR to display

The display receives HDR RGB source values untouched for conversion by the display (a setting of let madVR decide will also accomplish this). HDR passthrough should only be selected for displays which natively support HDR playback.

send HDR metadata to the display:

- Use Nvidia's or AMD's private APIs to passthrough HDR metadata: Requires an Nvidia or AMD GPU with recent drivers and a minimum of Windows 7;

- Use Windows 10 HDR API (D3D 11 only): For Intel users. Requires Windows 10 and the use of Direct3D 11 for presentation (Windows 7 and newer).

To use the Windows API, HDR and WCG must be enabled in Windows Display settings. This is important as the Windows API will not dynamically switch in and out of HDR mode. By comparison, the Nvidia & AMD APIs do dynamically switch between SDR and HDR when HDR videos are played, allowing for perfect HDR and SDR playback. The Windows 10 API is all or nothing: all HDR all the time.

The Nvidia & AMD APIs for HDR metadata passthrough require that Windows 10 HDR and WCG be deactivated. AMD also needs two additional settings: Direct3D 11 for presentation (Windows 7 and newer) in general settings and 10-bit output from madVR (GPU output may be 8-bit). You do not need to select 10-bit output for Nvidia GPUs; dithered 8-bit output is acceptable and sometimes preferable.

tone map HDR using pixel shaders

HDR is converted to SDR through combined tone mapping, gamut mapping and transfer function conversion. The display receives SDR content. output video in HDR format: The display receives HDR content, but the HDR source is tone mapped/downconverted to the target specs.

tone map HDR using external 3DLUT

The display receives HDR or SDR content with the 3D LUT downconverting the HDR source to some extent. The 3D LUT input is R'G'B' HDR (PQ). The output is either R'G'B' SDR (gamma) or R'G'B' HDR (PQ). The 3D LUT applies some tone and/or gamut mapping.

Recommended Use (hdr):

The first decision you need to make when choosing an hdr setting is whether you want to output HDR video as HDR or SDR. If it is a true HDR display such as an LED TV or OLED TV with at least 500 nits of peak luminance, you most likely want HDR output. These displays usually follow the PQ EOTF curve 1:1 linearly up to 100 nits because they have more than adequate brightness to do so and tend to only focus on tone mapping the specular highlights above 100 nits. Given 90% or more of the video levels in current HDR videos are mastered within the first 0-100 nits (known as PQ reference white or SDR white), the majority of HDR displays don’t have a lot of tone mapping to do. For these displays, selecting passthrough HDR to display or applying a small amount of tone mapping to the brightest source levels with tone map HDR using pixel shaders and output video in HDR format checked can be all that is required to get a great HDR image.

If the display has limited light output, such as a projector or entry-level HDR LED TV, you will likely get a better image by converting HDR to SDR by selecting tone map HDR using pixel shaders and using the default output configuration. Why? It comes down to having a limited range of brightness to work with and a need to compress more of the HDR source range.

HDR converted to SDR does not involve any loss of the original HDR signal. HDR can be easily mapped to an SDR gamma curve with 1:1 PQ EOTF luminance tracking for any HDR sources that fit within the available peak nits of the display. However, for scenes that have nits levels mastered above the display peak nits, some tone mapping of the brightness levels to the display is required and the SDR gamma curve can often do a more convincing job of compressing the high dynamic range of PQ HDR videos to dimmer HDR displays than fixed linear PQ EOTF tracking with a roll-off curve.

HDR to SDR tone mapping tends to be most effective when the target display has no option but to tone map the shadows and midtones of HDR10 videos to accommodate the very bright HDR specular highlights of HDR videos within a limited range of contrast. The relative SDR gamma curve can be utilized to automatically resize HDR signals by rescaling the entire source levels to be brighter or darker to have more consistent contrast from scene-to-scene. This wholesale rescaling of the gamma curve is used by madVR to create precise control over the brightness and positioning of the shadows, midtones and highlights to produce higher Average Picture Levels (APLs) for the display with a good balance of contrast enhancement and brightness without overly clipping the HDR specular highlights. This eschews the traditional tone mapping for HDR flat panel TVs, which most often leaves the shadows and midtones largely untouched with a focus on tone mapping the specular highlights in isolation. Instead, HDR converted to SDR gamma acknowledges any deficiencies in available nits output by rebalancing the full source signal with the best compromise of brightness preservation versus local contrast enhancement.

This style of tone mapping that uses the full display curve may not be necessary for true HDR flat panel TVs that have the headroom to represent the specular highlights in HDR videos without having to compress the lower source levels. However, accurate tone mapping of the entire display curve becomes far more important when a display lacks the ability to display HDR specular highlights with proper brightness, such as HDR front projectors, and necessitates lowering the nits levels of the shadows and midtones in order to fit in the HDR highlights.

When using an SDR picture mode, HDR levels of peak brightness are not always achievable, but most displays that would benefit from HDR to SDR tone mapping have similar brightness when playing HDR or SDR sources, so there isn’t any downside to using a shared display mode to accommodate both content types. Converting HDR sources to SDR gamma also offers a way for SDR display owners to enjoy HDR10 videos on older SDR displays that lack the ability to accurately tone map HDR content.

HDR 3D LUTs are created for HDR-compatible displays with a colorimeter and free display calibration software such as DisplayCAL. 3D LUTs are static curves designed to apply static tone mapping roll-offs for specific source mastering peaks. A 3D LUT is not intended to be used to apply any form of dynamic HDR tone mapping or dynamic LUT correction.

If your display does a poor job with HDR sources or you want to experiment, try each of the HDR output options to find one that provides an HDR image that isn’t too dim or plagued by excessive specular highlight clipping.

Recommended hdr Setting by Display Type:

OLED (HDR) / High Brightness LED (HDR) (600+ nits):

passthrough HDR to display OR tone map using pixel shaders (HDR output).

Mid Brightness LED (HDR) (400-600 nits):

passthrough HDR to display OR tone map using pixel shaders (HDR output).

Low Brightness LED (HDR) (300-400 nits):

tone map using pixel shaders (SDR output) OR passthrough HDR to display.

Projector (HDR) (50-250 nits):

tone map using pixel shaders (SDR output) OR passthrough HDR to display.

Television (SDR) / Projector (SDR):

tone map using pixel shaders (SDR output).

Signs Your Display Has Correctly Switched into HDR Mode:

- An HDR icon typically appears in a corner of the screen;

- Backlight in the Picture menu will go up to its highest level;

- Display information should show a BT.2020 PQ SMPTE 2084 input signal;

- The first line of the madVR OSD will indicate NV HDR or AMD HDR.

*A faulty video driver can prevent the display from correctly entering HDR mode. If this is the case, it is recommended to roll back to an older, working driver.

List of Video Drivers that Support HDR Passthrough

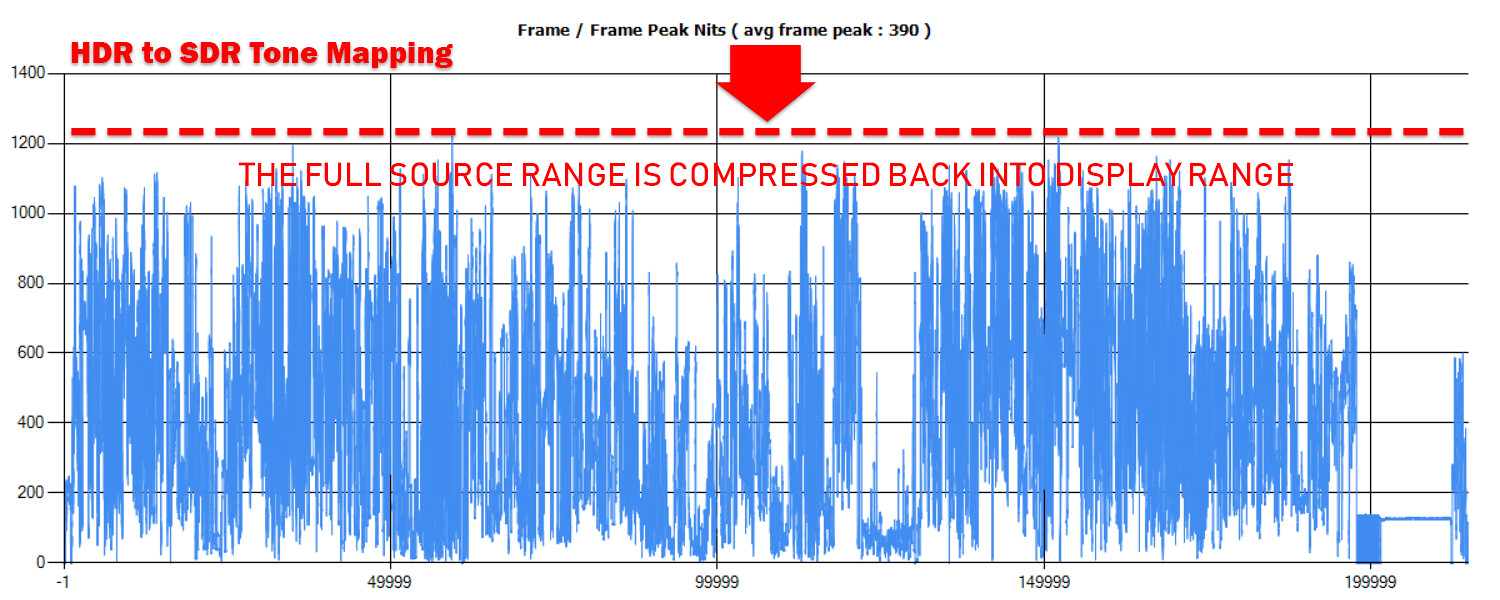

tone map HDR using pixel shaders

Pixel shader tone mapping is madVR’s video-shader based tone mapping algorithm. This applies both tone mapping and gamut mapping to HDR sources to compress them to the target peak nits entered in madVR. The output from pixel shaders is either HDR converted to SDR gamma or HDR PQ sent with altered metadata that reports the source peak brightness and primaries after tone mapping.

Pixel shaders does not rely on static HDR10 metadata. All tone mapping is done dynamically per detected movie scene by using real-time, frame-by-frame measurements of the peak brightness and frame average light level of each frame in the video.
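As a rough picture of what a per-frame measurement involves, the sketch below computes a frame peak and a frame average from linear-light pixel values. It borrows the brightest-channel convention used for the static MaxCLL and MaxFALL values; whether madVR measures frames the same way is an assumption, and the four-pixel "frame" is made-up data for illustration.

```python
def measure_frame(pixels_nits):
    """Return (frame peak, frame average) in nits.

    pixels_nits is a list of (R, G, B) linear-light values in nits. Each
    pixel is represented by its brightest channel (an assumed convention).
    """
    brightest = [max(pixel) for pixel in pixels_nits]
    return max(brightest), sum(brightest) / len(brightest)


# Hypothetical four-pixel frame: mostly dim, with one specular highlight.
frame = [(100, 50, 20), (1000, 900, 800), (10, 10, 10), (90, 90, 90)]
peak, average = measure_frame(frame)
print(peak, average)
```

The frame peak tells a dynamic tone mapper how much compression this particular frame needs, instead of assuming every frame is as bright as the single peak value in the static metadata.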

What Is HDR to SDR Tone Mapping?

What Is Gamut Mapping?

What Is the Difference Between Static & Dynamic Tone Mapping?

Pixel Shaders HDR Output Formats:

Default: SDR Gamma

The default pixel shaders output converts HDR PQ to SDR gamma (2.20, 2.40, etc.). madVR redistributes PQ values along the SDR gamma curve with necessary dithering to mimic the response of a PQ EOTF. This is HDR converted at the source side rather than the display side to replace a display's HDR picture mode.

Best Usage Cases:

HDR Projectors, SDR Projectors, Low Brightness LED HDR TVs, SDR TVs.

HDR to SDR: Advantages and Disadvantages

output video in HDR format: PQ EOTF

Checking output video in HDR format outputs in the original PQ EOTF. madVR's tone mapping is applied and the HDR metadata is altered to reflect the lowered RGB values after mapping. So the display receives the mapped RGB values along with the correct metadata to trigger its HDR mode. madVR does some pre-tone mapping for the display:

PQ (source) -> PQ EETF (Electrical-Electrical Transfer Function: PQ values rescaled by madVR) -> PQ EOTF (display)
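For reference, the PQ encoding at both ends of this chain is the SMPTE ST 2084 curve, which maps absolute brightness in nits to a 0..1 signal and back. The sketch below implements the two published formulas; the function names are my own, and the EETF step in the middle (the rescaling done by madVR) is not shown.

```python
# SMPTE ST 2084 (PQ) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_encode(nits):
    """Inverse EOTF: absolute luminance in nits -> PQ signal in 0..1."""
    y = (max(nits, 0.0) / 10000.0) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


def pq_decode(signal):
    """EOTF: PQ signal in 0..1 -> absolute luminance in nits."""
    e = max(signal, 0.0) ** (1 / M2)
    return 10000.0 * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1 / M1)


for nits in (100, 1000, 4000, 10000):
    print(f"{nits:>5} nits -> PQ signal {pq_encode(nits):.4f}")
```

100 nits lands at a signal level of roughly 0.51 and 1,000 nits at roughly 0.75, so the first 100 nits occupy about half of the PQ signal range. An EETF works on these signal values: it takes PQ in and hands PQ out, just with the brightest values pulled down.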

Best Usage Cases:

OLED HDR TVs, Mid-High Brightness LED HDR TVs.

Doing some tone mapping for the display is useful for an HDR standard that relies on a single value for peak luminance. Most displays will gamble that frame peaks near the source peak will be infrequent and choose to maximize the display’s available brightness by using a roll-off that prioritizes brightness over specular highlight detail. This usually results in some clipping of very bright highlight detail in some scenes. Other displays will assume much of the image is well above the display’s peak brightness and use a harsh tone curve that makes many scenes unnecessarily dark.

Pixel shaders with HDR output compresses any specular highlights that are above the target peak nits entered in madVR. These highlights are tone mapped back into the display range to present all sources with the same compressed source peak. This benefits the display by keeping all specular highlight detail within the display range without clipping and prevents the display from choosing a harsh tone curve for titles with high MaxCLLs, such as 4,000 nits or 10,000 nits, by reporting a lower source peak to the display. Compression is applied dynamically, so only highlights that have levels above the specified display peak nits are compressed back into range and the rest of the image remains the same as HDR passthrough. The ability to compress HDR highlights too bright for the display is very similar to the HDR Optimiser found on the Panasonic UB820/UB9000 Blu-ray players.

Rescaling of the source values and HDR metadata does not always work well with all displays. Most HDR displays will apply some additional compression to the input values with their internal display curves, which can sometimes lead to some clipping of the highlights or distorted colors due to the effect of the double tone map. Pixel shaders also cannot correct displays that do not follow the PQ curve, like those that artificially boost the brightness of HDR content or those with poor EOTF tracking that crush shadow detail.

Pixel shaders with HDR output checked is only recommended if it works in conjunction with your display's own internal tone mapping, largely based on how it handles the altered static metadata provided by madVR (lowered MaxCLL and mastering display peak luminance). Displays with a dynamic tone mapping setting don’t usually use the static metadata and should ignore the metadata in favor of simply reading the RGB values sent by madVR. Some experimentation with the target peak nits and movie scenes with many bright specular highlights can be necessary to determine the usefulness of this setting. The brightest titles mastered above 1,000 nits tend to be the best usage case for this tone mapping. A good way to test the impact of the source rescaling is to create two hdr profiles mapped to keyboard shortcuts in madVR that can be toggled during playback: one set to passthrough HDR to display and the other pixel shaders with HDR output.

HDR to HDR: Advantages and Disadvantages

*Incorrect metadata can be sent by some Nvidia drivers when madVR is set to passthrough HDR content. If a display uses this metadata to select a tone curve, incorrect metadata may result in some displays selecting the wrong tone curve for the source. There are many driver versions known to both passthrough HDR content correctly and provide an accurate MaxCLL, MaxFALL and mastering display maximum luminance to the display.

List of Nvidia Video Drivers that Support Correct HDR Metadata Passthrough

tone map HDR using external 3DLUT

The best method to reliably defeat the display's tone mapping is to use the option tone map HDR using external 3DLUT and create a 3D LUT with display calibration software, which will trigger the display's HDR mode and apply a tone curve and adjust its color balance by using corrections provided by the 3D LUT table.

HDR 3D LUTs are static tables and may be created in several configurations to replace the selection of static HDR curves used by the display: such as 500 nits, 1,000 nits, 1,500 nits, 4,000 nits and 10,000 nits. HDR 3D LUT curve selection is automated with HDR profile rules referencing hdrVideoPeak.
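The selection logic that such profile rules express can be sketched as follows. This is written in Python purely to show the decision, not in madVR's profile rule syntax, and the file names are hypothetical.

```python
# Peak nits each static HDR 3D LUT was built for (from the list above).
LUT_PEAKS = [500, 1000, 1500, 4000, 10000]


def select_lut(hdr_video_peak):
    """Pick the smallest LUT whose peak covers the video's reported peak."""
    for peak in LUT_PEAKS:
        if hdr_video_peak <= peak:
            return f"hdr_{peak}nits.3dlut"   # hypothetical file name
    return f"hdr_{LUT_PEAKS[-1]}nits.3dlut"  # anything brighter uses the largest


print(select_lut(2788))  # a title with a 2,788 nits peak
print(select_lut(1000))
```

Choosing the smallest table that still covers the source peak avoids spending the roll-off on brightness levels the title never reaches.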

Example of image tone mapped by madVR

2,788 nits BT.2020 -> 150 nits BT.709 (480 target nits):

Settings: tone map HDR using pixel shaders

Preview of Functionality of the Next Official madVR Build and Current AVS Forum Test Builds:

HDR -> SDR: Resources Toolkit

What Is Dynamic Clipping?

What Is a Dynamic Target Nits?

Instructions: Using madMeasureHDR to create dynamic HDR10 metadata

target peak nits [200]

target peak nits is the target display brightness for tone mapping in PQ nits. Enter the estimated actual peak nits of the display. If you own a colorimeter, the easiest way to measure peak luminance is to open a 100% white pattern with HCFR and read the value for Y. If you are outputting in an HDR format, the standard method to measure HDR peak luminance is to measure Y with a 10% white window in HDR mode. A TV's peak luminance can be estimated by multiplying the known peak brightness of the display times the chosen backlight setting (e.g., 300 peak nits x 11/20 backlight setting = 165 real display nits).
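The backlight estimate above is a straight proportion, shown here as a one-line helper. It assumes brightness scales linearly with the backlight setting, which is only an approximation for real TVs; a colorimeter reading is more reliable when one is available.

```python
def estimate_peak_nits(rated_peak_nits, backlight_setting, backlight_max):
    """Scale the rated peak brightness by the fraction of backlight in use."""
    return rated_peak_nits * backlight_setting / backlight_max


print(estimate_peak_nits(300, 11, 20))  # the example from the text: 165 nits
```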

Recommendation for Estimating Peak Nits for a Projector (via a Light Meter)

target peak nits doesn't need to correlate to the actual peak brightness of the display when set to SDR output. SDR brightness works similarly to a dynamic range slider: Increasing the display target nits above the actual peak nits of the display increases HDR contrast (makes the image darker) and lowering it decreases HDR contrast (makes the image brighter).

HDR output uses fixed luminance (the target brightness is not rescaled by the display) and decreasing the target peak nits below the actual display peak nits should make the image increasingly darker as the source peak is compressed to a brightness that is lower than the display peak.

With output video in HDR format checked, only scenes above the entered target peak nits have tone mapping applied. If set to 700 nits, for example, the majority of current HDR content would be output 1:1 and only the brightest scenes would need tone mapping (you can reference the peak brightness of any scene in the madVR OSD). Most HDR displays attempt to retain specular highlight detail up to 1,000 nits, but can often benefit from some assistance in preserving brighter highlights in titles with higher MaxCLLs of 4,000 nits - 10,000 nits.

High Processing

tone mapping curve [BT.2390]

BT.2390 is the default curve. The entire source range is compressed to match the set target peak nits.

A tone mapping curve is necessary because clipping the brightest information would cause the image to flatten and lose detail wherever pixels exceed the display capabilities. Tone mapping applies an S-shaped curve to create different amounts of compression to pixels of different luminances. The strongest compression is applied to the highlights while adjusting other pixels relative to each other to retain similar contrast between bright and dark detail relative to the original image.

clipping is automatically substituted if the content peak brightness is below the target peak nits.

Report BT.2390 comes from the International Telecommunication Union (ITU).
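For the curious, the curve described in Report BT.2390 can be sketched in a few lines. This follows the EETF formulas published in the report, simplified by assuming a black level of zero (the report's black level lift is omitted); it is not madVR's code, which may apply the curve differently. The function names are my own.

```python
# SMPTE ST 2084 (PQ) constants.
M1, M2 = 2610 / 16384, 2523 / 4096 * 128
C1, C2, C3 = 3424 / 4096, 2413 / 4096 * 32, 2392 / 4096 * 32


def pq_encode(nits):
    y = (max(nits, 0.0) / 10000.0) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


def pq_decode(signal):
    e = max(signal, 0.0) ** (1 / M2)
    return 10000.0 * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1 / M1)


def bt2390_tone_map(pixel_nits, source_peak_nits, target_peak_nits):
    """Compress one luminance value from the source range to the target range."""
    if source_peak_nits <= target_peak_nits:
        # Content already fits: plain clipping is substituted.
        return min(pixel_nits, target_peak_nits)
    source_pq = pq_encode(source_peak_nits)
    e1 = min(pq_encode(pixel_nits) / source_pq, 1.0)  # normalized PQ signal
    max_lum = pq_encode(target_peak_nits) / source_pq
    knee = 1.5 * max_lum - 0.5                        # knee start (KS)
    if e1 < knee:
        e2 = e1                                       # below the knee: 1:1
    else:
        t = (e1 - knee) / (1 - knee)
        # Hermite spline easing from the knee into the target peak.
        e2 = ((2 * t**3 - 3 * t**2 + 1) * knee
              + (t**3 - 2 * t**2 + t) * (1 - knee)
              + (-2 * t**3 + 3 * t**2) * max_lum)
    return pq_decode(e2 * source_pq)


for nits in (10, 100, 1000, 4000):
    print(f"{nits:>5} nits -> {bt2390_tone_map(nits, 4000, 400):.1f} nits")
```

In this sketch, values below the knee pass through unchanged and everything above it is eased toward the target, so a 4,000 nits source peak lands exactly on a 400 nits target instead of clipping.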

clipping

No tone mapping curve is applied. All pixels higher than the set target nits are clipped and those lower are preserved 1:1. Pixels that clip will turn white. Obviously, this is not recommended if you want to preserve specular highlight detail.

arve custom curve

With the aid of Arve's Custom Gamma Tool, it is possible to create custom PQ curves that are converted to 2.20, 2.40, BT.1886 or PQ EOTFs. The Arve Tool is designed to work with a JVC projector via a network connection, but it may be possible to manually adjust the curve without direct access to the display by changing the curve parameters in Python and saving the output for madVR. Be prepared to do some reading as this tool is complicated.

Instructions:

- Install Python 3.6 or later;

- Download Arve Custom Tool for madVR;

- Open menu.py with Python;

- Follow the instructions here;

- Skip to ga (Adjust gamma curve) if you don't own a JVC projector;

- Documentation;

- Speed guide;

- Explanation of gamma curve parameters.

Recommended Use (tone mapping curve):

The tone mapping curve can be left at its default value of BT.2390. Most tone mapping in madVR is optimized for this curve and arve custom curves don't support frame measurements. clipping is only available for comparison or test purposes.

color tweaks for fire & explosions [balanced]

Fire is mostly comprised of a mixture of red, orange and yellow hues. After tone mapping and gamut mapping are applied, yellow shifts towards white due to the effects of tone mapping, which can cause fire and explosions to appear overly red. To correct this, madVR shifts bright red/orange pixels towards yellow to put a little yellow back into the flames and make fire appear more impactful. All bright red/orange pixels are impacted by this hue shift, so it is possible this is not desirable in every scene, but the color shift is slight and not always noticeable.

high strength

Bright red/orange out-of-gamut pixels are shifted towards yellow by 55.55% when gamut mapping is applied to compensate for the loss of yellow hues in fire and flames caused by tone mapping. This is meant to improve the impact of fire and explosions directly, but will have an effect on all bright red/orange pixels.

balanced [Default]

Bright red/orange out-of-gamut pixels are shifted towards yellow by 33.33% (and only the brightest pixels) when gamut mapping is applied to compensate for the loss of yellow hues in fire and flames caused by tone mapping. This is meant to improve the impact of fire and explosions directly, but will have an effect on all bright red/orange pixels.

disabled

All out-of-gamut pixels retain the same hue as the tone mapped result when moved in-gamut.

Mad Max Fury Road:

color tweaks for fire & explosions: disabled

color tweaks for fire & explosions: balanced

color tweaks for fire & explosions: high

Mad Max Fury Road (unwanted hue shift):

color tweaks for fire & explosions: disabled

color tweaks for fire & explosions: balanced

color tweaks for fire & explosions: high

Recommended Use (color tweaks for fire & explosions):

Most would be better off disabling color tweaks for fire & explosions. Bright reds and oranges in movies are more commonly seen in scenes that don’t include any fire or explosions. So, on average, you will have more accurate hues by not shifting bright reds and oranges towards yellow to improve a few specific scenes at the expense of all other scenes in the video. These color tweaks are best reserved for those who place a high premium on having “pretty fire.”

High - Maximum Processing

highlight recovery strength [none]

Detail in compressed image areas can become slightly smeared due to a loss of visible luminance steps. When adjacent pixels with large luminance steps become the same luminance or the difference between those steps is drastically reduced (e.g. a difference of 5 steps becomes a difference of 2 steps), a loss of texture detail is created. This is corrected by simply adding back some detail lost in the luminance channel. The effect is similar to applying image sharpening to certain frequencies with the potential to give the image an unwanted sharpened appearance at higher strengths.

Available detail recovery strengths range from low to are you nuts!?. Higher strengths could be more desirable at lower target peak nits where compressed portions of the image can appear increasingly flat. Expect a significant performance hit; only the fastest GPUs should be enabling it with 4K 60 fps content.

none [Default]

highlight recovery strength is disabled.

low - are you nuts!?

Recovered frequency width varies from 3.25 to 22.0. GPU resource use remains the same with all strengths.

Batman v Superman:

highlight recovery strength: none

highlight recovery strength: medium

Recommended Use (highlight recovery strength):

The lone reason not to enable highlight recovery strength is performance. It is very resource-intensive. Otherwise, this setting adds a lot of detail and sharpness to compressed highlights, particularly on displays with a low peak brightness. I would recommend starting with a base value of medium, which does not oversharpen the highlights and leaves room for those who want even higher strengths with even more detail recovery. highlight recovery strength performs considerably faster when paired with D3D11 Native hardware decoding in LAV Video compared to DXVA2 (copy-back). This is due to D3D11 Native's better optimization for DX11 DirectCompute used by this shader.

Low Processing

measure each frame's peak luminance [Checked]

Overcomes the limitation of HDR10 metadata, which provides a single value for peak luminance but no per scene or per frame dynamic metadata. madVR can measure the brightness of each pixel in each frame and provide a rolling average, as reported in the OSD. The brightness range of an HDR video will vary during each scene. By measuring the peak luminance of each frame, madVR will adjust the tone mapping curve subtly throughout the video to provide optimized highlight detail. This is like having HDR10+ metadata available to provide more dynamic tone mapping for future releases.
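To make the idea of per-frame measurement more concrete, here is a minimal Python sketch. It decodes PQ-encoded pixel values to nits with the standard SMPTE ST 2084 formula, takes the frame peak, and keeps a rolling average. The PeakTracker class, the window length and the plain mean are assumptions for illustration only; madVR's actual measurement and smoothing are not documented in this guide.

```python
from collections import deque

# SMPTE ST 2084 (PQ) EOTF constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_to_nits(signal):
    """Convert a normalized PQ signal value (0.0 to 1.0) to nits."""
    p = signal ** (1 / M2)
    return 10000.0 * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


class PeakTracker:
    """Tracks the measured peak of each frame and a rolling average.

    The window length (in frames) is an arbitrary choice for this sketch.
    """

    def __init__(self, window=24):
        self.history = deque(maxlen=window)

    def update(self, frame_pq_values):
        """Measure one frame; return (frame peak, rolling average) in nits."""
        peak = max(pq_to_nits(v) for v in frame_pq_values)
        self.history.append(peak)
        return peak, sum(self.history) / len(self.history)


tracker = PeakTracker(window=3)
# Three hypothetical frames, each given as a few normalized PQ samples.
for frame in ([0.10, 0.50, 0.60], [0.20, 0.55], [0.30, 0.75]):
    peak, average = tracker.update(frame)
    print(f"frame peak {peak:7.1f} nits, rolling average {average:7.1f} nits")
```

A tone mapping curve driven by the rolling value rather than the single static HDR10 peak can compress dark scenes less, which is the benefit described above.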

Recommended Use (measure each frame's peak luminance):

The performance cost of frame measurements is very low, so it is worth enabling them to add some specular highlight detail and provide a small boost in brightness for some scenes.

Note: The checkbox compromise on tone & gamut mapping accuracy under trade quality for performance is checked by default. Gamut mapping is applied without hue and saturation correction when this is enabled. Unless you have limited processing resources available, you'll want to uncheck this to get the full benefit of tone mapping.

HDR -> SDR: The following should also be selected in devices -> calibration -> this display is already calibrated:

- primaries / gamut (BT.709, DCI-P3 or BT.2020)

- transfer function / gamma (pure power curve 2.xx)

If no calibration profile is selected (by ticking disable calibration controls for this display), madVR maps all HDR content to BT.709 and pure power curve 2.20.

tone map HDR using pixel shaders set to SDR output will use any matching 3D LUTs attached in calibration. HDR is converted to SDR and the 3D LUT is left to process the SDR output as it would any other video.

Other video filters required for HDR playback:

- LAV Filters 0.68+: To pass the HDR metadata through to madVR.

ShowHdrMode: To add additional HDR info to the madVR OSD including the active HDR mode selected and detailed HDR10 metadata read from the source video, create a blank folder named "ShowHdrMode" and place it in the madVR installation folder.
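The folder can be created by hand in Explorer, but if you prefer to script it, something like the following Python sketch would do the same thing. The function name is made up, and the path in the commented usage line is only a placeholder; substitute the folder where madVR is actually installed on your system.

```python
from pathlib import Path


def enable_show_hdr_mode(madvr_dir):
    """Create the empty "ShowHdrMode" folder inside the madVR folder.

    Does nothing if the folder already exists. Returns the folder path.
    """
    folder = Path(madvr_dir) / "ShowHdrMode"
    folder.mkdir(exist_ok=True)
    return folder


# Example call (placeholder path, adjust to your own madVR location):
# enable_show_hdr_mode(r"C:\madVR")
```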

HDR10 Metadata Explained

HDR Demos from YouTube with MPC-BE

Image Comparison: SDR Blu-ray vs. HDR Blu-ray at 100 nits on a JVC DLA-X30 by Vladimir Yashayev

Official HDR Tone Mapping Development Thread at AVS Forum

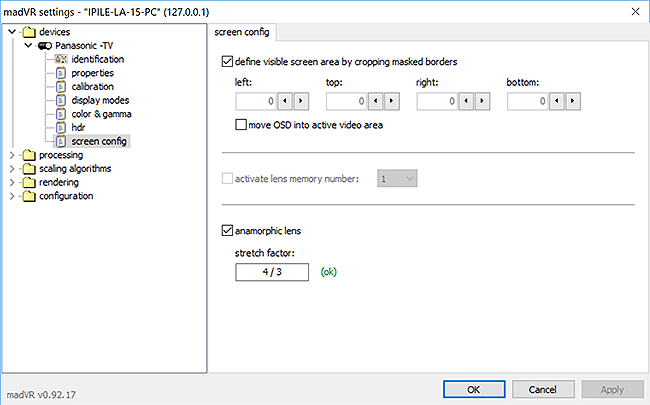

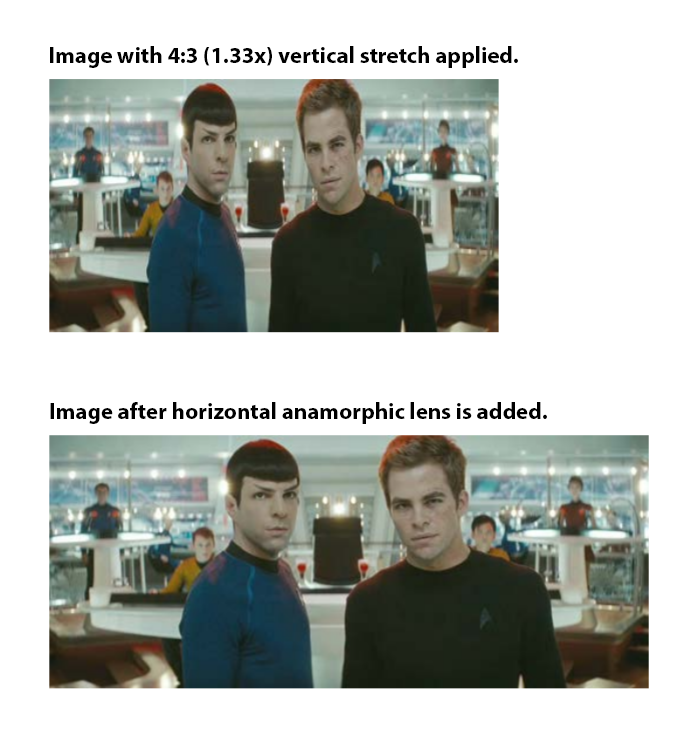

Screen Config

The screen config section options can be used to apply screen masking to the player window or an anamorphic stretch to crop portions of the screen area to dimensions that match CinemaScope (scope) projector screens. This screen configuration is used alongside zoom control (under processing) to enforce a reduced target window size for rendering all video. The device type must be set to Digital Projector for this option to appear.

Those who output to a standard 16:9 display without screen masking shouldn't need to adjust these settings. screen config is designed more for users of Constant Image Height (CIH), Constant Image Width (CIW) or Constant Image Area (CIA) projection that use screen masking to hide or crop portions of the image.

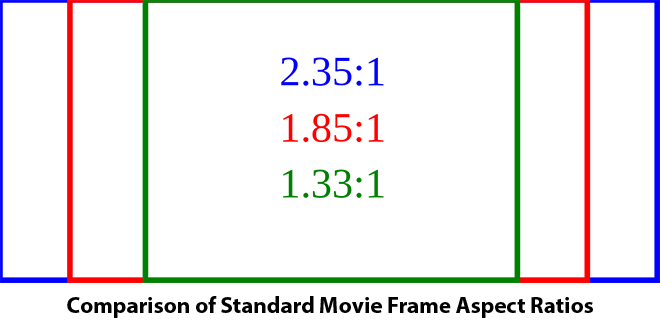

A media player will always send a 16:9 image that will fill a 16:9 screen if the source video happens to be 16:9. However, many video formats are mastered in wider aspect ratios known as CinemaScope with common ratios of 2.35:1, 2.39:1 and 2.40:1 that are too wide for the default 16:9 window size. Normally, black bars are added to the top and bottom of CinemaScope videos to rescale them to fit the 16:9 window. To get rid of these black bars, some projector owners use a zoom lens to make CinemaScope videos larger and wider and project them onto wider 2.35:1 - 2.40:1 ratio CinemaScope screens.

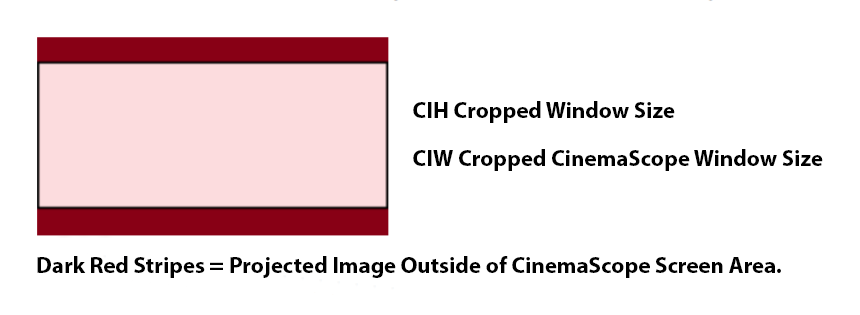

If a 16:9 image is projected onto a CinemaScope projector screen with a zoom setting designed for CinemaScope, the image overshoots the top and bottom of the screen like this:

This screen overshoot is managed by using a projector lens memory that disables the zoom lens when 16:9 videos are played. Then 16:9 videos are zoomed to a smaller size to fit the height of the screen with some vacant space left on both sides. Zoom settings for 21:9 and 16:9 content are stored as separate lens memories in the projector. However, in some cases, it is possible for video content to overshoot the 21:9 projector zoom setting if the source switches at any point from a 21:9 to 16:9 aspect ratio during playback, such as the 1.78:1 IMAX sequences in The Dark Knight Trilogy. Two-way screen masking is often used to frame the top and bottom of the screen to ensure no visible content spills outside the screen area during these sequences.

madVR's solution for framing CinemaScope screens is to define a screen rectangle for the media player that maintains the correct aspect ratio at all times. If any content is to spill outside the defined screen space, it is automatically cropped or resized to fit the player window. This ensures the full screen area is used regardless of the source aspect ratio without having to worry about any video content either being projected outside the screen area or any black bars being left along the inside edges.

screen config and its companion zoom control (which is discussed later) are compatible with all forms of Constant Image Height (CIH), Constant Image Width (CIW) and Constant Image Area (CIA) projection.

What Is Constant Image Height (CIH), Constant Image Width (CIW) and Constant Image Area (CIA) Projection?

define visible screen area by cropping masked borders

The defined screen area is intended to simulate screen masking used to frame widescreen projector screens by placing black pixels on the borders of the media player window and rescaling the image to a lower resolution.

madVR will maintain this screen framing when cropping black bars and resizing the image using zoom control. Only active when fullscreen.

Screen masking is used to create a solid, black rectangle around the edges of the screen space that frames the screen for greater immersion and keeps all video contained within the screen area.

This masking is applied to create aspect ratios that match most standard video content:

Current consumer video sources are distributed exclusively in a 16:9 aspect ratio intended for 16:9 screens. The width of consumer video is always the same (1920 or 3840) and only the height is rescaled to fit aspect ratios wider than 16:9. The fixed width of consumer video means screen masking should only be needed at the top and bottom of the player window to remove the black bars. When the top and bottom cropping match the target screen aspect ratio, the cropped screen area should provide the precise pixel height to fill the projector panel so that zoomed CinemaScope videos fit both the exact height AND width of the scope screen.

The pixel dimensions of any CinemaScope screen are determined by the amount of cropping created by the projector zoom. When zoomed, the visible portions of standard 16:9 sources fill the full screen space with the source's black bars overshooting the top and bottom of the screen. This creates a cropped 21:9 image.

Original Source Size: The pixel dimensions of the source rectangle output from madVR to the display. Sources are always output as 1920 x 1080p or 3840 x 2160p, often with black bars included in the video.

Projected Image Size: The pixel dimensions of the image when projected onto the projector screen. The size of the projected image is controlled by the lens controls of the projector, which sets the zoom, focus and, in some cases, lens shift of the projected image. An anamorphic lens and anamorphic stretch are sometimes used in place of a projector zoom lens to rescale the image to a larger size.

Native projector resolutions are:

- 1920 x 1080p (HD);

- 3840 x 2160p (4K UHD);

- 4096 x 2160p (DCI 4K).

Projector screens are available in several aspect ratios, including:

- 2.35:1;

- 2.37:1;

- 2.39:1;

- 2.40:1; and,

- Other non-standard aspect ratios.

When projecting images onto these screens, the projected resolution matches the size of the cropped screen area: 2.35:1 = 1920 x 817 to 4096 x 1743 and 2.40:1 = 1920 x 800 to 4096 x 1707.

Cropped Size of Standard Movie Content:

- 1.33:1: 1440 x 1080 -> 2880 x 2160

- 1.78:1: 1920 x 1080 -> 3840 x 2160

- 1.85:1: 1920 x 1038 -> 3840 x 2076

- 2.35:1: 1920 x 817 -> 3840 x 1634

- 2.39:1: 1920 x 803 -> 3840 x 1607

- 2.40:1: 1920 x 800 -> 3840 x 1600
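For ratios wider than 16:9, the heights in the list above follow from dividing the fixed width by the aspect ratio and rounding to the nearest pixel. A small Python sketch of that arithmetic (the function name is made up for the example):

```python
def cropped_height(width, aspect_ratio):
    """Active picture height for a source wider than 16:9.

    The width stays fixed (1920, 3840 or 4096) and only the height
    shrinks, so height = width / aspect ratio, rounded to whole pixels.
    """
    return round(width / aspect_ratio)


for ratio in (1.85, 2.35, 2.39, 2.40):
    hd = cropped_height(1920, ratio)
    uhd = cropped_height(3840, ratio)
    print(f"{ratio:.2f}:1: 1920 x {hd} -> 3840 x {uhd}")
```

The same function gives the DCI 4K figures if you pass a width of 4096.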

Aspect Ratio Cheat Sheet

As the media player will always output a 1.78:1 image by default (1920 x 1080p or 3840 x 2160p), new screen dimensions are only necessary for the other aspect ratios: 1.85:1, 1.33:1, 2.35:1, 2.39:1 and 2.40:1.

CIH, CIW or CIA projection typically separates all aspect ratios into two screen configurations with two saved lens memories:

One Standard 16:9 (1.78:1, 1.85:1, 1.33:1) screen configuration that uses the default 16:9 window size and,

A CinemaScope 21:9 (2.35:1, 2.39:1, 2.40:1) screen configuration that matches the zoomed or masked screen area suitable for wider CinemaScope videos.

Any rescaling or cropping that happens within these player windows is controlled by the settings in zoom control.

Screen Profile #1 - CinemaScope (2.35:1 - 2.40:1) (21:9)

Screen Sizes: 1.78:1, 2.05:1, 2.35:1, 2.37:1, 2.39:1, 2.40:1

The height of the screen area is cropped based on a combination of the GPU output resolution and the aspect ratio of the projector screen.

Fixed CIH projection without a zoom lens would use the same 2.35:1, 2.37:1, 2.39:1 or 2.40:1 screen dimensions for 21:9 and 16:9 sources. When a 16:9 source is played, image downscaling is activated to shrink 16:9 videos to match the height of 21:9 videos.
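To get a feel for how much a 16:9 video shrinks in a fixed CIH setup, the target size can be estimated by holding the screen height constant and deriving the width from the source aspect ratio. This Python sketch only shows the arithmetic; the function name is hypothetical and madVR's own rounding may differ by a pixel.

```python
def fit_to_screen_height(source_aspect, screen_height):
    """Size of a source scaled so its height matches the screen height.

    Returns (width, height) in pixels, with the width rounded to the
    nearest whole pixel.
    """
    return round(screen_height * source_aspect), screen_height


# 16:9 video on a 2.35:1 screen area that is 817 pixels tall (1080p GPU output).
print(fit_to_screen_height(16 / 9, 817))
# Same case with a 1634 pixel tall screen area (2160p GPU output).
print(fit_to_screen_height(16 / 9, 1634))
```

On a 1920 x 817 screen area this leaves vacant space on both sides of the downscaled 16:9 image.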

Zoom-based CIH, CIW and CIA projection needs a second screen configuration that switches to the default 16:9 rectangle for 16:9 content (disables the zoomed or masked screen dimensions). It is also possible to have madVR activate a lens memory on the projector to match the 16:9 or 21:9 screen profile.

To frame a CinemaScope screen, crop the top and bottom of the player window until the window size matches the exact height of the projector screen up to its borders. For example, a 2.35:1 screen needs a total crop of approximately 263 pixels at 1920 x 1080 (about 131 each from the top and bottom) and 526 pixels at 3840 x 2160 (263 each from the top and bottom).
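The amount to crop can be worked out from the GPU output resolution and the screen aspect ratio. The Python sketch below assumes the crop is split evenly between the top and bottom, which is the usual case for a centered image; the function name is made up for the example.

```python
def masking_crop(gpu_width, gpu_height, screen_aspect):
    """Work out the masked screen area for a CinemaScope screen.

    Returns (visible height, total crop, crop per edge) in pixels,
    assuming the crop is split evenly between top and bottom.
    """
    visible_height = round(gpu_width / screen_aspect)
    total_crop = gpu_height - visible_height
    return visible_height, total_crop, total_crop / 2


for width, height in ((1920, 1080), (3840, 2160), (4096, 2160)):
    visible, total, per_edge = masking_crop(width, height, 2.35)
    print(f"{width} x {height} -> {width} x {visible} "
          f"(crop {total} total, {per_edge} per edge)")
```

When the total crop is an odd number of pixels, the per-edge value is a half pixel, so one edge has to take the extra pixel.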

2.35:1 Screens (CinemaScope Masked):

1920 x 1080 (GPU) -> 1920 x 817 (cropped)

3840 x 2160 (GPU) -> 3840 x 1634 (cropped)

4096 x 2160 (GPU) -> 4096 x 1743 (cropped)

2.37:1 Screens (CinemaScope Masked):

1920 x 1080 (GPU) -> 1920 x 810 (cropped)

3840 x 2160 (GPU) -> 3840 x 1620 (cropped)