2022-04-09, 22:47

2022-04-10, 10:07

I'm not surprised at all. And yes, you're right, the Shield TV can't properly play UHD 10-bit h.264 4:2:2 files - very few, if any, consumer players can do so using hardware decode.

4:2:2 isn't a consumer colour space format. Very few, if any, player solutions at a consumer price point will support it. Consumer players are based around the consumer 4:2:0 format used by DVD, consumer DVB-T/T2/S/S2/C, ATSC 1.0 and 3.0, HD Blu-ray and UHD Blu-ray (as well as all the streaming services like Netflix, Prime, Disney+ and Apple TV+). There are no consumer video sources that are 4:2:2 - other than some consumer cameras that shoot 4:2:2 for post-production.

The hardware acceleration implemented in ARM (and PC) VPU and GPU solutions that decode h.264 video doesn't usually support 4:2:2 h.264, and is usually also limited to 8-bit. This means that, rather than using hardware-accelerated decoding, most players have to fall back to CPU decoding for 4:2:2 and/or 10-bit h.264 - and as you can see, CPUs like the one used in the nVidia Shield TV simply don't have the processing power for UHD 4:2:2 h.264 software decode.
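As a rough way to check a file before trying it on a player, the pixel format string that ffprobe reports (for example `yuv422p10le`) can be split into chroma subsampling and bit depth. The sketch below is only an illustration of the rule of thumb above: the function names are made up for this example, and the "likely hardware decodable" rule is an assumption that will not hold for every device (the Apple TV 4K being one exception).

```python
import re

# pix_fmt and codec_name can be read with, for example:
#   ffprobe -v error -select_streams v:0 \
#           -show_entries stream=codec_name,pix_fmt -of json FILE

def describe_pix_fmt(pix_fmt: str) -> tuple[str, int]:
    """Return (chroma subsampling, bit depth) for common planar YUV names."""
    m = re.fullmatch(r"yuvj?(420|422|444)p(\d+)?(le|be)?", pix_fmt)
    if m is None:
        raise ValueError(f"unrecognised pix_fmt: {pix_fmt}")
    digits, depth, _endianness = m.groups()
    subsampling = ":".join(digits)          # "422" -> "4:2:2"
    return subsampling, int(depth) if depth else 8  # no suffix means 8-bit

def likely_hw_decodable(codec: str, pix_fmt: str) -> bool:
    """Rule of thumb only (an assumption, not a device capability query)."""
    subsampling, depth = describe_pix_fmt(pix_fmt)
    if subsampling != "4:2:0":
        return False                        # 4:2:2 / 4:4:4 rarely accelerated
    if codec == "h264":
        return depth == 8                   # h.264 hardware decode is usually 8-bit only
    if codec == "hevc":
        return depth in (8, 10)             # HEVC hardware decode usually covers 10-bit
    return False

print(describe_pix_fmt("yuv422p10le"))
print(likely_hw_decodable("h264", "yuv422p10le"))
print(likely_hw_decodable("hevc", "yuv420p10le"))
```

A UHD h.264 file reported as `yuv422p10le` fails both checks, which matches the stuttering software decode seen on the Shield TV.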

Most consumer decoders for h.265/HEVC DO support 10-bit decode (as it's required for HDR, but that doesn't mean it's only supported in HDR) - but most won't support 4:2:2, only 4:2:0.

The Apple TV 4K is unusual in that it DOES support HEVC/h.265 hardware acceleration at 4:2:2 and 4:4:4 (as well as 4:2:0) and 10-bit - because that codec is used with those colour spaces by Apple for AirPlay Screen Mirroring (to avoid colour smearing on fine desktop detail) - and the ATV 4K can also software decode 1080p h.264 4:2:2. I haven't tested 4:2:2 UHD h.264 as I wouldn't use h.264 4:2:2 for UHD.

h.265/HEVC is recommended for UHD over h.264 as it's more efficient, delivering higher quality at lower bitrates, and has 10-bit support on consumer players, not just 8-bit (as with h.264). h.264/AVC is really only used for 8-bit HD these days as a final player format.

There's a big difference between shooting UHD in a 'camera codec' for post-production - where 4:2:2 h.264 10-bit (probably called X-AVC or similar?) is a camera codec format supported by NLEs like Prem Pro/Resolve/Avid etc. - and mastering a final playable edited file for consumer devices, where 4:2:0 h.265/HEVC is far more standard.

To play on your nVidia Shield TV I'd export your video edits in 4:2:0 h.265/HEVC 10-bit.

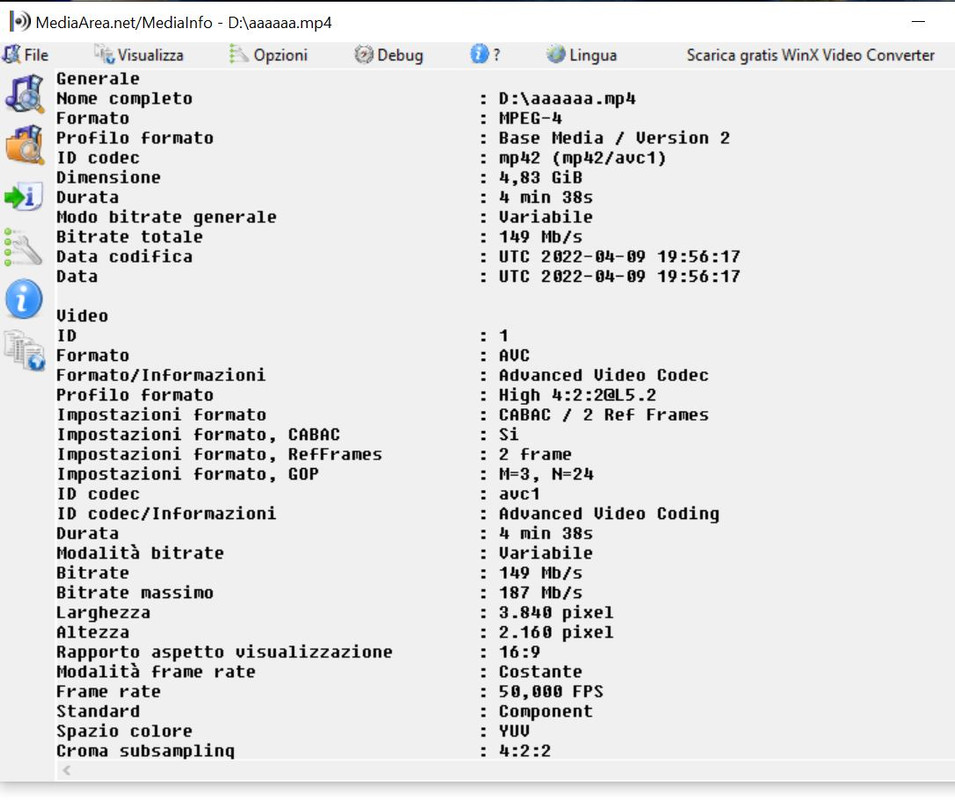

NB - that file also has a bit rate of ~150Mb/s - that too will push your Shield TV hard. For a final edited master, I doubt you'll see huge benefits above 50Mb/s with h.265/HEVC 4:2:0 at 2160p50 (and you will probably be fine with a bit lower than 50Mb/s if you do trial exports at various bitrates). I usually choose constant quality rather than constant bitrate for my 4:2:0 h.265 encodes - but I usually use Intel QuickSync h.265 encoding on an Intel Windows machine using ffmpeg for my 'consumer playable' exports.
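For anyone wanting to try a constant-quality 4:2:0 10-bit HEVC export with ffmpeg, here is a minimal sketch. It is NOT the QuickSync setup mentioned above: it swaps in the software `libx265` encoder, and the helper name, file names and the CRF value of 20 are assumptions chosen purely for illustration. It also assumes an ffmpeg build whose libx265 supports 10-bit.

```python
import subprocess

def hevc_420_10bit_cmd(src: str, dst: str, crf: int = 20) -> list[str]:
    """Build an ffmpeg command for a constant-quality 4:2:0 10-bit HEVC export.

    Uses the software libx265 encoder as a stand-in; a hardware encoder
    would need different encoder-specific options.
    """
    return [
        "ffmpeg",
        "-i", src,
        "-c:v", "libx265",            # software h.265/HEVC encoder
        "-crf", str(crf),             # constant quality rather than constant bitrate
        "-pix_fmt", "yuv420p10le",    # 4:2:0 chroma subsampling, 10-bit
        "-c:a", "copy",               # leave the audio untouched
        dst,
    ]

cmd = hevc_420_10bit_cmd("edit_master.mov", "shield_playable.mkv")
print(" ".join(cmd))
# To actually run it (requires ffmpeg on the PATH):
# subprocess.run(cmd, check=True)
```

Doing a few trial exports at different CRF values and checking the resulting bitrate is a reasonable way to find a setting that stays comfortably below what the player can handle.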

2022-04-10, 10:29

THANK YOU for the valuable information.

Out of curiosity: if I shoot UHD 4:2:2 with my camera, edit those 4:2:2 files, and then export two HEVC files, one UHD 4:2:2 and the other UHD 4:2:0, which of the two is better to watch?

I thank you again.

2022-04-11, 13:32

(2022-04-10, 10:29)FoxADRIANO Wrote: THANK YOU for the valuable information.

Out of curiosity: if I shoot UHD 4:2:2 with my camera, edit those 4:2:2 files, and then export two HEVC files, one UHD 4:2:2 and the other UHD 4:2:0, which of the two is better to watch?

I thank you again.

Apart from an Apple TV 4K you won't be able to play the 4:2:2 h.265/HEVC file on a consumer player AIUI. (AIUI the ATV 4K is unusual in supporting subsampling other than 4:2:0)

4:2:0 has lower vertical chroma resolution than 4:2:2. 4:2:0 halves the chroma resolution both horizontally and vertically, so the two are equal, whereas 4:2:2 halves it only horizontally and keeps full vertical chroma resolution (twice that of 4:2:0). You MAY see a small quality difference on some content when you look very closely. 4:2:2 makes sense in production, where you may need the benefit of the additional vertical chroma resolution for chroma-key or re-sizing a shot, or where you concatenate codecs and want to avoid 4:2:2<->4:2:0 conversion, which can cause problems if you do it multiple times in production.
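To put numbers on that, here is a small sketch that works out the size of each chroma plane for a UHD frame under the three common subsampling schemes. The function names are just for this example.

```python
# (horizontal divisor, vertical divisor) applied to the luma dimensions
SUBSAMPLING = {
    "4:4:4": (1, 1),   # full chroma resolution
    "4:2:2": (2, 1),   # half horizontal, full vertical
    "4:2:0": (2, 2),   # half horizontal, half vertical
}

def chroma_plane_size(width: int, height: int, scheme: str) -> tuple[int, int]:
    """Width and height of one chroma plane (Cb or Cr) for a given luma size."""
    h_div, v_div = SUBSAMPLING[scheme]
    return width // h_div, height // v_div

def samples_per_frame(width: int, height: int, scheme: str) -> int:
    """Total samples per frame: one luma plane plus two chroma planes."""
    cw, ch = chroma_plane_size(width, height, scheme)
    return width * height + 2 * cw * ch

for scheme in SUBSAMPLING:
    print(scheme, chroma_plane_size(3840, 2160, scheme),
          samples_per_frame(3840, 2160, scheme))
```

For 3840x2160 the chroma planes come out at 1920x2160 for 4:2:2 and 1920x1080 for 4:2:0, so a 4:2:0 frame carries three quarters of the samples of a 4:2:2 frame, with all of the saving coming from vertical chroma detail.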

However, every consumer video codec is based around 4:2:0 - I would be very, very surprised if you saw the difference between 4:2:2 and 4:2:0 final viewing copies on normal video content at normal viewing distances.

If you want to play your content on most UHD compatible player solutions I'd use UHD h.265/HEVC 10-bit 4:2:0 as my output codec. If you have a 10-bit SDR workflow then 10-bit SDR playback will be significantly higher quality than 8-bit (less banding on things like blue skies etc.)
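The banding point comes down to how many code values are available across a slow gradient. A simplified sketch, assuming a full-range signal (real video usually uses a narrower 'limited' range) and a hypothetical sky gradient that spans only 5% of the signal range across the width of a UHD frame:

```python
def band_width_px(bits: int, span_fraction: float, width_px: int) -> float:
    """Approximate width of each visible step in a smooth gradient.

    bits          - bit depth of the video (8 or 10)
    span_fraction - share of the full signal range the gradient covers
    width_px      - number of pixels the gradient stretches across
    """
    max_code = 2 ** bits - 1
    steps = max(1, round(max_code * span_fraction))  # distinct code values used
    return width_px / steps

for bits in (8, 10):
    print(bits, 2 ** bits, round(band_width_px(bits, 0.05, 3840), 1))
```

With these assumptions an 8-bit encode has 256 levels and each step in the sky is roughly 295 pixels wide, while 10-bit has 1024 levels and steps of about 75 pixels, which is much harder to see as distinct bands.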

(For universal playback - HD 4:2:0 h.264/AVC 8-bit is the most widespread codec playable on almost every HD player platform, but for UHD 4:2:0 h.265/HEVC 10-bit should be widely supported - both for SDR and HDR dynamic ranges)