6. SAMPLE SETTINGS PROFILES & PROFILE RULES

Note: Feel free to customize the settings within the limits of your graphics card. If color is your issue, consider buying a colorimeter and calibrating your display with a 3D LUT.

The settings posted represent my personal preferences. You may disagree, so don't assume these are the "best madVR settings" available. Some may want to use more shaders to create a sharper image, and others may use more artifact removal. Everyone has their own preference as to what looks good. When it comes to processing the image, the suggested settings are meant to err on the conservative side.

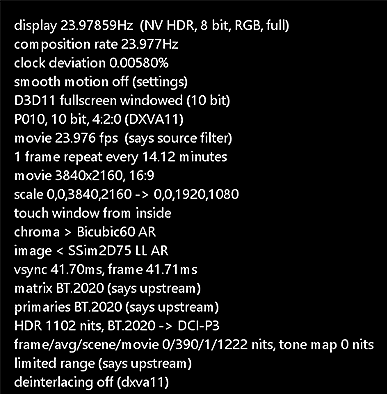

Summary of the rendering process:

Source

Note: The settings recommendations are separated by output resolution: 1080p or 4K UHD. The 1080p settings are presented first and 4K UHD afterwards.

So, with all of the settings laid out, let's move on to some settings profiles...

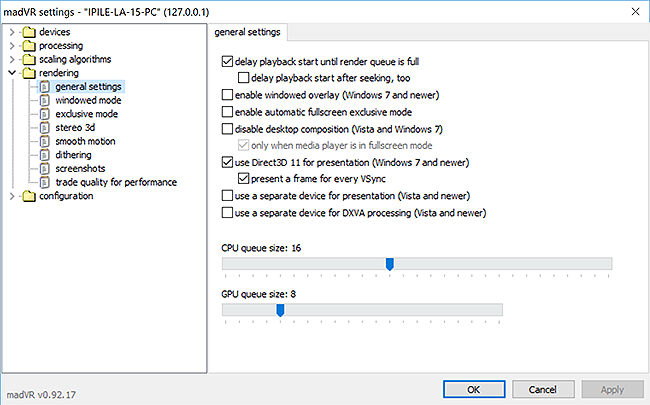

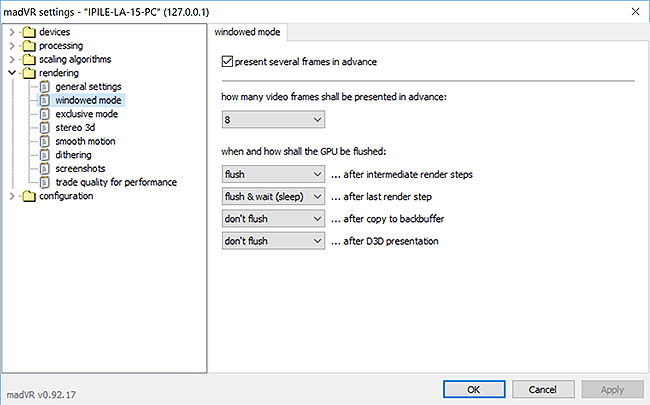

It is important to know your graphics card when using madVR, as the program relies heavily on this hardware. Due to the large performance variability in graphics cards and the breadth of possible madVR configurations, it can be difficult to recommend settings for specific GPUs. However, I'll attempt to provide a starting point for settings by using some examples with my personal hardware. The example below demonstrates the difference in madVR performance between an integrated graphics card and a dedicated gaming GPU.

I own a laptop with an Intel HD 3000 graphics processor and Sandy Bridge i7. madVR runs with settings similar to its defaults:

Integrated GPU 1080p:

- Chroma: Bicubic60 + AR

- Downscaling: Bicubic150 + LL + AR

- Image upscaling: Lanczos3 + AR

- Image doubling: Off

- Upscaling refinement: Off

- Artifact removal - Debanding: Off

- Artifact removal - Deringing: Off

- Artifact removal - Deblocking: Off

- Artifact removal - Denoising: Off

- Image enhancements: Off

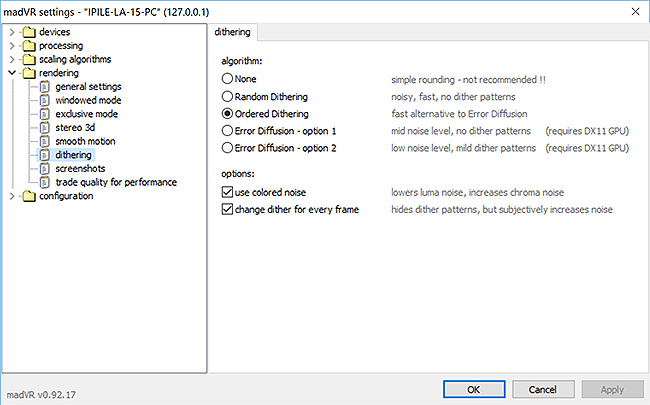

- Dithering: Ordered Dithering

I am upscaling primarily high-quality, 24 fps content to 1080p24. These settings are very similar to Intel DXVA rendering in Kodi, but add the quality benefits of madVR and offer a small subjective improvement.

I also owned an HTPC that combined an Nvidia GTX 750 Ti and a Core 2 Duo CPU.

Adding a dedicated GPU allows the flexibility to use more of everything: more demanding scaling algorithms, artifact removal, sharpening and high-quality dithering.

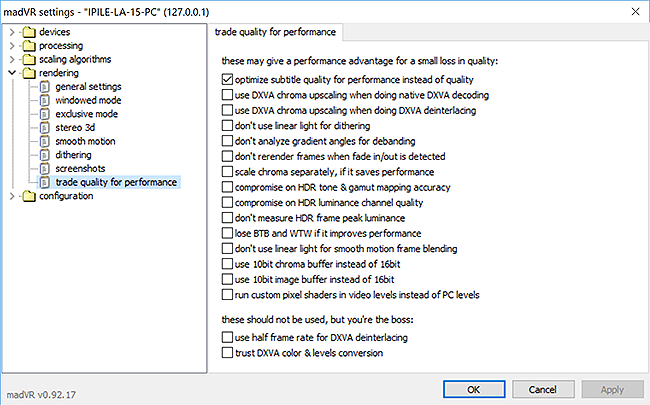

Settings assume all trade quality for performance checkboxes are unchecked save the one related to subtitles.

Given the flexibility of a gaming GPU, four different scenarios are outlined based on common sources:

Display: 1920 x 1080p

Scaling factor: Increase in vertical resolution or pixels per inch.

Resizes:

- 1080p -> 1080p

- 720p -> 1080p

- SD -> 1080p

- 4K UHD -> 1080p
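The "increase in pixels" and "scaling factor" figures quoted for each resize can be reproduced with simple arithmetic. Below is a minimal Python sketch; the function name `resize_stats` is only for illustration, and SD is assumed to be 640 x 480, as in the SD profile.

```python
def resize_stats(src_w, src_h, dst_w, dst_h):
    """Return (pixel_ratio, scaling_factor) for a resize.

    pixel_ratio    -- output pixel count divided by source pixel count
    scaling_factor -- output height divided by source height
    """
    pixel_ratio = (dst_w * dst_h) / (src_w * src_h)
    scaling_factor = dst_h / src_h
    return pixel_ratio, scaling_factor


sources = {
    "1080p": (1920, 1080),
    "720p": (1280, 720),
    "SD": (640, 480),
    "4K UHD": (3840, 2160),
}

for name, (w, h) in sources.items():
    pixels, factor = resize_stats(w, h, 1920, 1080)
    print(f"{name} -> 1080p: {pixels:g}x the pixels, scaling factor {factor:g}x")
```

Expressed as ratios, a native source comes out as 1x (no change, which the profiles list as 0) and a 4K UHD source as 0.5x, i.e. a 2x reduction.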

Profile: "1080p"

1080p -> 1080p

1920 x 1080 -> 1920 x 1080

Increase in pixels: 0

Scaling factor: 0

Native 1080p sources require basic processing. The settings to be concerned with are Chroma upscaling, which is necessary for all videos, and Dithering. The only upscaling taking place is the resizing of the subsampled chroma layer.

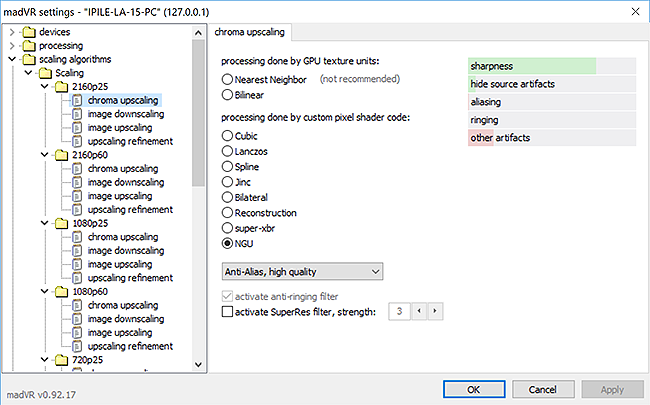

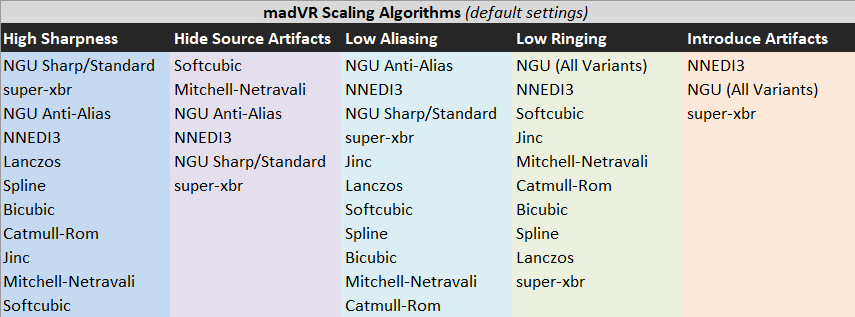

Chroma Upscaling: Doubles the subsampled chroma (the 2:0 of a 4:2:0 source) to match the native resolution of the luma layer (upscale to 4:4:4 and convert to RGB). Chroma upscaling is where the majority of your resources should go with native sources. My preference is for NGU Anti-Alias over NGU Sharp because it seems better-suited for upscaling the soft chroma layer. The sharp, black and white luma and soft chroma can often benefit from different treatment. It can be difficult to directly compare chroma upscaling algorithms without a good chroma upsampling test pattern. Reconstruction, NGU Sharp, NGU Standard and super-xbr100 are also good choices.

Comparison of Chroma Upscaling Algorithms

Read the following post before choosing a chroma upscaling algorithm

Image Downscaling: N/A.

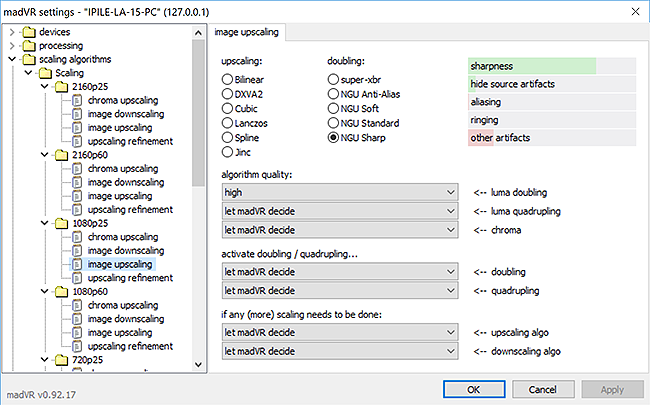

Image Upscaling: Set this to Jinc + AR in case some pixels are missing. This setting should be ignored, however, as there is no upscaling involved at 1080p.

Image Doubling: N/A.

Upscaling Refinement: N/A.

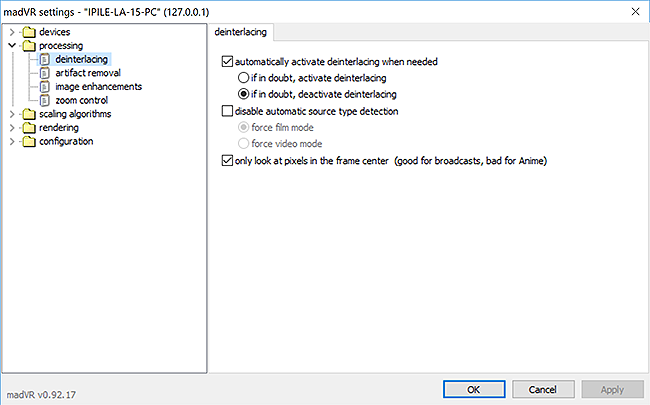

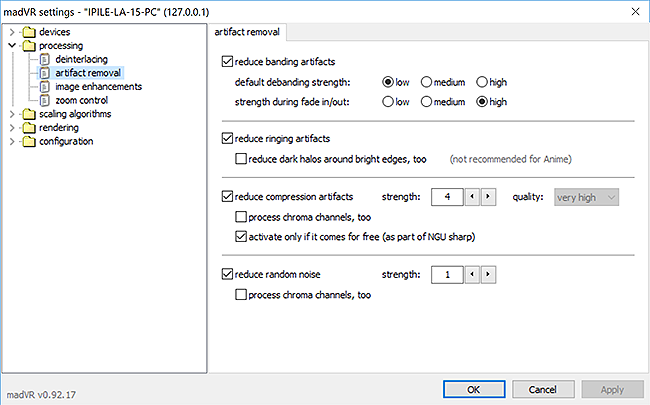

Artifact Removal: Artifact removal includes Debanding, Deringing, Deblocking and Denoising. I typically choose to leave Debanding enabled at a low value because it is hard to find 8-bit sources that don't display some form of color banding, even when the source is an original Blu-ray rip. Banding is a common artifact and madVR's debanding algorithm is fairly effective. To avoid removing image detail, a setting of low/medium or medium/medium is advisable. You might choose to disable this if you desire the sharpest image possible.

Deringing, Deblocking and Denoising are not typically general use settings. These types of artifacts are less common, or the artifact removal algorithm can be guilty of smoothing an otherwise clean source. If you want to use these algorithms with your worst cases, try using madVR's keyboard shortcuts. This will allow you to quickly turn the algorithm on and off with your keyboard when needed and all profiles will simply reset when the video is finished.

Used in small amounts, artifact removal can improve image quality without having a significant impact on image detail. Some choose to offset any loss of image sharpness by adding a small amount of sharpening shaders.

Deblocking is useful for cleaning up compressed video. Even sources that have undergone light compression can benefit from it without harming image detail when low values are used.

Deringing is very effective for any sources with noticeable edge enhancement, and Denoising will harm image detail but can often be the only way to remove bothersome video noise or film grain. Some may believe Deblocking, Deringing or Denoising are general use settings, while others may not.

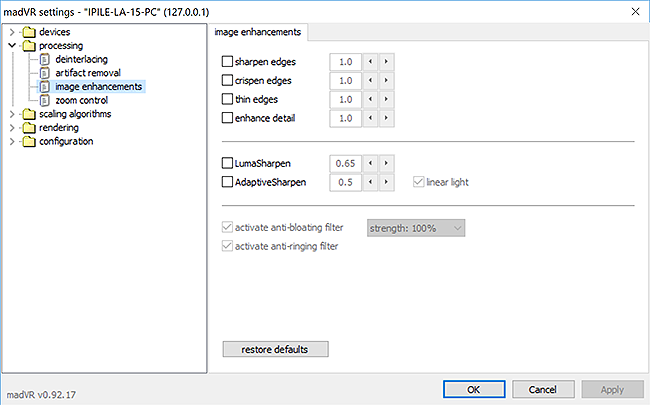

Image Enhancements: It should be unnecessary to apply sharpening shaders to the image as the source is already assumed to be of high quality. If your display is calibrated, the image you get should approximate the same image seen on the original mastering monitor. Adding some image enhancements may still be attractive for those who feel chroma upscaling alone is not doing enough to create a sharp picture and want more depth and texture detail.

Dithering: The last step before presentation. The difference between Ordered Dithering and Error Diffusion is quite small, especially if the bit depth is 8-bits or greater. But if you have the resources, you might as well use them, and Error Diffusion will produce a small quality improvement. The slight performance difference between Ordered Dithering and Error Diffusion is a way to save a few resources when you need them. You aren't supposed to see dithering, anyways.

1080p:

- Chroma: NGU Anti-Alias (high)

- Downscaling: SSIM 1D 100% + LL + AR

- Image upscaling: Jinc + AR

- Image doubling: Off

- Upscaling refinement: Off

- Artifact removal - Debanding: low/medium

- Artifact removal - Deringing: Off

- Artifact removal - Deblocking: Off

- Artifact removal - Denoising: Off

- Image enhancements: Off

- Dithering: Error Diffusion 2

Some enhancement can be applied to native sources with supersampling.

Supersampling involves doubling a source to twice its original size and then returning it to its original resolution. The chain would look like this: Image doubling -> Upscaling refinement (optional) -> Image downscaling. Doubling a source and reducing it to a smaller image can lead to a sharper image than what you started with without actually applying any sharpening to the image.

Chroma Upscaling: NGU Anti-Alias is selected. You may choose to use a higher quality level chroma upscaling setting than provided if your GPU is more powerful.

Image Downscaling: SSIM 1D + LL + AR + AB 100% is selected. It is best to use a sharp downscaler when supersampling to retain as much detail as possible from the larger doubled image. Either SSIM 1D or SSIM 2D is recommended as the downscaler. These algorithms are both very sharp and produce minimal ringing artifacts. SSIM 2D uses considerably more resources than SSIM 1D, but provides the benefit of mostly eliminating any ringing artifacts caused by image downscaling by using the softer Jinc downscaling as a guide. So SSIM 2D essentially downscales the image twice: through Jinc-based interpolation followed by resizing the image to a lower resolution with SSIM 2D.

Image Upscaling: N/A.

Image Doubling: Supersampling involves image doubling followed directly by image downscaling. NGU Sharp is selected to make the image as sharp as possible before downscaling. Supersampling must be manually chosen: image upscaling -> doubling <-- activate doubling: ...always - supersampling.

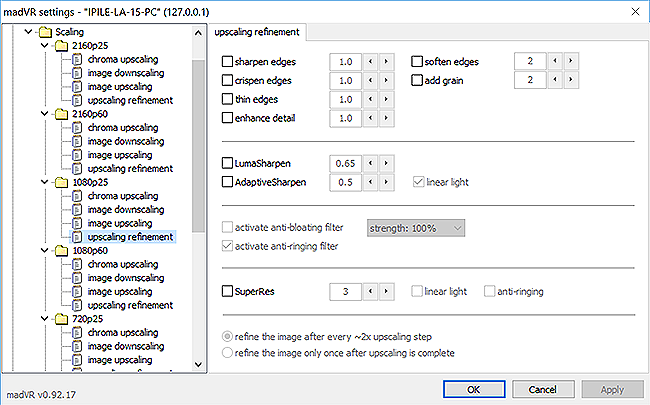

Upscaling Refinement: NGU Sharp is quite sharp, but you may want to add some extra sharpening to the doubled image; crispen edges is a good choice.

Artifact Removal: Debanding is set to low/medium.

Image Enhancements: soften edges is used at a low strength to make the edges of the image look more natural and less flat after image downscaling is applied.

Dithering: Error Diffusion 2 is selected.

1080p -> 2160p Supersampling (for newer GPUs):

- Chroma: NGU Anti-Alias (low)

- Downscaling: SSIM 1D 100% + LL + AR + AB 100%

- Image upscaling: Off

- Image doubling: NGU Sharp

- <-- Luma doubling: high

- <-- Luma quadrupling: let madVR decide (direct quadruple - NGU Sharp (low))

- <-- Chroma: let madVR decide (Bicubic60 + AR)

- <-- Doubling: ...always - supersampling

- <-- Quadrupling: let madVR decide (scaling factor 2.4x (or bigger))

- <-- Upscaling algo: let madVR decide (Bicubic60 + AR)

- <-- Downscaling algo: use "image downscaling" settings

- Upscaling refinement: soften edges (1)

- Artifact removal - Debanding: low/medium

- Artifact removal - Deringing: Off

- Artifact removal - Deblocking: Off

- Artifact removal - Denoising: Off

- Image enhancements: Off

- Dithering: Error Diffusion 2

If you want to avoid any kind of sharpening or enhancement of native sources, avoid supersampling and use the first profile. If you want the sharpening effect to be more noticeable, applying image enhancements to the native source will produce a greater sharpening effect than supersampling can provide.

Profile: "720p"

720p -> 1080p

1280 x 720 -> 1920 x 1080

Increase in pixels: 2.25x

Scaling factor: 1.5x

Image upscaling is introduced at 720p to 1080p. Upscaling the sharp luma channel is most important in resolving image detail, so the settings for Image upscaling, followed by Upscaling refinement, are most critical for upscaled sources.

Chroma Upscaling: NGU Anti-Alias is selected.

Image Downscaling: N/A.

Image Upscaling: Jinc + AR is the chosen image upscaler. We are upscaling in RGB directly from 720p -> 1080p.

Image Doubling: N/A.

Upscaling Refinement: SuperRes (1) is layered on top of Jinc to provide additional sharpness. This is important as upscaling alone will create a noticeably soft image. Note that sharpening is added from Upscaling refinement, so it is applied to the post-resized image.

Artifact Removal: Debanding is set to low/medium.

Image Enhancements: N/A.

Dithering: Error Diffusion 2 is selected.

720p Regular upscaling:

- Chroma: NGU Anti-Alias (medium)

- Downscaling: SSIM 1D 100% + LL + AR

- Image upscaling: Jinc + AR

- Image doubling: Off

- Upscaling refinement: SuperRes (1)

- Artifact removal - Debanding: low/medium

- Artifact removal - Deringing: Off

- Artifact removal - Deblocking: Off

- Artifact removal - Denoising: Off

- Image enhancements: Off

- Dithering: Error Diffusion 2

Image doubling is another, and often superior, approach to upscaling a 720p source. This will double the image (720p -> 1440p) and use Image downscaling to correct the slight overscale (1440p -> 1080p).

Chroma Upscaling: NGU Anti-Alias is selected. Lowering the value of chroma upscaling is an option when attempting to increase the quality of image doubling. Always try to maximize Luma doubling first, if possible. This is especially true if your display converts all 4:4:4 inputs to 4:2:2, since chroma upscaling could then be wasted by the display's processing. The larger quality improvements will come from improving the luma layer, not the chroma, and the luma will always retain its full resolution when it reaches the display.

Image Downscaling: N/A.

Image Upscaling: N/A.

Image Doubling: NGU Sharp is used to double the image. NGU Sharp is a staple choice for upscaling in madVR, as it produces the highest perceived resolution without oversharpening the image or usually requiring any enhancement from sharpening shaders.

Image doubling performs a 2x resize combined with image downscaling.

To calibrate image doubling, select image upscaling -> doubling -> NGU Sharp and use the drop-down menus. Set Luma doubling to its maximum value (very high) and everything else to let madVR decide.

If the maximum luma quality value is too aggressive, reduce Luma doubling until rendering times sit comfortably under the movie frame interval (about 35-37 ms for a 24 fps source, whose frame interval is roughly 41.7 ms). Leave the other settings to madVR. Luma quality always comes first and is most important.
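To put numbers on the frame interval, here is a small Python sketch. The `headroom` fraction of 0.9 is my own illustrative assumption, not a madVR setting; it simply expresses "leave some margin below the frame interval".

```python
def frame_interval_ms(fps):
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / fps


def fits_budget(render_ms, fps, headroom=0.9):
    """True if the measured rendering time leaves some margin.

    headroom is a hypothetical safety fraction of the frame interval,
    chosen for illustration only.
    """
    return render_ms <= frame_interval_ms(fps) * headroom


for fps in (23.976, 24, 50, 59.94):
    print(f"{fps:g} fps -> frame interval {frame_interval_ms(fps):.2f} ms")

print(fits_budget(36.0, 24))  # rendering time of 36 ms on a 24 fps source
print(fits_budget(40.0, 24))  # 40 ms is too close to the 41.67 ms interval
```

The rendering time itself is read from the madVR OSD; the sketch only shows how little room a 60 fps source leaves compared to 24 fps.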

Think of let madVR decide as madshi's expert recommendations for each upscaling scenario. This will help you avoid wasting resources on settings which do very little to improve image quality. So, let madVR decide. When you become more advanced, you may consider manually adjusting these settings, but only expect small improvements. In this case, I've added SSIM 1D for downscaling.

Luma & Chroma are upscaled separately:

Luma: RGB -> Y'CbCr 4:4:4 -> Y -> 720p -> 1440p -> 1080p

Chroma: RGB -> Y'CbCr 4:4:4 -> CbCr -> 720p -> 1080p

Keep in mind, NGU very high is three times slower than NGU high while only producing a small improvement in image quality. Attempting to use a setting of very high at all costs without considering GPU stress or high rendering times is not always a good idea. NGU very high is the best way to upscale, but only if you can accommodate the considerable performance hit. Higher values of NGU will cause fine detail to be slightly more defined, but the overall appearance produced by each type (Anti-Alias, Soft, Standard, Sharp) will remain identical through each quality level.

Upscaling Refinement: NGU Sharp shouldn't require any added sharpening. If you want the image to be sharper, you can check some options here such as crispen edges or sharpen edges.

Artifact Removal: Debanding is set to low/medium.

Image Enhancements: N/A.

Dithering: Error Diffusion 2 is selected.

720p Image doubling:

- Chroma: NGU Anti-Alias (medium)

- Downscaling: SSIM 1D 100% + LL + AR

- Image upscaling: Off

- Image doubling: NGU Sharp

- <-- Luma doubling: high

- <-- Luma quadrupling: let madVR decide (direct quadruple - NGU Sharp (high))

- <-- Chroma: let madVR decide (Bicubic60 + AR)

- <-- Doubling: let madVR decide (scaling factor 1.2x (or bigger))

- <-- Quadrupling: let madVR decide (scaling factor 2.4x (or bigger))

- <-- Upscaling algo: let madVR decide (Bicubic60 + AR)

- <-- Downscaling algo: SSIM 1D 100 AR Linear Light

- Upscaling refinement: Off

- Artifact removal - Debanding: low/medium

- Artifact removal - Deringing: Off

- Artifact removal - Deblocking: Off

- Artifact removal - Denoising: Off

- Image enhancements: Off

- Dithering: Error Diffusion 2

Profile: "SD"

SD -> 1080p

640 x 480 -> 1920 x 1080

Increase in pixels: 6.75x

Scaling factor: 2.25x

By the time SD content is reached, the scaling factor starts to become quite large (2.25x). Here, the image becomes soft due to the errors introduced by upscaling. Countering this soft appearance is possible by introducing the more sophisticated image upscaling provided by madVR's image doubling. Image doubling does just that: it takes the full resolution luma and chroma information and scales it by factors of two to reach the desired resolution (2x for a double and 4x for a quadruple). If larger than needed, the result is interpolated down to the target.

Doubling a 720p source to 1080p involves overscaling by 0.5x and downscaling back to the target resolution. Improvements in image quality may go unnoticed in this case. However, image doubling applied to larger resizes of 540p to 1080p or 1080p to 2160p will, in most cases, result in the highest-quality image.
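The double-then-correct chain can be sketched in a few lines of Python. The 1.2x and 2.4x thresholds are the "let madVR decide" values shown in the profile listings; the function itself is only my rough model of the decision, not madVR's actual logic.

```python
def doubling_chain(src_h, dst_h, double_at=1.2, quadruple_at=2.4):
    """Return the list of luma heights a source passes through.

    double_at / quadruple_at mirror the "let madVR decide" thresholds
    from the profile listings; treat the rest as an approximation.
    """
    factor = dst_h / src_h
    if factor >= quadruple_at:
        doubled = src_h * 4          # direct quadruple
    elif factor >= double_at:
        doubled = src_h * 2          # single double
    else:
        # Too small a resize for doubling: plain scaling (or nothing).
        return [src_h] if src_h == dst_h else [src_h, dst_h]

    chain = [src_h, doubled]
    if doubled != dst_h:
        # Overscaled results are downscaled, underscaled ones upscaled.
        chain.append(dst_h)
    return chain


for src in (720, 480, 540):
    print(" -> ".join(f"{h}p" for h in doubling_chain(src, 1080)))
```

A 540p source lands exactly on 1080p after one double, which is why the exact 2x resizes need no corrective scaling step afterwards.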

Chroma Upscaling: NGU Anti-Alias is selected.

Image Downscaling: N/A.

Image Upscaling: N/A.

Image Doubling: NGU Sharp is the selected image doubler.

Luma & Chroma are upscaled separately:

Luma: RGB -> Y'CbCr 4:4:4 -> Y -> 480p -> 960p -> 1080p

Chroma: RGB -> Y'CbCr 4:4:4 -> CbCr -> 480p -> 1080p

Upscaling Refinement: NGU Sharp shouldn't require any added sharpening. If you want the image to be sharper, you can check some options here such as crispen edges or sharpen edges. If you find the image looks unnatural with NGU Sharp, try adding some grain with add grain or using another scaler such as NGU Anti-Alias or super-xbr100.

Artifact Removal: Debanding is set to low/medium.

Image Enhancements: N/A.

Dithering: Error Diffusion 2 is selected.

SD Image doubling:

- Chroma: NGU Anti-Alias (medium)

- Downscaling: SSIM 1D 100% + LL + AR

- Image upscaling: Off

- Image doubling: NGU Sharp

- <-- Luma doubling: high

- <-- Luma quadrupling: let madVR decide (direct quadruple - NGU Sharp (high))

- <-- Chroma: let madVR decide (Bicubic60 + AR)

- <-- Doubling: let madVR decide (scaling factor 1.2x (or bigger))

- <-- Quadrupling: let madVR decide (scaling factor 2.4x (or bigger))

- <-- Upscaling algo: Jinc AR

- <-- Downscaling algo: let madVR decide (Bicubic150 + LL + AR)

- Upscaling refinement: Off

- Artifact removal - Debanding: low/medium

- Artifact removal - Deringing: Off

- Artifact removal - Deblocking: Off

- Artifact removal - Denoising: Off

- Image enhancements: Off

- Dithering: Error Diffusion 2

Profile: "4K UHD to 1080p"

2160p -> 1080p

3840 x 2160 -> 1920 x 1080

Decrease in pixels: 4x

Scaling factor: -2x

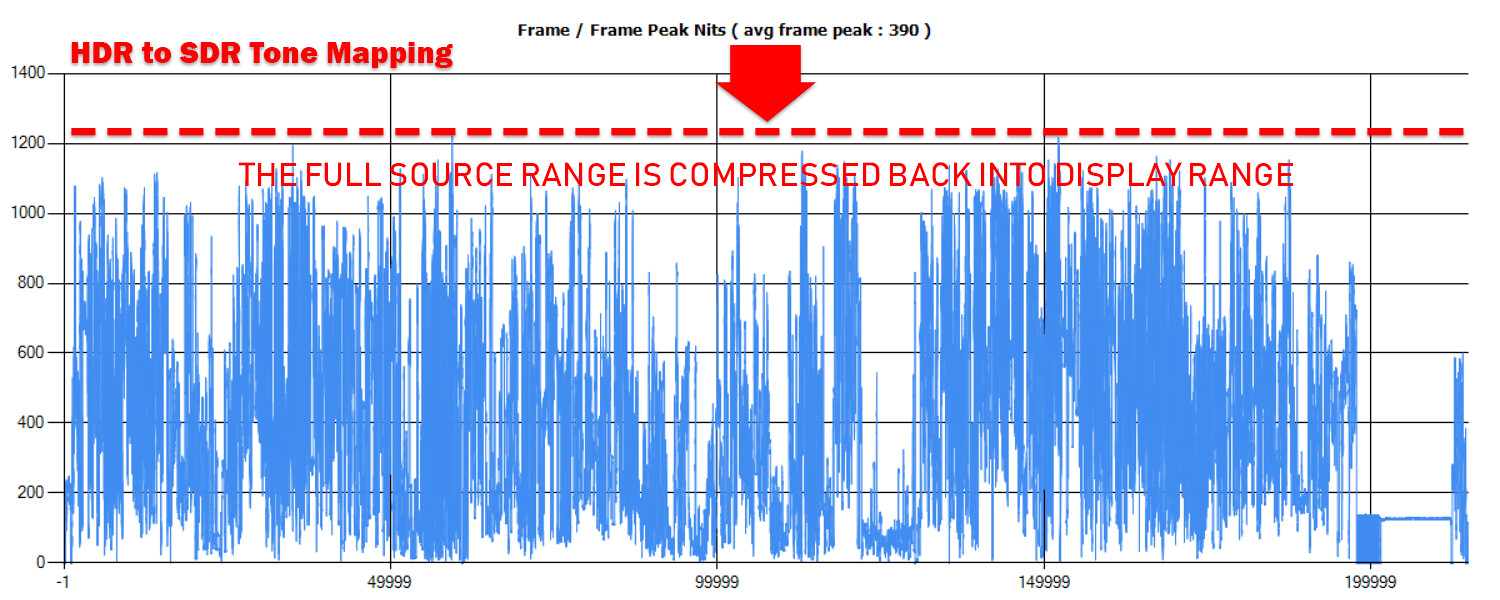

The last 1080p profile is for the growing number of people who want to watch 4K UHD content on a 1080p display. madVR offers a high-quality HDR to SDR conversion that can make watching HDR content palatable and attractive on an SDR display. This will apply to many that have put off upgrading to a 4K UHD display for various reasons. HDR to SDR is intended to replace the HDR picture mode of an HDR display. The conversion from HDR BT.2020/DCI-P3 to SDR BT.709 is excellent and perfectly matches the 1080p Blu-ray in many cases if they were mastered from the same source.

The example graphics card is a GTX 1050 Ti outputting to an SDR display calibrated to 150 nits.

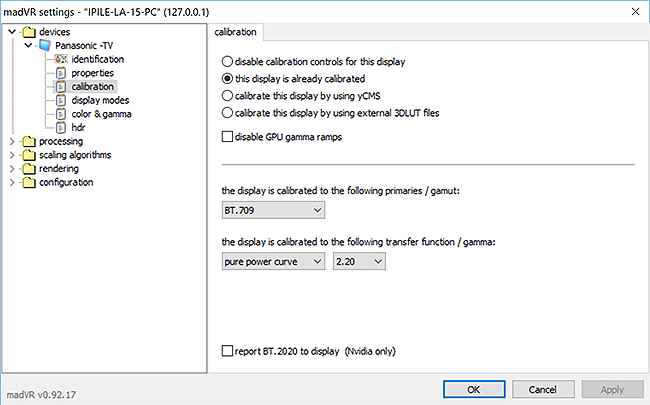

madVR is set to the following:

primaries / gamut: BT.709

transfer function / gamma: pure power curve 2.40

Note: The transfer function / gamma setting only applies in madVR when HDR is converted to SDR and may need some adjustment.

Chroma Upscaling: Bicubic60 + AR is selected. Chroma upscaling to 3840 x 2160 before image downscaling is generally a waste of resources. If you check "scale chroma separately, if it saves performance" under trade quality for performance, chroma upscaling is disabled because the native resolution of the chroma layer is already 1080p. This is exactly what you should do. The performance savings will allow you to use higher values for image downscaling.
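Why the chroma layer of a 4K UHD source is "already 1080p" follows directly from 4:2:0 subsampling, which halves the chroma planes in both dimensions. A minimal Python sketch (the helper name `chroma_plane_size` is made up for this example):

```python
def chroma_plane_size(width, height, subsampling="4:2:0"):
    """Return the (width, height) of each chroma plane.

    4:4:4 keeps full resolution, 4:2:2 halves the width only,
    4:2:0 halves both width and height.
    """
    divisors = {"4:4:4": (1, 1), "4:2:2": (2, 1), "4:2:0": (2, 2)}
    div_x, div_y = divisors[subsampling]
    return width // div_x, height // div_y


print(chroma_plane_size(3840, 2160))  # chroma of a 4K UHD 4:2:0 source
print(chroma_plane_size(1920, 1080))  # chroma of a 1080p 4:2:0 source
```

So when the target is 1080p, the chroma planes of a 4K UHD source need no resizing at all, while the chroma of a native 1080p source still has to be doubled.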

Image Downscaling: SSIM 2D + LL + AR + AB 100% is selected. Image downscaling can also be a drag on performance but is obviously necessary when reducing from 4K UHD to 1080p. SSIM 2D is the sharpest image downscaler in madVR and the best choice to preserve detail from the larger 4K UHD source. SSIM 1D and Bicubic150 are also good, sharp downscalers. DXVA2 is the fastest (and lowest quality) option.

Image Upscaling: N/A.

Image Doubling: N/A.

Upscaling Refinement: N/A.

Artifact Removal: Artifact removal is disabled. The source is assumed to be an original high-quality, 4K UHD rip.

Some posterization can be caused by tone mapping compression. However, this cannot be detected or addressed by madVR's artifact removal. I recommend disabling debanding for 4K UHD content as 10-bit HEVC should take care of most source banding issues.

Image Enhancements: N/A

Dithering: Error Diffusion 2 is selected. Reducing a 10-bit source to 8-bits necessitates high-quality dithering and the Error Diffusion algorithms are the best dithering algorithms available.
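To illustrate why dithering matters when going from 10-bit to 8-bit, here is a deliberately simplified Python sketch. It is a one-dimensional toy that pushes each pixel's rounding error onto the next pixel; it is not madVR's Error Diffusion algorithm, which I assume to be considerably more sophisticated.

```python
import math


def truncate_row(row10):
    """Drop the two least significant bits of each 10-bit value (no dithering)."""
    return [v >> 2 for v in row10]


def error_diffuse_row(row10):
    """Quantize 10-bit values to 8-bit, carrying the rounding error forward."""
    out = []
    carried = 0.0
    for v in row10:
        wanted = v / 4.0 + carried            # ideal 8-bit value plus leftover error
        q = int(math.floor(wanted + 0.5))     # round to the nearest 8-bit step
        q = max(0, min(255, q))               # clamp to the 8-bit range
        carried = wanted - q                  # error passed on to the next pixel
        out.append(q)
    return out


row = [513] * 8                               # a flat 10-bit shade, ideally 128.25 in 8-bit
plain = truncate_row(row)
dithered = error_diffuse_row(row)
print(plain, sum(plain) / len(plain))
print(dithered, sum(dithered) / len(dithered))
```

Truncation collapses the shade to a flat 128, while the diffused row mixes 128 and 129 so that its average stays at 128.25. That preserved average is what hides banding in smooth gradients.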

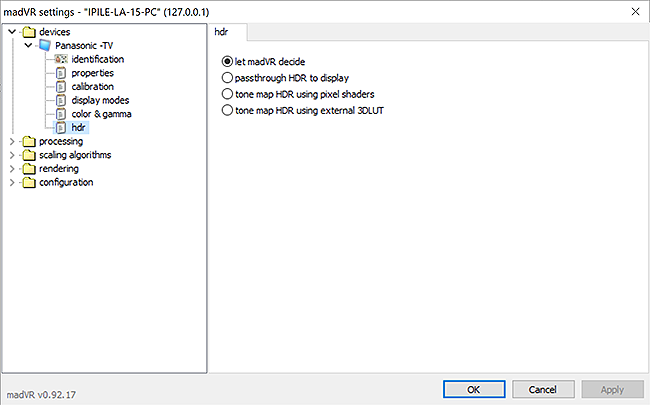

HDR: tone map HDR using pixel shaders

target peak nits: 275 nits. The target nits value can be thought of as a dynamic range slider. You increase it to preserve the high dynamic range and contrast of the source at the expense of making the image darker, and decrease it to create brighter images at the expense of compressing or clipping the source contrast. If this value is set too low, the gamma will become raised and the image will end up washed out. A good static value should provide a middle ground for sources with either a high or low dynamic range.

HDR to SDR Tone Mapping Explained

tone mapping curve: BT.2390.

color tweaks for fire & explosions: disabled. When enabled, bright reds and oranges are shifted towards yellow to compensate for changes in the appearance of fire and explosions caused by tone mapping. This hue correction is meant to improve the appearance of fire and explosions alone, but applies to any scenes with bright red/orange pixels. I find there are more bright reds and oranges in a movie that aren't related to fire or explosions and prefer to have them appear as red as they were encoded. So I prefer to disable this shift towards yellow.

highlight recovery strength: medium. You run the risk of slightly overcooking the image by enabling this setting, but tone mapping can often leave the image appearing overly flat in spots due to compression caused by the roll-off, so any help with texture detail is welcome. It is also a huge performance hog. I prefer medium as it seems most natural without giving the image a sharpened appearance. Higher values will make compressed portions of the image appear sharper, but they also invite the possibility of introducing ringing artifacts from aggressive enhancement or simply making the image appear unnatural.

highlight recovery strength should be set to none for 4K 60 fps sources. This shader is simply too expensive for 60 fps content.

measure each frame's peak luminance: checked.

Note: The trade quality for performance checkbox "compromise on tone & gamut mapping accuracy" should be unchecked. The quality of tone mapping goes down considerably when this is enabled, so avoid using it if possible. It should only be considered a last resort.

4K UHD to 1080p Downscaling:

- Chroma: Bicubic60 + AR

- Downscaling: SSIM 2D 100% + LL + AR + AB 100%

- Image upscaling: Jinc + AR

- Image doubling: Off

- Upscaling refinement: Off

- Artifact removal - Debanding: Off

- Artifact removal - Deringing: Off

- Artifact removal - Deblocking: Off

- Artifact removal - Denoising: Off

- Image enhancements: Off

- Dithering: Error Diffusion 2

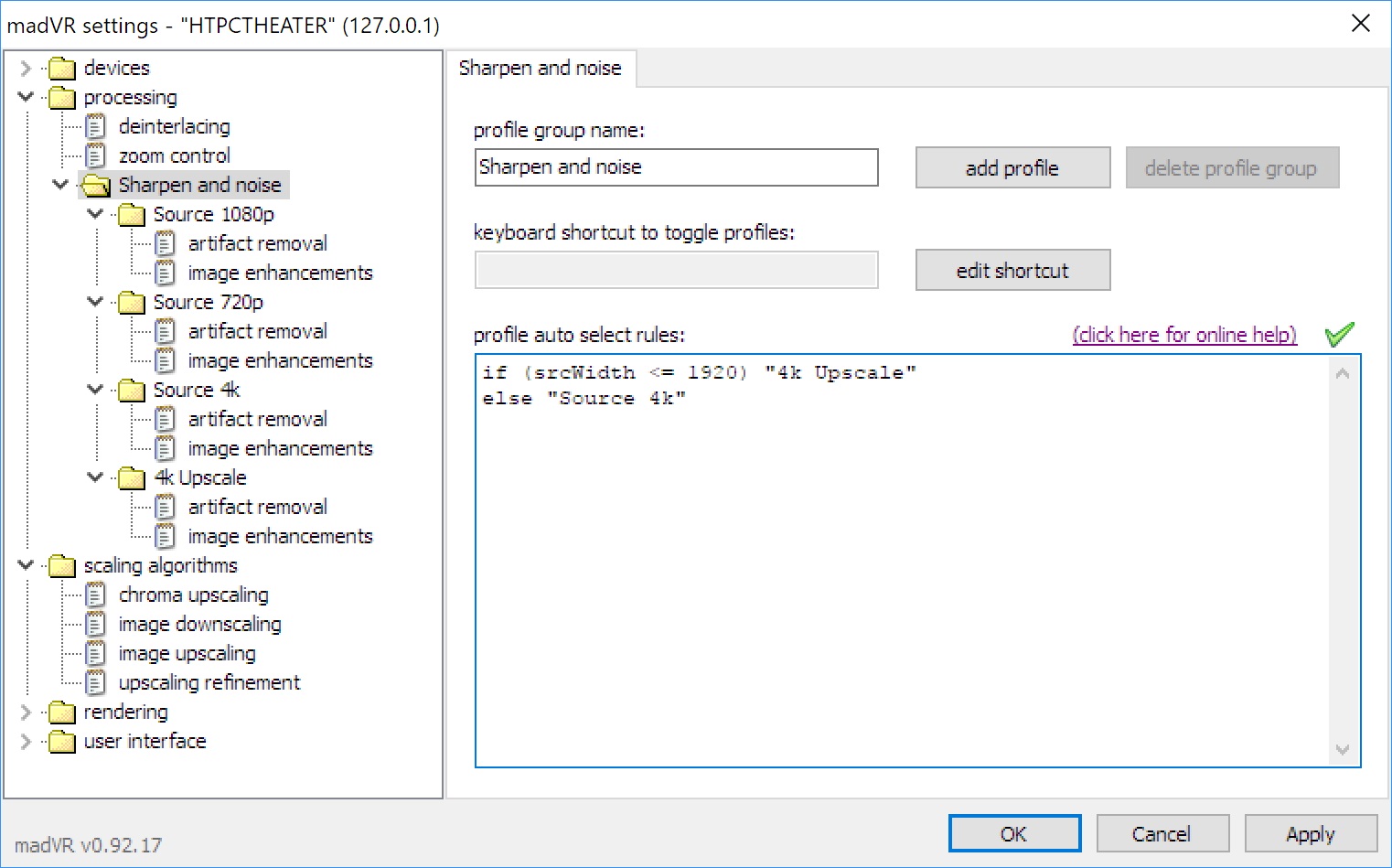

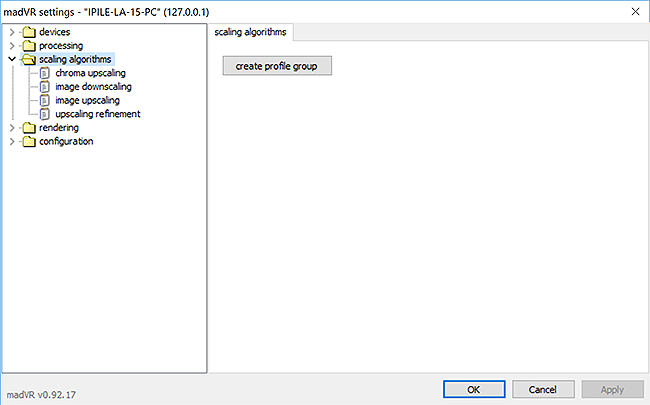

Creating madVR Profiles

Now we will translate each profile into a resolution profile with profile rules.

Add this code to each profile group:

if (srcHeight > 1080) "2160p"

else if (srcWidth > 1920) "2160p"

else if (srcHeight > 720) and (srcHeight <= 1080) "1080p"

else if (srcWidth > 1280) and (srcWidth <= 1920) "1080p"

else if (srcHeight > 576) and (srcHeight <= 720) "720p"

else if (srcWidth > 960) and (srcWidth <= 1280) "720p"

else if (srcHeight <= 576) and (srcWidth <= 960) "SD"
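To sanity-check which profile a given file will land in, the rule chain above can be mirrored in Python. This is just a test harness of my own; madVR evaluates the rules itself, and the argument names are chosen to match `srcWidth` and `srcHeight`.

```python
def resolution_profile(src_width, src_height):
    """Mirror of the profile rules: the first matching test wins."""
    if src_height > 1080:
        return "2160p"
    elif src_width > 1920:
        return "2160p"
    elif 720 < src_height <= 1080:
        return "1080p"
    elif 1280 < src_width <= 1920:
        return "1080p"
    elif 576 < src_height <= 720:
        return "720p"
    elif 960 < src_width <= 1280:
        return "720p"
    elif src_height <= 576 and src_width <= 960:
        return "SD"
    return None  # not reachable: the tests above cover every size


samples = [(3840, 1600), (1920, 1080), (1916, 1080), (1280, 536), (720, 576)]
for width, height in samples:
    print(f"{width} x {height} -> {resolution_profile(width, height)}")
```

The 1280 x 536 sample shows why the width tests are there: a cropped widescreen 720p encode is only 536 lines tall, so a height test alone would file it under SD.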

deintFps (the source frame rate after deinterlacing) is another factor on top of the source resolution that greatly impacts the load placed on madVR. Doubling the frame rate, for example, doubles the demands placed on madVR. Profile rules such as (deintFps <= 25) and (deintFps > 25) may be combined with srcWidth and srcHeight to create additional profiles.

A more "fleshed-out" set of profiles incorporating the source frame rate might look like this:

- "2160p25"

- "2160p60"

- "1080p25"

- "1080p60"

- "720p25"

- "720p60"

- "SD25"

- "SD60"

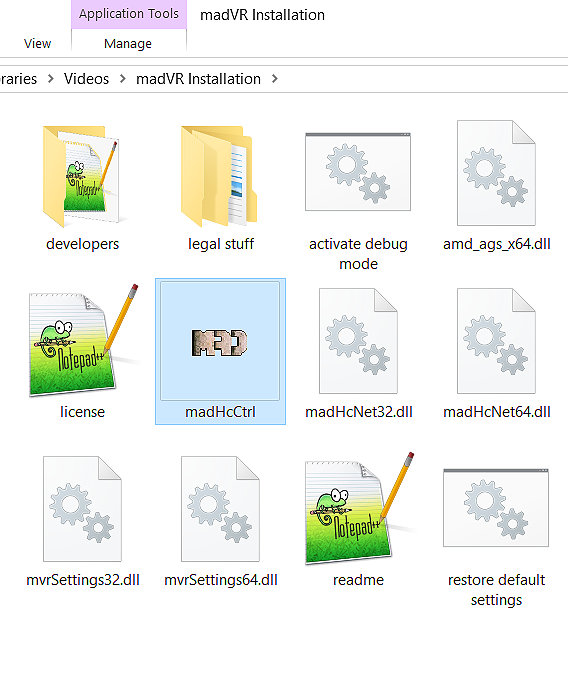

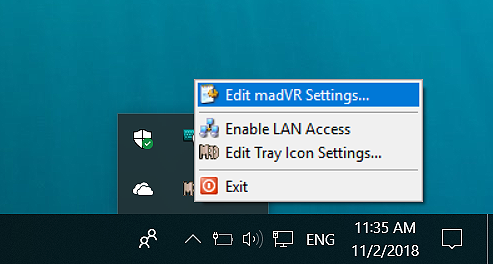

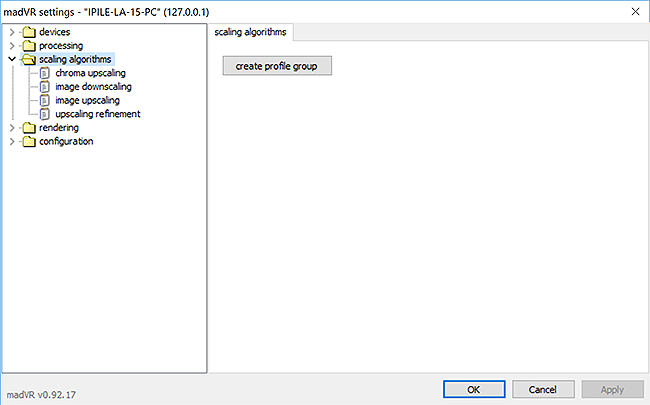

Click on scaling algorithms. Create a new folder by selecting create profile group.

Each profile group offers a choice of settings to include.

Select all items, and name the new folder "Scaling."

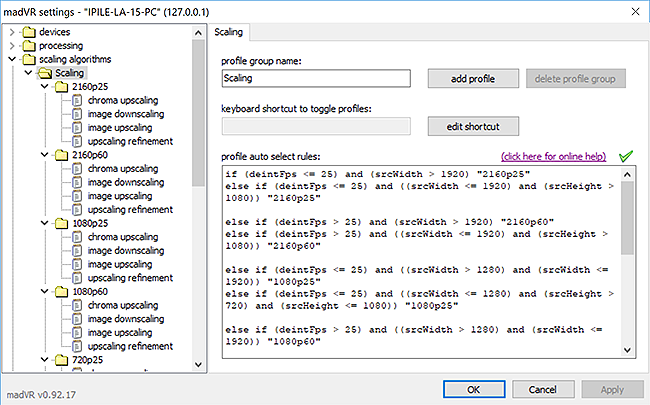

Select the Scaling folder. Using add profile, create eight profiles.

Name each profile: 2160p25, 2160p60, 1080p25, 1080p60, 720p25, 720p60, 576p25, 576p60.

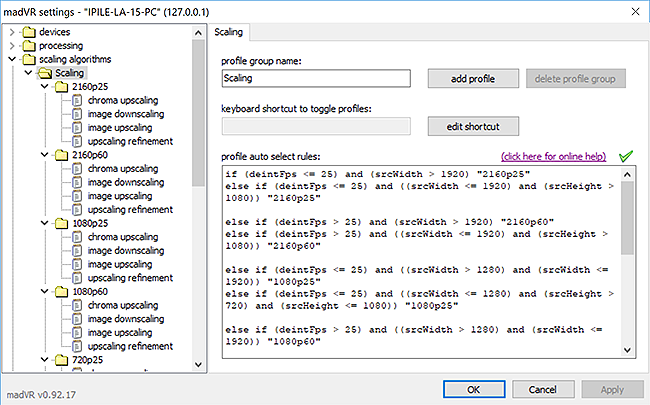

Copy and paste the code below into Scaling:

if (deintFps <= 25) and (srcHeight > 1080) "2160p25"

else if (deintFps <= 25) and (srcWidth > 1920) "2160p25"

else if (deintFps > 25) and (srcHeight > 1080) "2160p60"

else if (deintFps > 25) and (srcWidth > 1920) "2160p60"

else if (deintFps <= 25) and ((srcHeight > 720) and (srcHeight <= 1080)) "1080p25"

else if (deintFps <= 25) and ((srcWidth > 1280) and (srcWidth <= 1920)) "1080p25"

else if (deintFps > 25) and ((srcHeight > 720) and (srcHeight <= 1080)) "1080p60"

else if (deintFps > 25) and ((srcWidth > 1280) and (srcWidth <= 1920)) "1080p60"

else if (deintFps <= 25) and ((srcHeight > 576) and (srcHeight <= 720)) "720p25"

else if (deintFps <= 25) and ((srcWidth > 960) and (srcWidth <= 1280)) "720p25"

else if (deintFps > 25) and ((srcHeight > 576) and (srcHeight <= 720)) "720p60"

else if (deintFps > 25) and ((srcWidth > 960) and (srcWidth <= 1280)) "720p60"

else if (deintFps <= 25) and ((srcWidth <= 960) and (srcHeight <= 576)) "576p25"

else if (deintFps > 25) and ((srcWidth <= 960) and (srcHeight <= 576)) "576p60"
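The fourteen rules are really two independent decisions multiplied together: a resolution bucket and a frame rate bucket. The Python sketch below rebuilds the same profile names that way. It is only a model for checking the logic; whether madVR's rule syntax accepts a similarly condensed form is not something I have verified here.

```python
def resolution_bucket(src_width, src_height):
    """Resolution part of the profile name (same outcome as the long rule chain)."""
    if src_height > 1080 or src_width > 1920:
        return "2160p"
    if src_height > 720 or src_width > 1280:
        return "1080p"
    if src_height > 576 or src_width > 960:
        return "720p"
    return "576p"


def fps_bucket(deint_fps):
    """Frame rate part of the profile name: 25 fps and below vs. above."""
    return "25" if deint_fps <= 25 else "60"


def scaling_profile(src_width, src_height, deint_fps):
    return resolution_bucket(src_width, src_height) + fps_bucket(deint_fps)


print(scaling_profile(1920, 1080, 23.976))
print(scaling_profile(1280, 720, 59.94))
print(scaling_profile(720, 480, 29.97))
```

Note that a 29.97 fps source falls into the "60" bucket, since anything above 25 fps is treated as high frame rate.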

A green check mark should appear above the box to indicate the profiles are correctly named and no code conflicts exist.

Additional profile groups must be created for processing and rendering.

Note: The use of eight profiles may be unnecessary for other profile groups. For instance, if I wanted image enhancements (under processing) to only apply to 1080p content, two folders would be required:

if (srcHeight > 720) and (srcHeight <= 1080) "1080p"

else if (srcWidth > 1280) and (srcWidth <= 1920) "1080p"

else "Other"

How to Configure madVR Profile Rules

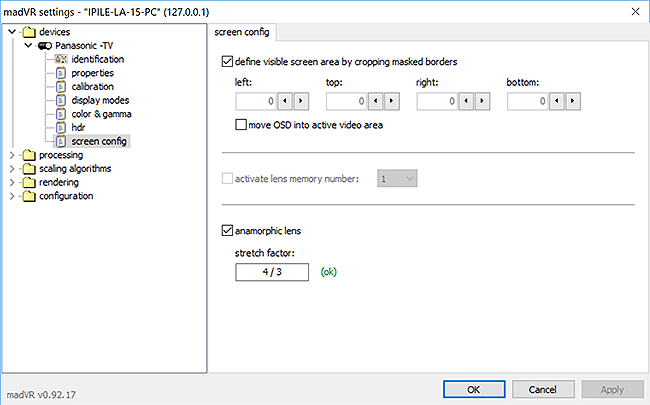

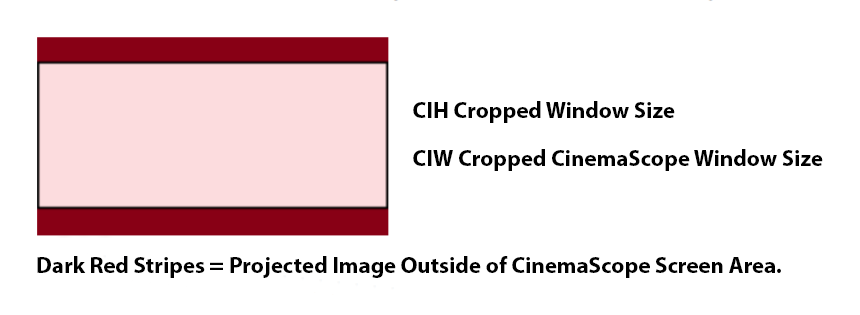

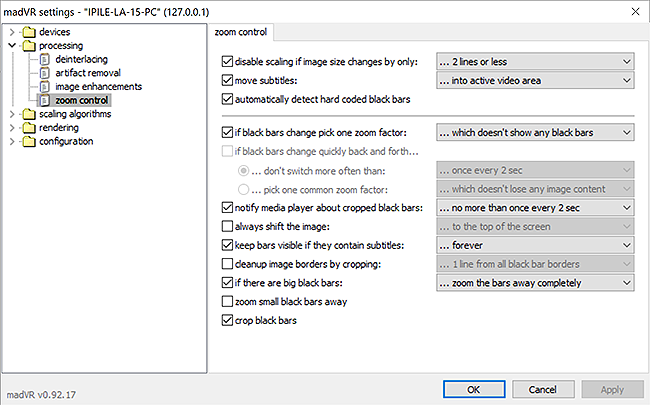

Disabling Image upscaling for Cropped Videos:

You may encounter some 1080p or 2160p videos cropped just short of their original size (e.g., width = 1916). Those few missing pixels will put an abnormal strain on madVR as it tries to resize to the original display resolution. The zoom control section of the madVR control panel contains a setting to disable image upscaling if the video falls within a certain range (e.g., 10 lines or less). Disabling scaling adds a few black pixels to the video and prevents the image upscaling algorithm from resizing the image. This may prevent cropped videos from pushing rendering times over the frame interval.
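As a rough model of that setting, the Python sketch below works out how far a video would have to grow to fill the display and skips scaling when the change is tiny. The function names and the way the size change is measured are my own assumptions; madVR's internal check may well differ.

```python
def fitted_size(src_w, src_h, dst_w, dst_h):
    """Size of the video after an aspect-preserving fit to the display."""
    scale = min(dst_w / src_w, dst_h / src_h)
    return round(src_w * scale), round(src_h * scale)


def skip_scaling(src_w, src_h, dst_w, dst_h, max_lines=10):
    """True when the fit would change the image by max_lines or less.

    max_lines stands in for the "10 lines or less" style option;
    treat the comparison itself as an illustrative guess.
    """
    fit_w, fit_h = fitted_size(src_w, src_h, dst_w, dst_h)
    change = max(abs(fit_w - src_w), abs(fit_h - src_h))
    return 0 < change <= max_lines


print(skip_scaling(1916, 796, 1920, 1080))   # cropped 1080p encode
print(skip_scaling(1280, 720, 1920, 1080))   # genuine 720p upscale
```

For the 1916 x 796 example, skipping the resize leaves four columns of the display black instead of running the full upscaling chain for a 0.2% size increase.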

Display: 3840 x 2160p

Let's repeat this process, this time assuming the display resolution is 3840 x 2160p (4K UHD). Two graphics cards will be used for reference: a Medium-level card such as the GTX 1050 Ti, and a High-level card similar to a GTX 1080 Ti. Again, the source is assumed to be of high quality with a frame rate of 24 fps.

Scaling factor: Increase in vertical resolution or pixels per inch.

Resizes:

- 2160p -> 2160p

- 1080p -> 2160p

- 720p -> 2160p

- SD -> 2160p

Profile: "2160p"

2160p -> 2160p

3840 x 2160 -> 3840 x 2160

Increase in pixels: 0

Scaling factor: 0

This profile is identical in appearance to that for a 1080p display. Without image upscaling, the focus is on settings for Chroma upscaling, which is necessary for all videos, and Dithering. The only upscaling taking place is the resizing of the subsampled chroma layer.

Chroma Upscaling: Doubles the subsampled chroma (the 2:0 of a 4:2:0 source) to match the native resolution of the luma layer (upscale to 4:4:4 and convert to RGB). Chroma upscaling is where the majority of your resources should go with native sources. My preference is for NGU Anti-Alias over NGU Sharp because it seems better-suited for upscaling the soft chroma layer. The sharp, black and white luma and soft chroma can often benefit from different treatment. It can be difficult to directly compare chroma upscaling algorithms without a good chroma upsampling test pattern. Reconstruction, NGU Sharp, NGU Standard and super-xbr100 are also good choices.

Comparison of Chroma Upscaling Algorithms

Read the following post before choosing a chroma upscaling algorithm

Image Downscaling: N/A.

Image Upscaling: Set this to Jinc + AR in case some pixels are missing. This setting should be ignored, however, as there is no upscaling involved at 2160p.

Image Doubling: N/A.

Upscaling Refinement: N/A.

Artifact Removal: Artifact removal includes Debanding, Deringing, Deblocking and Denoising. I typically choose to leave Debanding enabled at a low value, but this should be less of an issue with 10-bit 4K UHD sources compressed by HEVC. So we will save debanding for other profiles.

Deringing, Deblocking and Denoising are not typically general use settings. These types of artifacts are less common, or the artifact removal algorithm can be guilty of smoothing an otherwise clean source. If you want to use these algorithms with your worst cases, try using madVR's keyboard shortcuts. This will allow you to quickly turn the algorithm on and off with your keyboard when needed and all profiles will simply reset when the video is finished.

Used in small amounts, artifact removal can improve image quality without having a significant impact on image detail. Some choose to offset any loss of image sharpness by adding a small amount of sharpening shaders.

Deblocking is useful for cleaning up compressed video. Even sources that have undergone light compression can benefit from it without harming image detail when low values are used.

Deringing is very effective for any sources with noticeable edge enhancement, and Denoising will harm image detail but can often be the only way to remove bothersome video noise or film grain. Some may believe Deblocking, Deringing or Denoising are general use settings, while others may not.

Image Enhancements: It should be unnecessary to apply sharpening shaders to the image as the source is already assumed to be of high quality. If your display is calibrated, the image you get should approximate the same image seen on the original mastering monitor. Adding some image enhancements may still be attractive for those who feel chroma upscaling alone is not doing enough to create a sharp picture and want more depth and texture detail.

Dithering: The last step before presentation. The difference between Ordered Dithering and Error Diffusion is quite small, especially if the bit depth is 8-bits or greater. But if you have the resources, you might as well use them, and Error Diffusion will produce a small quality improvement. The slight performance difference between Ordered Dithering and Error Diffusion is a way to save a few resources when you need them. You aren't supposed to see dithering, anyways.

With madVR set to 8-bit output, I would recommended

Error Diffusion. Reducing a source from 10-bits to 8-bits with dithering invites the use of higher-quality dithering.

Both Medium and High profiles use Error Diffusion 2.
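To show why dithering matters when going from 10-bit to 8-bit, here is a minimal sketch of the general idea behind error diffusion, reduced to a single row of pixels. This is not madVR's actual algorithm (its internals are not described here); the function names and the one-dimensional error carry are my own simplifications for illustration.

```python
import math

def round_to_8bit(samples_10bit):
    """Plain rounding from 10-bit (0-1023) to 8-bit (0-255), no dithering."""
    return [min(255, math.floor(s / 4 + 0.5)) for s in samples_10bit]

def error_diffusion_to_8bit(samples_10bit):
    """Simplified 1-D error diffusion (illustrative only).

    Each pixel's rounding error is carried over to the next pixel,
    so the average level of the row is preserved.
    """
    out = []
    carry = 0.0
    for s in samples_10bit:
        target = s / 4 + carry          # ideal 8-bit value plus inherited error
        q = max(0, min(255, math.floor(target + 0.5)))
        carry = target - q              # error passed on to the next pixel
        out.append(q)
    return out

# A flat 10-bit patch at level 513 sits between two 8-bit steps (128.25).
row = [513] * 400
plain = round_to_8bit(row)
dithered = error_diffusion_to_8bit(row)
plain_mean = sum(plain) / len(plain)
dithered_mean = sum(dithered) / len(dithered)
```

Plain rounding flattens the whole patch to 128, while the diffused version mixes 128s and 129s so that the average stays at the original level. That in-between level is what keeps smooth gradients from turning into visible bands.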

HDR: For HDR10 content, read the instructions in Devices -> HDR. Simple passthrough involves a few checkboxes. AMD users must output from madVR at 10-bits (but 8-bit output from the GPU is still possible).

Medium:

- Chroma: NGU Anti-Alias (medium)

- Downscaling: SSIM 1D 100% + LL + AR

- Image upscaling: Jinc + AR

- Image doubling: Off

- Upscaling refinement: Off

- Artifact removal - Debanding: Off

- Artifact removal - Deringing: Off

- Artifact removal - Deblocking: Off

- Artifact removal - Denoising: Off

- Image enhancements: Off

- Dithering: Error Diffusion 2

High:

- Chroma: NGU Anti-Alias (high)

- Downscaling: SSIM 1D 100% + LL + AR

- Image upscaling: Jinc + AR

- Image doubling: Off

- Upscaling refinement: Off

- Artifact removal - Debanding: Off

- Artifact removal - Deringing: Off

- Artifact removal - Deblocking: Off

- Artifact removal - Denoising: Off

- Image enhancements: Off

- Dithering: Error Diffusion 2

Profile: "Tone Mapping HDR"

This profile makes one small adjustment to the one above for anyone who has tone map HDR using pixel shaders enabled. madVR’s tone mapping can be very resource-heavy with all of the HDR enhancements enabled. To make room, I would recommend simply reducing the value of chroma upscaling to Bicubic60 + AR. Bicubic is more than acceptable as a basic chroma upscaler, and chroma upscaling has nowhere near the impact on image quality that madVR’s tone mapping does.

HDR to SDR Tone Mapping Explained

Recommended checkboxes:

color tweaks for fire & explosions: disabled or balanced

highlight recovery strength: medium-high

measure each frame's peak luminance: checked

tone map HDR using pixel shaders:

- Chroma: Bicubic60 + AR

- Downscaling: SSIM 1D 100% + LL + AR

- Image upscaling: Jinc + AR

- Image doubling: Off

- Upscaling refinement: Off

- Artifact removal - Debanding: Off

- Artifact removal - Deringing: Off

- Artifact removal - Deblocking: Off

- Artifact removal - Denoising: Off

- Image enhancements: Off

- Dithering: Error Diffusion 2

Profile: "1080p"

1080p -> 2160p

1920 x 1080 -> 3840 x 2160

Increase in pixels: 4x

Scaling factor: 2x

A 1080p source requires image upscaling.

For upscaling FHD content to UHD, image doubling is a perfect match for the 2x resize.
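The "increase in pixels" and "scaling factor" figures quoted for each profile are simple arithmetic. Here is a small sketch of how they can be reproduced. The function name is my own, and I am assuming the scaling factor is measured on the vertical axis, which matches the numbers used in this guide.

```python
def scaling_summary(src_w, src_h, dst_w=3840, dst_h=2160):
    """Return (increase in pixels, scaling factor) for a source -> output resize.

    The scaling factor is taken from the height, as in the profile headings.
    Note that a 4:3 source such as 640 x 480 has a larger horizontal factor.
    """
    pixel_increase = (dst_w * dst_h) / (src_w * src_h)
    scaling_factor = dst_h / src_h
    return pixel_increase, scaling_factor

fhd = scaling_summary(1920, 1080)   # (4.0, 2.0)
hd = scaling_summary(1280, 720)     # (9.0, 3.0)
sd = scaling_summary(640, 480)      # (27.0, 4.5)
```

The 2x factor for 1080p is the reason image doubling fits so neatly here: a single doubling pass lands exactly on 2160p with nothing left over to resize.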

Chroma Upscaling: NGU Anti-Alias is selected. Lowering the value of chroma upscaling is an option when attempting to increase the quality of image doubling. Always try to maximize Luma doubling first, if possible. This is especially true if your display converts all 4:4:4 inputs to 4:2:2, as chroma upscaling could be wasted by the display's processing. The larger quality improvements will come from improving the luma layer, not the chroma, and luma always retains its full resolution when it reaches the display.

Image Downscaling: N/A.

Image Upscaling: N/A.

Image Doubling: NGU Sharp is used to double the image.

NGU Sharp is a staple choice for upscaling in madVR, as it produces the highest perceived resolution without oversharpening the image or usually requiring any enhancement from sharpening shaders.

Image doubling performs a 2x resize.

To calibrate image doubling, select image upscaling -> doubling -> NGU Sharp and use the drop-down menus. Set Luma doubling to its maximum value (very high) and everything else to let madVR decide.

If the maximum luma quality value is too aggressive, reduce Luma doubling until rendering times sit comfortably under the movie frame interval (aim for 35-37ms with a 24 fps source, whose frame interval is roughly 41.7ms). Leave the other settings to madVR.

Luma quality always comes first and is most important.
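As a rough way to sanity-check rendering times against the frame interval, here is a small sketch. The `headroom` value of 0.85 is my own assumption, chosen because it lands close to the 35-37ms target for 24 fps content. Read the actual rendering time from madVR's OSD.

```python
def frame_interval_ms(fps):
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / fps

def fits_budget(render_ms, fps, headroom=0.85):
    """True if the measured rendering time leaves some safety margin.

    headroom is an assumed safety factor, not a madVR setting.
    """
    return render_ms <= frame_interval_ms(fps) * headroom

interval_24 = frame_interval_ms(24)       # about 41.67 ms
ok_at_35ms = fits_budget(35.0, 24)        # within the margin
too_slow_at_40ms = fits_budget(40.0, 24)  # under the interval, but no margin left
```

The margin matters because rendering times fluctuate from frame to frame. A setting that averages just under the frame interval will still drop frames on the heavier scenes.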

Think of let madVR decide as madshi's expert recommendations for each upscaling scenario. This will help you avoid wasting resources on settings which do very little to improve image quality. So, let madVR decide. When you become more advanced, you may consider manually adjusting these settings, but only expect small improvements.

Luma & Chroma are upscaled separately:

Luma: RGB -> Y'CbCr 4:4:4 -> Y -> 1080p -> 2160p

Chroma: RGB -> Y'CbCr 4:4:4 -> CbCr -> 1080p -> 2160p

Keep in mind, NGU very high is three times slower than NGU high while only producing a small improvement in image quality. Attempting to use a setting of very high at all costs without considering GPU stress or high rendering times is not always a good idea.

NGU very high is the best way to upscale, but only if you can accommodate the considerable performance hit. Higher values of NGU will cause fine detail to be slightly more defined, but the overall appearance produced by each type (Anti-Alias, Soft, Standard, Sharp) will remain identical through each quality level.

Upscaling Refinement: NGU Sharp shouldn’t require any added sharpening. If you want the image to be sharper, you can check some options here such as crispen edges or sharpen edges.

Artifact Removal: Debanding is set to low/medium. Most 8-bit sources, even uncompressed Blu-rays, can display small amounts of banding in large gradients because they don't compress as well as 10-bit sources. So I find it helpful to use a small amount of debanding to help with these artifacts as they are so common with 8-bit video. To avoid removing image detail, a setting of low/medium or medium/medium is advisable. You might choose to disable this if you desire the sharpest image possible.

Image Enhancements: N/A.

Dithering: Both Medium and High profiles use Error Diffusion 2.

Medium:

- Chroma: NGU Anti-Alias (low)

- Downscaling: SSIM 1D 100% + LL + AR

- Image upscaling: Off

- Image doubling: NGU Sharp

- <-- Luma doubling: high

- <-- Luma quadrupling: let madVR decide (direct quadruple - NGU Sharp (high))

- <-- Chroma: let madVR decide (Bicubic60 + AR)

- <-- Doubling: let madVR decide (scaling factor 1.2x (or bigger))

- <-- Quadrupling: let madVR decide (scaling factor 2.4x (or bigger))

- <-- Upscaling algo: let madVR decide (Bicubic60 + AR)

- <-- Downscaling algo: let madVR decide (Bicubic150 + LL + AR)

- Upscaling refinement: Off

- Artifact removal - Debanding: low/medium

- Artifact removal - Deringing: Off

- Artifact removal - Deblocking: Off

- Artifact removal - Denoising: Off

- Image enhancements: Off

- Dithering: Error Diffusion 2

High:

- Chroma: NGU Anti-Alias (medium)

- Downscaling: SSIM 1D 100% + LL + AR

- Image upscaling: Off

- Image doubling: NGU Sharp

- <-- Luma doubling: very high

- <-- Luma quadrupling: let madVR decide (direct quadruple - NGU Sharp (very high))

- <-- Chroma: let madVR decide (NGU medium)

- <-- Doubling: let madVR decide (scaling factor 1.2x (or bigger))

- <-- Quadrupling: let madVR decide (scaling factor 2.4x (or bigger))

- <-- Upscaling algo: let madVR decide (Jinc + AR)

- <-- Downscaling algo: let madVR decide (SSIM 1D 100% + LL + AR)

- Upscaling refinement: Off

- Artifact removal - Debanding: low/medium

- Artifact removal - Deringing: Off

- Artifact removal - Deblocking: Off

- Artifact removal - Denoising: Off

- Image enhancements: Off

- Dithering: Error Diffusion 2

Profile: "720p"

720p -> 2160p

1280 x 720 -> 3840 x 2160

Increase in pixels: 9x

Scaling factor: 3x

At a 3x scaling factor, it is possible to quadruple the image.

The image is upscaled 4x (720p to 2880p) and then downscaled by 25% to match the 3x output resolution (2160p). This is the lone change from Profile 1080p. If quadrupling is used, it is best combined with a sharp Image downscaling algorithm such as SSIM 1D or Bicubic150.
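To see how the doubling and quadrupling thresholds play out for each source resolution, here is a small sketch. It assumes the activation thresholds shown in the profile lists (doubling at 1.2x or bigger, quadrupling at 2.4x or bigger). The function name and return layout are my own, and madVR's real decision logic may consider more than this.

```python
def plan_image_doubling(src_h, dst_h, double_at=1.2, quadruple_at=2.4):
    """Sketch of the NGU stage plus the leftover resize.

    Returns (ngu_factor, intermediate_height, residual_factor, residual_step).
    Thresholds follow the "scaling factor ... (or bigger)" values in the
    profile lists; this is an illustration, not madVR's actual code.
    """
    factor = dst_h / src_h
    if factor >= quadruple_at:
        ngu = 4
    elif factor >= double_at:
        ngu = 2
    else:
        ngu = 1
    intermediate = src_h * ngu
    residual = dst_h / intermediate
    if residual < 1:
        step = "downscale"
    elif residual > 1:
        step = "upscale"
    else:
        step = "none"
    return ngu, intermediate, residual, step

plan_1080p = plan_image_doubling(1080, 2160)  # 2x, lands exactly on 2160p
plan_720p = plan_image_doubling(720, 2160)    # 4x to 2880p, then downscale
plan_sd = plan_image_doubling(480, 2160)      # 4x to 1920p, then upscale
```

The 720p case is the only one of the three where the leftover step is a downscale, which is why the choice of a sharp downscaling algorithm matters for this profile.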

Chroma Upscaling: NGU Anti-Alias is selected.

Image Downscaling: N/A.

Image Upscaling: N/A.

Image Doubling: NGU Sharp is the selected image doubler.

Image doubling performs a 4x resize combined with image downscaling.

Luma & Chroma are upscaled separately:

Luma: RGB -> Y'CbCr 4:4:4 -> Y -> 720p -> 2880p -> 2160p

Chroma: RGB -> Y'CbCr 4:4:4 -> CbCr -> 720p -> 2160p

Upscaling Refinement: NGU Sharp shouldn’t require any added sharpening. If you want the image to be sharper, you can check some options here such as crispen edges or sharpen edges.

soften edges is used to correct any oversharp edges created by using NGU Sharp with such a large scaling factor. soften edges will apply a very small correction to all edges without having much impact on image detail. Some may also want to experiment with add grain with large upscales for similar reasons.

NGU Sharp | NGU Sharp + soften edges + add grain | Jinc + AR

Artifact Removal: Debanding is set to low/medium.

Image Enhancements: N/A.

Dithering: Both Medium and High profiles use Error Diffusion 2.

Medium:

- Chroma: NGU Anti-Alias (low)

- Downscaling: SSIM 1D 100% + LL + AR

- Image upscaling: Off

- Image doubling: NGU Sharp

- <-- Luma doubling: high

- <-- Luma quadrupling: let madVR decide (direct quadruple - NGU Sharp (high))

- <-- Chroma: let madVR decide (Bicubic60 + AR)

- <-- Doubling: let madVR decide (scaling factor 1.2x (or bigger))

- <-- Quadrupling: let madVR decide (scaling factor 2.4x (or bigger))

- <-- Upscaling algo: let madVR decide (Bicubic60 + AR)

- <-- Downscaling algo: let madVR decide (Bicubic150 + LL + AR)

- Upscaling refinement: soften edges (2)

- Artifact removal - Debanding: low/medium

- Artifact removal - Deringing: Off

- Artifact removal - Deblocking: Off

- Artifact removal - Denoising: Off

- Image enhancements: Off

- Dithering: Error Diffusion 2

High:

- Chroma: NGU Anti-Alias (medium)

- Downscaling: SSIM 1D 100% + LL + AR

- Image upscaling: Off

- Image doubling: NGU Sharp

- <-- Luma doubling: very high

- <-- Luma quadrupling: let madVR decide (direct quadruple - NGU Sharp (very high))

- <-- Chroma: let madVR decide (NGU medium)

- <-- Doubling: let madVR decide (scaling factor 1.2x (or bigger))

- <-- Quadrupling: let madVR decide (scaling factor 2.4x (or bigger))

- <-- Upscaling algo: let madVR decide (Jinc + AR)

- <-- Downscaling algo: let madVR decide (SSIM 1D 100% + LL + AR)

- Upscaling refinement: soften edges (2)

- Artifact removal - Debanding: low/medium

- Artifact removal - Deringing: Off

- Artifact removal - Deblocking: Off

- Artifact removal - Denoising: Off

- Image enhancements: Off

- Dithering: Error Diffusion 2

Profile: "SD"

SD -> 2160p

640 x 480 -> 3840 x 2160

Increase in pixels: 27x

Scaling factor: 4.5x

The final resize, SD to 2160p, is a monster (4.5x!). This is perhaps the only scenario where image quadrupling is not only useful but necessary to maintain the integrity of the original image.

The image is upscaled 4x by image doubling (480p to 1920p), and the remaining step from 4x to 4.5x (1920p to 2160p) is handled by the Upscaling algo.

Chroma Upscaling: NGU Anti-Alias is selected.

Image Downscaling: N/A.

Image Upscaling: N/A.

Image Doubling: Because we are upscaling SD sources, NGU Standard will be substituted for NGU Sharp. You may find that NGU Sharp can start to look a little unnatural or "plastic" when a lower-quality source is upscaled to a much higher resolution. It can be beneficial to replace NGU Sharp with a slightly softer variant of NGU such as NGU Standard to reduce this plastic appearance without losing too much of the desired sharpness and detail. The even softer NGU Anti-Alias is also an option.

Image doubling performs a 4x resize combined with image upscaling.

Luma & Chroma are upscaled separately:

Luma: RGB -> Y'CbCr 4:4:4 -> Y -> 480p -> 1920p -> 2160p

Chroma: RGB -> Y'CbCr 4:4:4 -> CbCr -> 480p -> 2160p

Upscaling Refinement: If you want the image to be sharper, try adding a small level of crispen edges or sharpen edges.

Both soften edges and add grain are used to mask some of the lost texture detail caused by upsampling a lower-quality SD source to a much higher resolution. When performing such a large upscale, the upscaler can sometimes keep the edges of the image quite sharp, but still fail to recreate all of the necessary texture detail. The grain used by madVR will add some missing texture detail to the image without looking noisy or unnatural due to its very fine structure.

Artifact Removal: Debanding is set to low/medium.

Image Enhancements: N/A.

Dithering: Both Medium and High profiles use Error Diffusion 2.

Medium:

- Chroma: NGU Anti-Alias (low)

- Downscaling: SSIM 1D 100% + LL + AR

- Image upscaling: Off

- Image doubling: NGU Standard

- <-- Luma doubling: high

- <-- Luma quadrupling: let madVR decide (direct quadruple - NGU Standard (high))

- <-- Chroma: let madVR decide (Bicubic60 + AR)

- <-- Doubling: let madVR decide (scaling factor 1.2x (or bigger))

- <-- Quadrupling: let madVR decide (scaling factor 2.4x (or bigger))

- <-- Upscaling algo: let madVR decide (Bicubic60 + AR)

- <-- Downscaling algo: let madVR decide (Bicubic150 + LL + AR)

- Upscaling refinement: soften edges (1); add grain (3)

- Artifact removal - Debanding: low/medium

- Artifact removal - Deringing: Off

- Artifact removal - Deblocking: Off

- Artifact removal - Denoising: Off

- Image enhancements: Off

- Dithering: Error Diffusion 2

High:

- Chroma: NGU Anti-Alias (medium)

- Downscaling: SSIM 1D 100% + LL + AR

- Image upscaling: Off

- Image doubling: NGU Standard

- <-- Luma doubling: very high

- <-- Luma quadrupling: let madVR decide (direct quadruple - NGU Standard (very high))

- <-- Chroma: let madVR decide (NGU medium)

- <-- Doubling: let madVR decide (scaling factor 1.2x (or bigger))

- <-- Quadrupling: let madVR decide (scaling factor 2.4x (or bigger))

- <-- Upscaling algo: let madVR decide (Jinc + AR)

- <-- Downscaling algo: let madVR decide (SSIM 1D 100% + LL + AR)

- Upscaling refinement: soften edges (1); add grain (3)

- Artifact removal - Debanding: low/medium

- Artifact removal - Deringing: Off

- Artifact removal - Deblocking: Off

- Artifact removal - Denoising: Off

- Image enhancements: Off

- Dithering: Error Diffusion 2

Creating madVR Profiles

These profiles can be translated into madVR profile rules.

Add this code to each profile group:

if (srcHeight > 1080) "2160p"

else if (srcWidth > 1920) "2160p"

else if (srcHeight > 720) and (srcHeight <= 1080) "1080p"

else if (srcWidth > 1280) and (srcWidth <= 1920) "1080p"

else if (srcHeight > 576) and (srcHeight <= 720) "720p"

else if (srcWidth > 960) and (srcWidth <= 1280) "720p"

else if (srcHeight <= 576) and (srcWidth <= 960) "SD"

OR

if (deintFps <= 25) and (srcHeight > 1080) "2160p25"

else if (deintFps <= 25) and (srcWidth > 1920) "2160p25"

else if (deintFps > 25) and (srcHeight > 1080) "2160p60"

else if (deintFps > 25) and (srcWidth > 1920) "2160p60"

else if (deintFps <= 25) and ((srcHeight > 720) and (srcHeight <= 1080)) "1080p25"

else if (deintFps <= 25) and ((srcWidth > 1280) and (srcWidth <= 1920)) "1080p25"

else if (deintFps > 25) and ((srcHeight > 720) and (srcHeight <= 1080)) "1080p60"

else if (deintFps > 25) and ((srcWidth > 1280) and (srcWidth <= 1920)) "1080p60"

else if (deintFps <= 25) and ((srcHeight > 576) and (srcHeight <= 720)) "720p25"

else if (deintFps <= 25) and ((srcWidth > 960) and (srcWidth <= 1280)) "720p25"

else if (deintFps > 25) and ((srcHeight > 576) and (srcHeight <= 720)) "720p60"

else if (deintFps > 25) and ((srcWidth > 960) and (srcWidth <= 1280)) "720p60"

else if (deintFps <= 25) and ((srcWidth <= 960) and (srcHeight <= 576)) "576p25"

else if (deintFps > 25) and ((srcWidth <= 960) and (srcHeight <= 576)) "576p60"
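If you want to check which profile a particular file would land in before loading it, the rules above can be mirrored outside madVR. The Python below is only a test harness of my own (the function names are hypothetical and none of this is madVR syntax). It follows the same order of comparisons as the two rule sets.

```python
def select_profile(src_width, src_height):
    """Mirror of the first rule set: pick a profile by source resolution."""
    if src_height > 1080:
        return "2160p"
    elif src_width > 1920:
        return "2160p"
    elif 720 < src_height <= 1080:
        return "1080p"
    elif 1280 < src_width <= 1920:
        return "1080p"
    elif 576 < src_height <= 720:
        return "720p"
    elif 960 < src_width <= 1280:
        return "720p"
    elif src_height <= 576 and src_width <= 960:
        return "SD"
    return None  # not reachable with the thresholds above

def select_profile_with_fps(src_width, src_height, deint_fps):
    """Mirror of the second rule set: resolution plus frame-rate suffix."""
    base = select_profile(src_width, src_height)
    if base == "SD":
        base = "576p"  # the second rule set names the SD profiles 576p25/576p60
    suffix = "25" if deint_fps <= 25 else "60"
    return base + suffix

cropped_bluray = select_profile(1920, 800)     # letterbox cropped from a 1080p disc
cropped_720p = select_profile(1280, 536)       # cropped 720p encode
dvd_ntsc = select_profile_with_fps(720, 480, 59.94)
```

Checking both width and height is what keeps cropped sources in the right profile: a 1920 x 800 file is caught by the height test for "1080p", while a 1280 x 536 file fails every height test and is caught by the width test for "720p".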

How to Configure madVR Profile Rules

Sony Reality Creation Processing Emulation

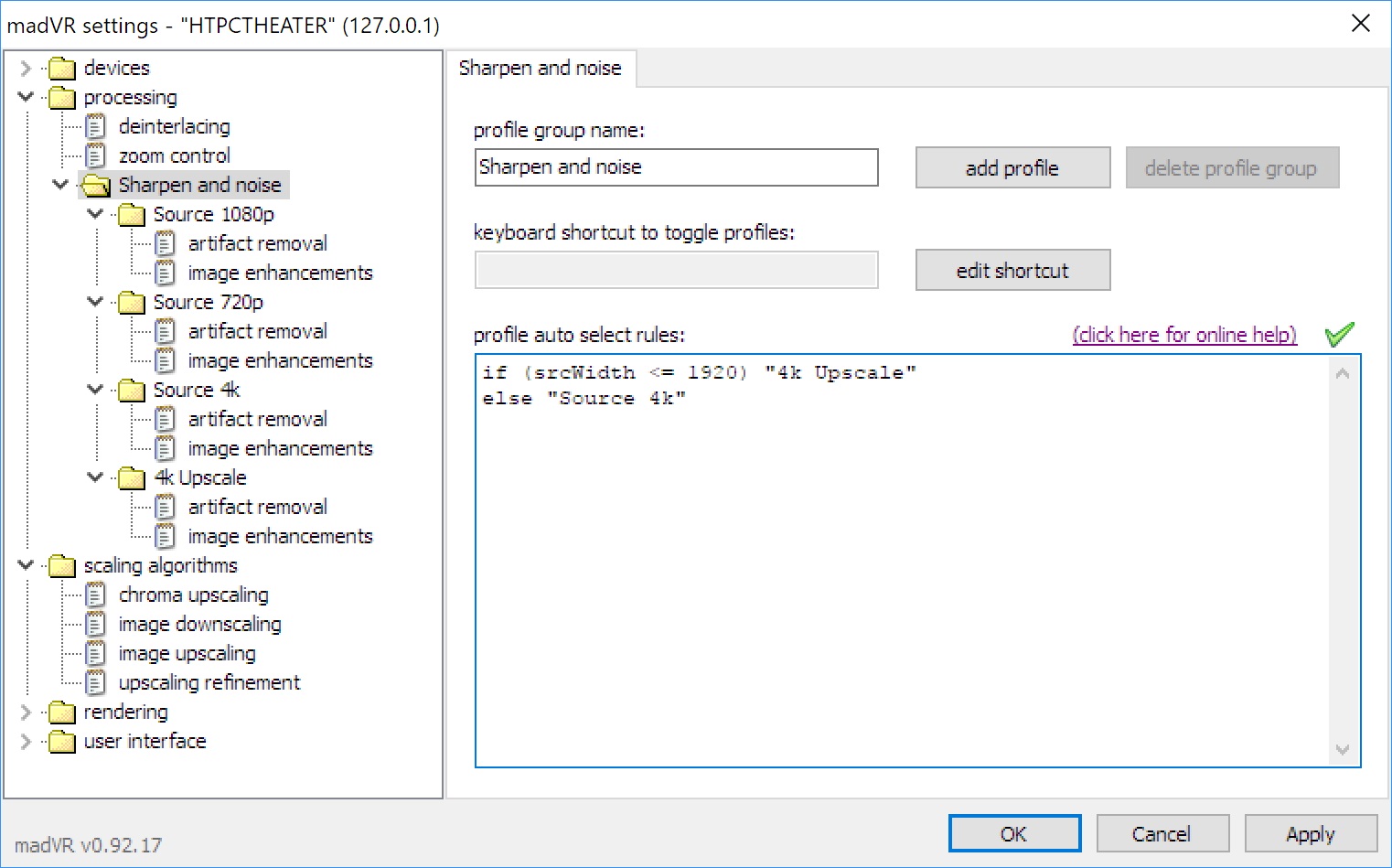

markmon1 at AVS Forum devised a set of settings that are meant to emulate the video processing used by Sony projectors and TVs. Sony’s Reality Creation processing combines advanced upscaling, sharpening/enhancement and noise reduction to reduce image noise while still rendering a very sharp image.

To match the result of Reality Creation in madVR, markmon lined up a Sony VPL-VW675ES and JVC DLA-RS640 side-by-side with various settings checked in madVR until the projected image from the JVC resembled the projected image from the Sony. The settings profiles created for 1080p ("4k Upscale") and 4K UHD content utilize sharp upscaling in madVR combined with a little bit of sharpening shaders, noise reduction and artifact removal, all intended to slightly lower the noise floor of the image without compromising too much detail or sharpness.

Click here for a gallery of settings for Sony Reality Creation emulation in madVR